🤝 AidfulAI Newsletter #18: Supercharge Your Prompting Experience With a Text Expander

Dear curious minds,

Welcome to the ultimate newsletter for those interested in Artificial Intelligence (AI) and Personal Knowledge Management (PKM). In this week's newsletter, we'll dive into several intriguing developments:

OpenAI's fine-tuning support for GPT-3.5 Turbo reduces prompt size and saves time and possibly costs, but increases the per-token price.

Midjourney's new inpainting feature impresses with easy application but is limited to Midjourney-generated images.

Meta's Code Llama offers impressive code completion and debugging capabilities, while GPT-4 remains superior in some areas.

Espanso, a text expander, boosts productivity with its match-replacement system, and a new open-source Android app can import its packages.

Coin Stories podcast episode explores AI's future with Brian Roemmele, emphasizing the value of local AI.

I hope you enjoy reading this newsletter and find it useful and informative. Thank you for your support and interest!

Major AI News

🔄🚀 Fine-Tuning Support for GPT-3.5 Turbo Launched by OpenAI

Testers reduced prompt size by up to 90% by fine-tuning instructions into the model, which saves inference time and possibly costs while improving results through more reliable output formatting and a consistent custom tone.

Running a fine-tuned GPT-3.5 Turbo model costs 8 times as much per token as the default model (source):

Default model with 4K context size:

input: $0.0015 / 1K tokens

output: $0.002 / 1K tokens

Fine-tuned model:

input: $0.012 / 1K tokens

output: $0.016 / 1K tokens
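Whether the shorter prompt makes up for the higher per-token price can be checked with a little arithmetic. The sketch below uses the per-1K-token prices listed above; the workload (a 1,000-token prompt that shrinks by 90% after fine-tuning and a 200-token answer) is a hypothetical assumption, and any one-off training cost is ignored.

```python
# Per-1K-token prices in USD, as listed above (GPT-3.5 Turbo, 4K context).
DEFAULT = {"input": 0.0015, "output": 0.002}
FINE_TUNED = {"input": 0.012, "output": 0.016}


def request_cost(prices: dict, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one request with the given token counts."""
    return (input_tokens * prices["input"] + output_tokens * prices["output"]) / 1000


def break_even_ratio(reduction: float = 0.9) -> float:
    """Prompt/output token ratio above which the fine-tuned model is cheaper.

    `reduction` is the assumed fraction of prompt tokens removed by fine-tuning.
    """
    saved_per_prompt_token = DEFAULT["input"] - (1 - reduction) * FINE_TUNED["input"]
    extra_per_output_token = FINE_TUNED["output"] - DEFAULT["output"]
    if saved_per_prompt_token <= 0:
        return float("inf")  # the shortened prompt never pays for the higher price
    return extra_per_output_token / saved_per_prompt_token


# Hypothetical workload: a 1,000-token prompt shrunk by 90% and a 200-token answer.
prompt_tokens, output_tokens = 1000, 200
default_cost = request_cost(DEFAULT, prompt_tokens, output_tokens)
fine_tuned_cost = request_cost(FINE_TUNED, prompt_tokens // 10, output_tokens)

print(f"default model:    ${default_cost:.4f} per request")
print(f"fine-tuned model: ${fine_tuned_cost:.4f} per request")
print(f"break-even prompt/output ratio: {break_even_ratio():.1f}")
```

Under these assumptions, the fine-tuned model costs about $0.0044 per request versus $0.0019 for the default model, because every output token is 8 times as expensive. With a 90% shorter prompt, it only becomes cheaper when the original prompt is roughly 47 times longer than the answer, which is why the cost savings are possible but not guaranteed.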

OpenAI announced that fine-tuning for GPT-4 will follow this fall.

More information is available in OpenAI's official blog article.

My take: This could lead to significant savings in inference time and costs if you build a service on top of GPT-3.5. However, for regular users, fine-tuning should be the last resort: first try to achieve the desired result with few-shot prompting and retrieval-augmented generation, as described in this article.
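Few-shot prompting puts the instructions and a handful of worked examples into every request instead of into the model weights, which is exactly the prompt overhead that fine-tuning can remove. A minimal sketch, assuming the role/content chat-message format used by OpenAI's Chat Completions API; the helper name, the instruction, and the example pairs are hypothetical, and no API call is made.

```python
def build_few_shot_messages(instruction: str, examples: list, query: str) -> list:
    """Hypothetical helper: system instruction, example pairs, then the real query."""
    messages = [{"role": "system", "content": instruction}]
    for user_text, assistant_text in examples:
        # Each example is shown as a user turn followed by the desired answer.
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    messages.append({"role": "user", "content": query})
    return messages


examples = [
    ("The meeting moved to Friday.", '{"event": "meeting", "day": "Friday"}'),
    ("Lunch is on Tuesday.", '{"event": "lunch", "day": "Tuesday"}'),
]
messages = build_few_shot_messages(
    "Extract the event and the day as JSON.", examples, "The review is on Monday."
)
for message in messages:
    print(message["role"], "->", message["content"])
```

The examples teach the output format on the fly; the price is that they are sent, and billed, with every single request.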

🖌️✨ Midjourney Inpainting: More Control Over AI Image Generation

After Photoshop's Generative Fill, Midjourney has finally released its own inpainting feature, which allows you to modify parts of an image.

Many users share their results on 𝕏, often stating that they are impressed by how easy the feature is to use and how well it works.

Changing the dress of the person created with Midjourney. [source]

More examples and additional insights are shared in this Let's Try Ai article.

My take: It is nice to have more control over image generation and to fix areas you don't like or imagine differently. The biggest drawback is that you can only use it on images generated with Midjourney, not on your own images as a starting point. The latter remains the big advantage of Photoshop's Generative Fill feature.

Privacy-Friendly AI

⌨🦙 Code Llama: A New LLM for Coding from Meta

Meta released Code Llama, a new large language model for coding built on top of Llama 2.

It is released under the same license as Llama 2, which allows free use for research and commercial purposes.

There are three versions, each available in three sizes (7B, 13B, and 34B parameters):

Code Llama

Code Llama - Instruct

Code Llama - Python

Overview of how the different models of Code Llama have been created. [source]

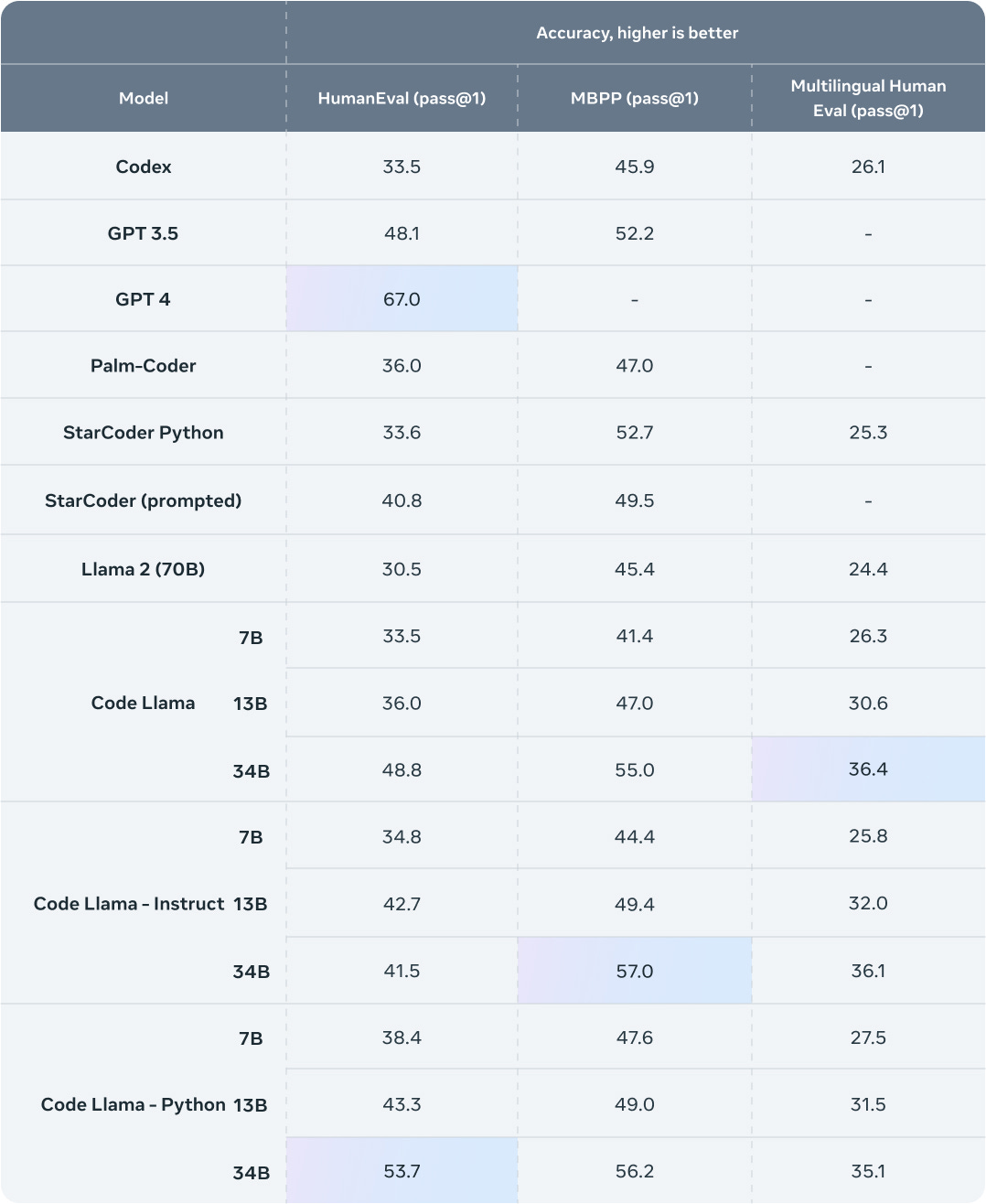

Code Llama outperforms other state-of-the-art publicly available models on code tasks, and at least one of its versions also beats GPT-3.5. GPT-4 remains superior so far, at least on the HumanEval Python benchmark discussed in an earlier issue.

Benchmark results of Code Llama and other models. [source]

Besides the information in the official blog article, Meta shares further insights in the research paper, the training recipes in the GitHub repository, and the model weights.

The Hugging Face team states that a demo will follow soon on the Code Llama community page.

My take: While GPT-4 may still be superior in some areas, Code Llama's potential for code completion and debugging is impressive. I'm excited to see how this technology will continue to evolve and improve, and how it will impact the future of coding.

PKM and AI

⏱⌨ Espanso: A Text Expander That Saves Time and Typing When Prompting AI

The beauty of Espanso lies in its match-replacement system. You can set up a 'match' (a trigger) that Espanso replaces with predefined text as soon as you type it. For example, typing ":addr" could output your whole address.

The same concept works for prompts you have so far typed manually into LLMs like ChatGPT, Claude, or Bard. Especially if you use several tools and services, it helps to have one approach that works across all of them.
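To make the match-replacement idea concrete, here is a conceptual sketch of what a text expander does when the text you have just typed ends with a trigger. It is not Espanso's implementation: in Espanso itself, matches are defined in YAML files, each entry with a `trigger` and a `replace` key. The triggers, the address, and the prompt texts below are hypothetical examples.

```python
# Hypothetical trigger table: trigger -> replacement text.
MATCHES = {
    ":addr": "Jane Doe, 1 Example Street, 12345 Sample City",
    ":sum": "Summarize the following text in three bullet points:",
    ":fix": "Fix the grammar and spelling of the following text:",
}


def expand(buffer: str, matches: dict = MATCHES) -> str:
    """Replace a trigger at the end of the typed buffer with its replacement text."""
    for trigger, replacement in matches.items():
        if buffer.endswith(trigger):
            return buffer[: -len(trigger)] + replacement
    return buffer  # no trigger typed: leave the text untouched


print(expand("Ship it to :addr"))
print(expand(":sum"))
```

Because the expansion happens on the keyboard side, the same ":sum" prompt works in ChatGPT, Claude, Bard, or any other text field.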

The search bar window is a neat way to use Espanso, as you don’t need to remember the exact trigger to replace text. To open it, use either of these options:

ALT + Space (Option + Space on macOS)

Click the status icon and select "Open Search bar" (Windows and macOS)
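Conceptually, the search bar lets you look up a snippet by any keyword instead of its exact trigger. A rough sketch of that idea using a simple case-insensitive substring search; this is an illustration, not how Espanso's search bar is implemented, and the `search` helper and the snippets are hypothetical.

```python
# Hypothetical snippets: trigger -> replacement text.
MATCHES = {
    ":addr": "Jane Doe, 1 Example Street, 12345 Sample City",
    ":sum": "Summarize the following text in three bullet points:",
    ":fix": "Fix the grammar and spelling of the following text:",
}


def search(query: str, matches: dict) -> list:
    """Return triggers whose trigger or replacement contains the query (case-insensitive)."""
    q = query.lower()
    return [t for t, r in matches.items() if q in t.lower() or q in r.lower()]


print(search("bullet", MATCHES))
print(search("TEXT", MATCHES))
```

Searching the replacement text as well as the trigger is what frees you from memorizing every shortcut.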

In June 2023, an open-source Android app that can import Espanso packages was released. Its code is available on GitHub, and the app can be installed from the Play Store.

My take: With time, you'll find it becoming an essential tool in your productivity arsenal. Keep exploring and refining your shortcuts to get the most out of it. Happy (less) typing!

Podcasts

🎙️💡 Exploring the Future of AI and Bitcoin with Brian Roemmele

In this episode of the Coin Stories podcast, Natalie Brunell interviews Brian Roemmele, a scientist, researcher, and analyst who has been exploring AI since he was a teenager.

They discuss topics such as human intelligence vs. machines, how ChatGPT works, the potential harms and benefits of AI, the future of Bitcoin and AI, deepfake concerns, and the possibility of living forever through AI.

Brian shares many interesting insights in this episode, e.g., that he proves ownership of his AI-generated content by invisibly embedding his signature in it.

My take: Brian Roemmele has been mentioned before in this newsletter, and I appreciate many of his ideas. One that stands out to me is the importance of having a local AI so that individuals don't have to share every detail with a cloud-based provider and become too reliant on them.

Disclaimer: This newsletter is written with the help of AI. I use AI as an assistant to generate and optimize the text. However, the amount of AI used varies depending on the topic and the content. I always curate and edit the text myself to ensure quality and accuracy. The opinions and views expressed in this newsletter are my own and do not necessarily reflect those of the sources or the AI models.