🤝 Dragon vs eagle: The AI battle heats up

Dear curious mind,

Today, we're exploring how Chinese language models are reshaping the global AI landscape. Alibaba's new model family claims to match state-of-the-art models from US tech giants. This rise also raises questions about bias, censorship, and the ethical implications of AI developed under different cultural and political systems.

In this issue:

💡 Shared Insight

How Chinese LLMs Challenge and Complement Western AI

📰 AI Update

Alibaba Unveils Massive Open-Source AI Model Family: Qwen2.5

🌟 Media Recommendation

Book: AI Superpowers: China, Silicon Valley, and the New World Order

💡 Shared Insight

How Chinese LLMs Challenge and Complement Western AI

To be honest, I don't know a lot about China, but the recent release of the Qwen2.5 family, including a 72B version claimed to be a new state-of-the-art foundation model, caught my attention. This open-source model from Alibaba, a Chinese firm, challenges the notion that cutting-edge AI is solely the domain of U.S. tech giants. I'm viewing this through a Western lens, and I assume that Chinese models carry Chinese cultural and political views.

All LLMs absorb biases from the vast amounts of human-generated text they are trained on. Training data tends to overrepresent the model's country of origin, which can lead to broader knowledge of, and more favorable views toward, that country. Let's examine these cultural differences in Chinese LLMs.

Traditional Chinese Medicine

A recent study compared the performance of Chinese and Western LLMs on Traditional Chinese Medicine (TCM) questions. Researchers found that Chinese models such as Qwen, GLM, and Ernie Bot significantly outperformed Western models such as GPT-4 and Claude 2 on a TCM licensing exam, with average accuracies of 78.4% vs. 35.9%. This likely reflects how much more prominent TCM is in Chinese culture and medical practice. Western models trained primarily on English data lack in-depth knowledge of this domain.

Political Censorship in Chinese Models

Chinese language models show political biases and censorship around sensitive topics. An analysis of Qwen2, the predecessor of Qwen2.5, found that it consistently refused to answer questions about the Tiananmen Square protests, Uyghur detention camps, and ways to bypass internet censorship when asked in English.

Interestingly, when asked the same questions in Chinese, the model was more likely to provide answers, but these were often nationalistic and aligned with official Chinese government positions. For example, when asked about Uyghur detention camps in Chinese, Qwen2 responded that this was "a complete lie made up by those with ill intentions to disrupt the prosperity and stability of Xinjiang and hinder China's development."

This suggests that Chinese language models are aligned through training and filtering to avoid criticism of the government and to promote officially sanctioned narratives. It's a striking example of how AI systems can reflect and reinforce the values and restrictions of their societies.

The Need for Diverse Perspectives

While the biases in Chinese models on political topics are concerning from a Western perspective, having alternatives to the dominant, US-biased AI is valuable. Yann LeCun, VP & Chief AI Scientist at Meta, stated in a LinkedIn post:

"A future in which everyone has access to a wide variety of AI assistants with a diverse collection of expertise, language ability, culture, value systems, political opinions, and interpretations of history is the future we want."

My take: All language models contain biases shaped by their training data and their creators' values. Chinese models show political censorship that would be unacceptable in Western democracies, but they excel in areas like Traditional Chinese Medicine. Instead of avoiding non-Western models, we should understand their strengths, limitations, and biases. Open-source models from diverse origins can help create a richer AI ecosystem that represents a broader range of knowledge and viewpoints.

📰 AI Update

Alibaba Unveils Massive Open-Source AI Model Family: Qwen2.5

The Qwen team announced the Qwen2.5 family of models in what it describes as the largest open-source AI model release in history.

The release includes general language models (Qwen2.5) and specialized models for coding (Qwen2.5-Coder) and mathematics (Qwen2.5-Math) in sizes ranging from 0.5B to 72B parameters.

Qwen2.5: 0.5B, 1.5B, 3B, 7B, 14B, 32B, 72B

Qwen2.5-Coder: 1.5B, 7B (32B coming soon)

Qwen2.5-Math: 1.5B, 7B, 72B

The larger models have a 128K-token context window and strong multilingual capabilities. They support over 29 languages, including Chinese, English, French, Spanish, Portuguese, German, Italian, Russian, Japanese, Korean, Vietnamese, Thai, and Arabic.

The team benchmarked their 72B model against leading open-source models such as Llama-3.1 and Mistral Large 2. According to the team's results, it performs competitively despite its smaller size.

Even smaller models like Qwen2.5-3B are showing impressive capabilities, highlighting the trend towards efficient smaller language models.

The 3B model is released under the Qwen Research License Agreement, which prohibits commercial use. Both 72B models (Qwen2.5-72B and Qwen2.5-Math-72B) are covered by the Qwen License Agreement, which allows commercial applications with restrictions for large-scale deployments. All other released models are under the permissive Apache 2.0 license, which allows use, modification, and distribution, including for commercial purposes.

The models are available on Hugging Face and have already been integrated with Ollama.
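For readers who want to try the models locally, here is a minimal Python sketch that sends a prompt to Qwen2.5 through Ollama's local REST API (`/api/generate`). It assumes Ollama is running on its default port (11434) and that a Qwen2.5 model has already been pulled, for example with `ollama pull qwen2.5:7b`. The `qwen2.5:7b` tag and the helper names `build_request` and `ask` are illustrative assumptions, not part of the Qwen release.

```python
import json
import urllib.request

# Default endpoint of a locally running Ollama server (assumption: default port 11434).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "qwen2.5:7b") -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server.

    The model tag "qwen2.5:7b" is an assumed example; any pulled Qwen2.5 tag works.
    """
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "qwen2.5:7b") -> str:
    """Send the prompt to Ollama and return the generated text.

    With streaming disabled, Ollama replies with a single JSON object whose
    "response" field holds the full answer.
    """
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

With Ollama running, `ask("What is Traditional Chinese Medicine? Answer in one sentence.")` should return the model's reply as a string. The sketch sticks to the standard library and disables streaming so that the whole answer arrives in one response; the official Ollama client libraries or Hugging Face `transformers` are alternatives.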

Last week, the Qwen2-VL model family was released. These vision models support image understanding and video analysis. With this announcement, a 72B version was added to the family.

Alibaba also announced APIs for its flagship models: Qwen-Plus and Qwen-Turbo.

My take: This release of open-source models across different sizes and specialties is a major contribution to the AI community. It provides researchers and developers with a wide range of options to experiment with and build upon. However, independent benchmarks and real-world testing are needed to assess the capabilities, limitations and biases of these new models.

🌟 Media Recommendation

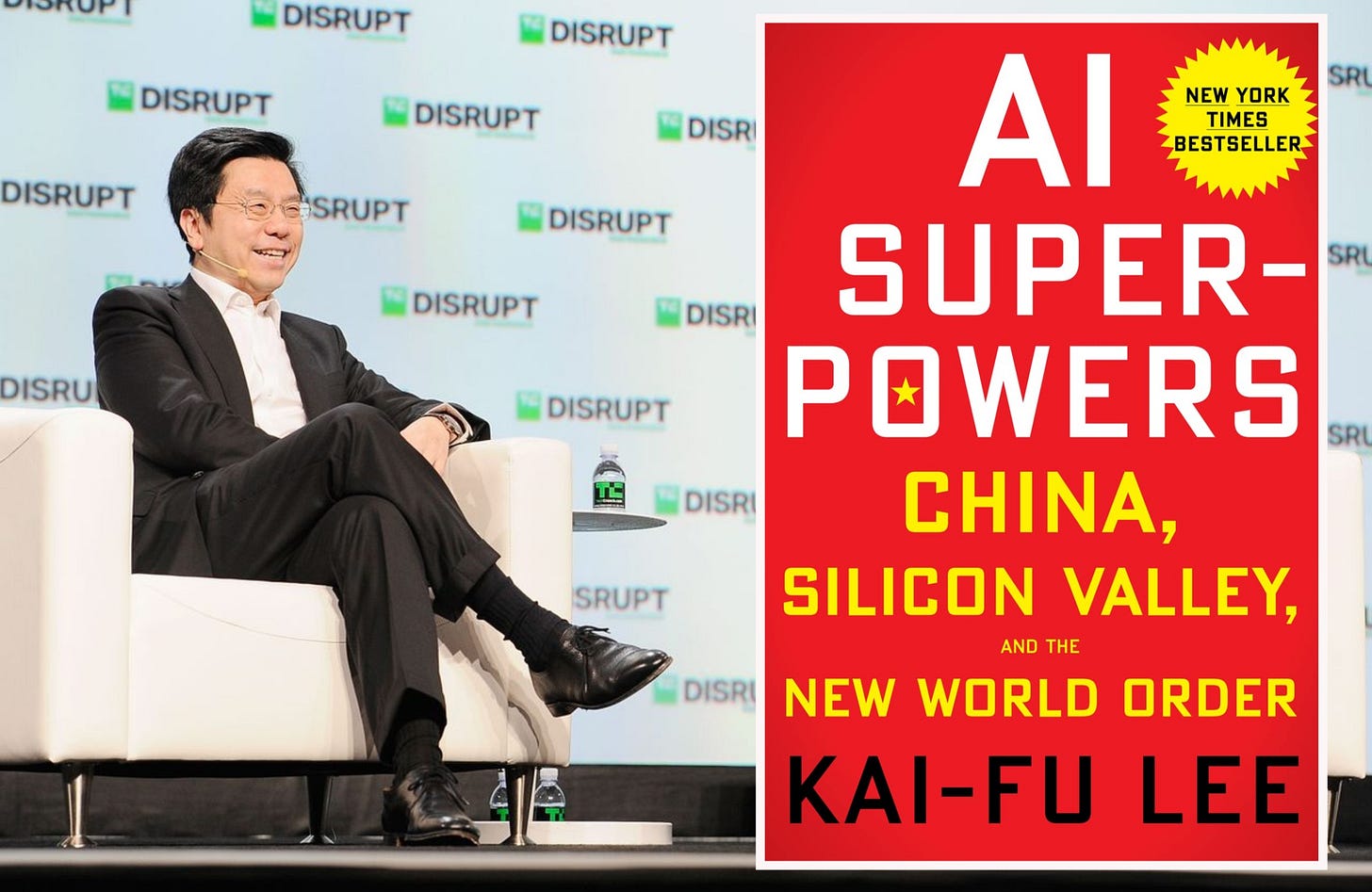

Book: AI Superpowers: China, Silicon Valley, and the New World Order

The book "AI Superpowers: China, Silicon Valley, and the New World Order" by Kai-Fu Lee was published in 2018. However, given the recent advances by Chinese companies in LLMs, it may be more relevant than ever.

The book compares AI development in China and the United States, offering insights into the strengths and strategies of both nations in the AI race.

Key topics covered are:

The differences in AI implementation between China and the US.

How China's data advantage and government support accelerate AI progress.

The potential economic and social impacts of AI, including job displacement.

Ethical considerations and responsible AI development.

Kai-Fu's unique perspective comes from his extensive experience in both the US and Chinese tech industries. He is a former executive at Google, Microsoft, and Apple. More recently, he co-founded 01.AI, a Chinese AI startup with the vision of making AGI accessible and beneficial to everyone.

My take: You should read this book if you're interested in the geopolitical implications of AI advancement. It offers a balanced view of the AI landscape while challenging Western assumptions about China's AI capabilities. Some of its predictions may have aged since its 2018 publication, but its core insights remain relevant for understanding the ongoing global AI competition. Kai-Fu's work at 01.AI shows his continued commitment to shaping an inclusive and positive AI future.

Disclaimer: This newsletter is written with the aid of AI. I use AI as an assistant to generate and optimize the text. However, the amount of AI used varies depending on the topic and the content. I always curate and edit the text myself to ensure quality and accuracy. The opinions and views expressed in this newsletter are my own and do not necessarily reflect those of the sources or the AI models.