🤝 Is AI breaking our shared reality?

Dear curious minds,

I am currently spending a lot of time tinkering with my own applications in Claude Code, and I really love it (I will likely cover it in an upcoming issue). At the same time, I am constantly reminded of the negative consequences looming on the horizon of the AI revolution. This week, I want to share my thoughts on how advanced AI video generation will lead to personalized, psychologically manipulative content, potentially breaking our shared reality.

This week, there were no major open source model releases or tools that caught my attention. Instead, there were a ton of interesting interviews with leading minds in the AI industry.

In this issue:

💡 Shared Insight

The End of Shared Reality: When AI Makes Videos Just for You

🌟 Media Recommendation

Podcast: "Become a Plumber" is Geoffrey Hinton's Job Survival Guide

Podcast: OpenAI Launches Official Podcast with Sam Altman Revealing GPT-5 Timeline And The Possibility of Ads in ChatGPT

Podcast: Sam Altman on Why Even 400 IQ AI Might Not Change Everything

Video: Andrej Karpathy on the Future of Programming

💡 Shared Insight

The End of Shared Reality: When AI Makes Videos Just for You

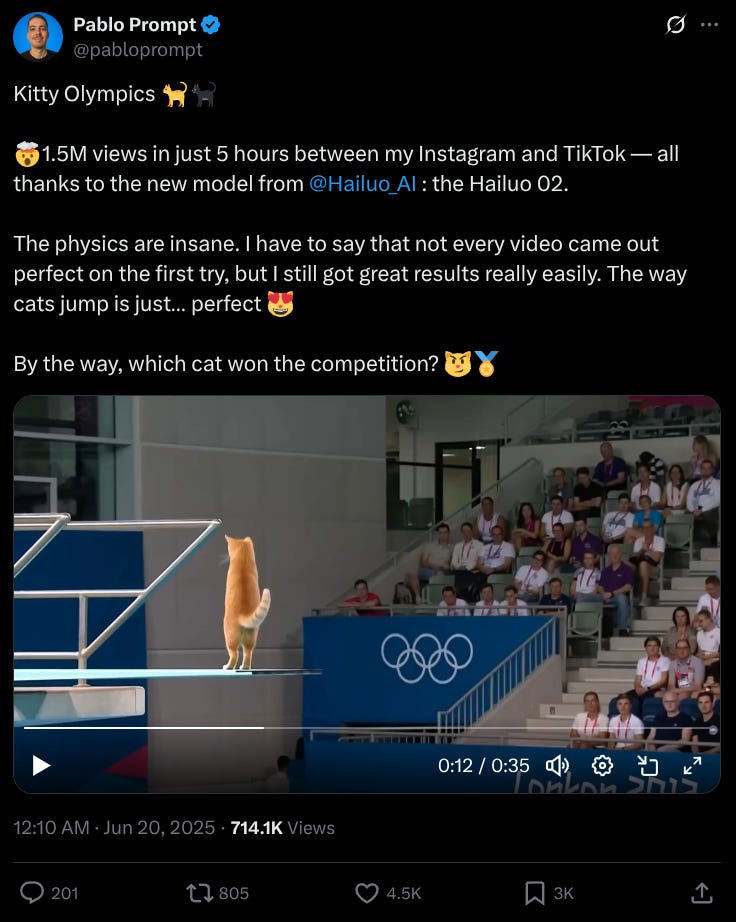

A fundamental shift is on the horizon in the world of entertainment, and most people haven't noticed yet. Over the past few weeks, AI video generation has taken a massive leap forward, with models like Google's Veo 3, Kling v2.1, Hailuo 02 and others reaching near-Hollywood quality at costs under a dollar per video.

These models generate videos from natural language descriptions. Think about it this way: until now, YouTube's and TikTok's algorithms were like the world's most obsessive librarian. They knew exactly which books (videos) you'd love from the millions on the shelves, and they got scary good at predicting your taste. But we are about to cross into something entirely different: an AI that does not just find the perfect content for you, but creates it specifically for you, designed to push every psychological button you have.

The difference is staggering. Current social media algorithms are sophisticated recommendation engines that try to serve you the most addictive content from what humans have already created. The new AI video systems are like having a Hollywood studio that knows your deepest fears, desires, and weaknesses, and can create an infinite number of perfectly tailored movies just for you. As former OpenAI employee Andrej Karpathy put it in an 𝕏 post:

"Ok, people are already addicted to TikTok so clearly it's pretty decent, but it's imo nowhere near what is possible in principle."

— Andrej Karpathy

This isn't science fiction; it's happening right now. TikTok has already rolled out AI video creation tools globally. Google has announced a tool that will let creators use its powerful video generation model Veo 3 to create YouTube Shorts. Major platforms are spending billions to make this the new normal, and the economics of the final stage are compelling: why pay human creators when AI can generate personalized content for pennies?

Here's where it gets truly frightening. We already know that social media algorithms exploit psychological vulnerabilities. These algorithms were originally designed to keep you scrolling through existing human-created content. But AI-generated content takes this manipulation to an entirely new level. Instead of finding the most addictive video someone else made, AI can create new videos specifically designed to hit your individual psychological triggers.

Imagine TikTok not just knowing you love cat videos, but generating an entirely new cat video every time you open the app. A video featuring your name, your pet's breed, addressing your specific emotional state detected from your recent activity, with just the right music and timing to make you feel a particular way. And then creating another one. And another. Forever.

We are essentially building a machine that studies human psychology at scale and weaponizes those insights against us. The scary part isn't that AI will create fake news (though it will). It's that AI will create perfectly optimized emotional manipulation disguised as entertainment. Content that's designed not to inform or even entertain in any meaningful way, but simply to capture and hold your attention for as long as possible.

The timeline for this transformation? It's not years or decades. It's months. By the end of 2025, AI video generation will likely be as common as photo filters are today. The technology is ready, the economic incentives are there, and the platforms want to keep you glued to your screen.

Maybe the most unsettling part is how this could fragment our shared reality. When everyone receives personalized, AI-generated content optimized for their individual psychology, we stop experiencing the same cultural moments together. We lose the common ground of shared media experiences. Instead, we each live in our own custom-built reality bubble, designed not by our choices, but by algorithms that know exactly how to manipulate us.

The future of entertainment might not be about creating better stories or more meaningful content. It might be about creating the most psychologically manipulative content possible, perfectly tailored to each individual's vulnerabilities. The question isn't whether this technology is coming; it is already here. The question is whether we'll recognize what we're giving up before it is too late to choose a different path.

🌟 Media Recommendation

Podcast: "Become a Plumber" is Geoffrey Hinton's Job Survival Guide

Geoffrey Hinton, the "Godfather of AI" and 2018 Turing Award winner, was recently interviewed on "The Diary of a CEO" podcast. After leaving Google in 2023 to speak freely about AI dangers, Hinton shares his warnings about humanity's future with AI.

Why he earned the "Godfather of AI" title: Hinton was one of the few researchers who believed artificial neural networks could work, pushing the brain-modeling approach for 50 years while most experts focused on logic-based AI. His persistence attracted top students who later founded companies like OpenAI, making him the intellectual father of today's AI revolution.

The job displacement crisis is already here: Hinton's niece now handles five times as many customer complaints using AI chatbots, essentially doing the work of five people. Unlike previous technological revolutions that created new jobs, AI may simply require fewer people across most sectors. His advice? If his kids didn't have money, he'd recommend they become plumbers.

AI scams are just the beginning: Host and guest both discuss being targeted by AI-generated scams that used their voices and images to promote crypto schemes. Despite takedown efforts, new scams keep appearing, showing how AI makes sophisticated fraud accessible to anyone with basic technical skills.

The biological weapons threat is real: AI can now help design dangerous viruses without requiring molecular biology expertise. A small cult with a few million dollars could potentially create multiple harmful pathogens, dramatically lowering the barrier for bioterrorism.

Universal Basic Income (UBI) won't solve everything: Even with UBI, Hinton warns that joblessness threatens human happiness because people need purpose and the feeling of contributing. Work provides more than income; it gives meaning and social connection that money alone can't replace.

My take: I loved listening to this podcast episode as it made me think about the implications of the ongoing AI developments. But the most important lesson was shared by Hinton at the end: Spend more time with your loved ones!

Podcast: OpenAI Launches Official Podcast with Sam Altman Revealing GPT-5 Timeline And The Possibility of Ads in ChatGPT

OpenAI has officially entered the podcasting world with the launch of "The OpenAI Podcast". The first episode is a 40-minute conversation in which CEO Sam Altman is interviewed by Andrew Mayne, OpenAI's Science Communicator since 2021.

In the episode, Sam Altman shared a rough release window for GPT-5 for the first time, stating it will "probably" arrive "sometime this summer". This is the most concrete timeline we've heard from OpenAI about their next major model release.

The conversation covers fascinating ground beyond GPT-5. Altman discusses topics ranging from Artificial General Intelligence (AGI) and Project Stargate to new research workflows, and even AI-powered parenting, revealing that as a new parent he personally relies on ChatGPT for childcare advice.

His remarks on privacy concerns, the ongoing New York Times lawsuit, and even the possibility of ads in ChatGPT offer a rare glimpse into the strategic thinking of a company that is reshaping how we interact with technology.

My take: Please no ads. I understand the economic pressure, as creating and running the models is very cost intensive. But once ads enter the equation, users will lose trust in the application, because they can no longer be sure whether a response is really what they are looking for or just steers them toward the solution that earns OpenAI the biggest profit.

Podcast: Sam Altman on Why Even 400 IQ AI Might Not Change Everything

Sam Altman was recently interviewed by his brother Jack Altman on Jack's podcast "Uncapped". The conversation covered a lot, from autonomous scientific discovery to why humanoid robots will shock us more than ChatGPT ever did.

AI scientists are closer than expected: Altman believes we've already "cracked reasoning" in AI models, enabling them to perform at PhD level in specific domains. More importantly, he predicts AI will autonomously discover new physics within 5–10 years, moving beyond just assisting researchers to making independent scientific breakthroughs.

The superintelligence paradox: Despite feeling more confident than ever about building "incredible AI systems," Altman worries that even superintelligence might not dramatically change daily life. He notes that ChatGPT's widespread adoption hasn't fundamentally altered most people's routines, suggesting even a 400 IQ system might exist without revolutionizing society if focused on specialized tasks.

Humanoid robots: While we've adapted to ChatGPT, Altman predicts physical robots will feel like "a new species taking over." Unlike digital assistants, embodied AI will create visceral reactions that are impossible to ignore.

AI business automation is happening now: People are already using AI to run entire small businesses autonomously. They handle market research, product development, manufacturing coordination, and sales with minimal human input.

Humans still need humans: Despite AI's advancing capabilities, Altman emphasizes that people are wired to care about human stories and decision-making processes, suggesting the human element in content and relationships remains irreplaceable.

My take: I have said it multiple times, but I couldn't agree more: Success in the AI era will depend on learning to work alongside AI systems while maintaining the human elements that people actually value.

Video: Andrej Karpathy on the Future of Programming

In this compelling keynote from Y Combinator’s AI Startup School event in San Francisco, Andrej Karpathy presents a fascinating framework for understanding how artificial intelligence is fundamentally reshaping software development. Drawing from his extensive experience at Tesla, OpenAI, and Stanford, Karpathy argues we're witnessing the birth of "Software 3.0", a new era where natural language becomes our primary programming interface.

Karpathy breaks down software evolution into three distinct eras:

Software 1.0: Traditional code we write for computers

Software 2.0: Neural networks and their trained weights

Software 3.0: Large language models programmed in English
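To make the three eras more tangible, here is a toy sentiment classifier written three ways. This is my own illustrative sketch in the spirit of the talk, not code from Karpathy: the word lists, the tiny perceptron, the training examples and the prompt wording are all made-up assumptions. The Software 3.0 part only builds the English prompt, since actually sending it to a model would require an LLM API that is deliberately left out here.

```python
from collections import Counter

# Software 1.0: a human writes the rules explicitly (hypothetical word lists).
POSITIVE = {"great", "love", "excellent"}
NEGATIVE = {"bad", "hate", "terrible"}

def sentiment_v1(text: str) -> str:
    words = text.lower().split()
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    return "positive" if score >= 0 else "negative"  # ties count as positive

# Software 2.0: the "program" is a set of weights learned from labelled data.
# Toy training set, invented for illustration only.
EXAMPLES = [
    ("I love this", "positive"),
    ("great product", "positive"),
    ("I hate this", "negative"),
    ("terrible product", "negative"),
]

def train_perceptron(examples: list[tuple[str, str]], epochs: int = 10) -> Counter:
    weights: Counter = Counter()  # unseen words have weight 0
    for _ in range(epochs):
        for text, label in examples:
            words = text.lower().split()
            target = 1 if label == "positive" else -1
            prediction = 1 if sum(weights[w] for w in words) >= 0 else -1
            if prediction != target:  # classic perceptron update on mistakes
                for w in words:
                    weights[w] += target
    return weights

def sentiment_v2(weights: Counter, text: str) -> str:
    activation = sum(weights[w] for w in text.lower().split())
    return "positive" if activation >= 0 else "negative"

# Software 3.0: the "program" is an English instruction for an LLM.
def sentiment_v3_prompt(text: str) -> str:
    return (
        "Classify the sentiment of the following review as 'positive' or "
        f"'negative'. Answer with one word.\n\nReview: {text}"
    )

if __name__ == "__main__":
    learned = train_perceptron(EXAMPLES)
    print(sentiment_v1("I love it"), sentiment_v2(learned, "I love it"))
    print(sentiment_v3_prompt("I love it"))
```

The logic stays the same across all three versions; what changes is who writes the "program": a developer writing rules, an optimizer adjusting weights from examples, or a person describing the task in plain English.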

What makes this transition remarkable is that for the first time in computing history, we're programming machines using our natural language. This isn't just a new tool; it's an entirely new kind of computer that requires us to rethink how we build and interact with software.

Looking forward, Karpathy envisions a world where we need to design digital infrastructure not just for humans and traditional programs, but for AI agents. This means creating AI-readable documentation, developing new protocols for agent communication, and rethinking how software interfaces should work.

My take: For anyone working with or thinking about AI, this talk provides essential context for understanding where we are and where we're heading. Karpathy provides a roadmap for thinking about software in the age of AI.

Disclaimer: This newsletter is written with the aid of AI. I use AI as an assistant to generate and optimize the text. However, the amount of AI used varies depending on the topic and the content. I always curate and edit the text myself to ensure quality and accuracy. The opinions and views expressed in this newsletter are my own and do not necessarily reflect those of the sources or the AI models.