🤝 Stop Guessing: This Tool Reveals the Best AI for YOU

Dear curious minds,

Last week, the AI landscape was transformed by groundbreaking releases from OpenAI and Google, moving the field forward at an unbelievable pace. This issue is dedicated to bringing you the latest developments, equipping you with the insights needed to leverage these new tools effectively. Stay ahead of the curve: use GPT-4 and Gemini Ultra side-by-side to identify which model solves your specific challenges and accelerates your success.

In this week's issue, I bring to you the following topics:

ChatGPT Gets a Personalized Memory

OpenAI Sora: Generate Stunning One-Minute Videos

Use GPT-4 and Gemini Ultra Side-by-Side

Gemini 1.5 Pro: Stunning Long Input Performance

Tech Term: Mixture-of-Experts (MoE)

If nothing sparks your interest, feel free to move on. Otherwise, let us dive in!

🧠✨ ChatGPT Gets a Personalized Memory

In a blog article, OpenAI announced a new memory feature that enables ChatGPT to remember and use information from past conversations. This feature is designed to make future interactions more efficient and personalized, eliminating the need for users to repeat themselves.

Users have full control over the memory feature. They can command ChatGPT to remember or forget specific information, and can toggle the memory function on or off through the settings. This ensures users can manage their privacy and the level of personalization they prefer.

The memory feature is currently being introduced to a select group of ChatGPT free and Plus users. OpenAI plans to gather feedback from this initial phase to inform a broader rollout in the near future.

If already rolled out to your account, you can activate and manage the memory feature in the personalization tab of the ChatGPT settings. [source]

ChatGPT's memory is designed to improve with usage. Over time, it can learn preferences and details relevant to the user's needs, such as formatting preferences for documents or personal details that can result in more relevant responses.

For conversations that users prefer not to be remembered, a temporary chat feature is available. This ensures that certain interactions can remain completely private and not influence future responses.

Instead of the global switch, history and memory can be deactivated for single chats. [source]

OpenAI emphasizes that user data, including memories, may be used to improve model performance. Data from ChatGPT Team and Enterprise customers will not be used for training purposes.

The memory feature will not be limited to ChatGPT alone but will extend to custom GPTs. Each GPT will have its own distinct memory per user, enabling specialized applications like book recommendations to provide more tailored experiences based on past interactions.

My take: The memory feature is especially beneficial for Enterprise and Team users, allowing ChatGPT to remember work-related preferences and details. OpenAI presents this feature as groundbreaking, but since August 2023 you could already provide this kind of information in your custom instructions. However, adding information automatically or on request during chats simplifies the process and makes it more user-friendly. And as we learned from the initial release of ChatGPT, a seamless experience drives huge user adoption.

🎥✨ OpenAI Sora: Generate Stunning One-Minute Videos

The state of the art in AI video generation was covered in past issues, and one thing all tools so far have in common is that the generated output is only a few seconds long.

Sora is a new approach from OpenAI that can make videos up to a minute long from your text prompts. It can generate detailed scenes, camera motions and multiple characters.

Besides the product page, which shows stunning result videos, even more insights are shared in the technical report.

The resulting videos are wonderful, but not perfect. Sora might mix up some details or fail to get the physics right.

The video below shows a commented summary of the Sora release by Matt Wolfe. If you're interested in broader AI news beyond this newsletter, he's a great resource to follow.

Nvidia researcher Jim Fan shared on 𝕏 that he thinks Sora was trained with a lot of synthetic data generated with a PC game engine. By compressing this knowledge in an AI model, it is likely that Sora will replace hand-engineered graphic pipelines in the future.

Currently, Sora is checked by red team experts for possible harms and risks. Furthermore, selected artists and filmmakers were granted access. There is currently no information about the public release.

My take: Sora isn't just about making videos. It's about understanding our world. This AI learned how things move and interact, making its creations super realistic. This is another step in the direction of an Artificial General Intelligence (AGI). Impressive and scary at the same time!

🤖⚔️ Use GPT-4 and Gemini Ultra Side-by-Side

After my first negative impression shared in last week’s issue (still worth a read for a general introduction to the Gemini launch), I realized that Gemini Ultra works better for me than I initially thought. However, you should evaluate for yourself which model is best for your day-to-day use.

Besides my own experiments and judgement, there are various sources which state that the high-end models from OpenAI and Google are quite close. To name two:

A comparison shared on Reddit by u/lordpermaximum

Article “Gemini Ultra is out. Does it beat GPT4?” by Ben Sheffield

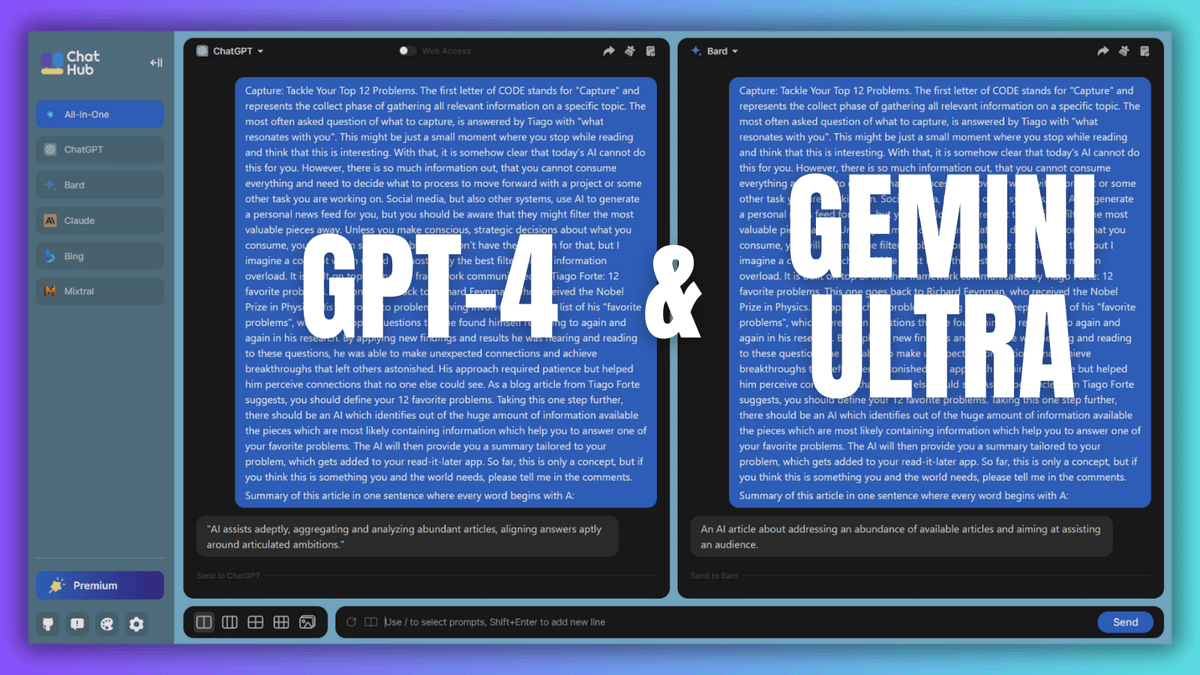

The browser extension ChatHub [affiliate link – use it to support my work if you go paid] lets you use GPT-4 (paid ChatGPT subscription, Poe or OpenAI API required) and Gemini Ultra (Google One AI Premium trial available) simultaneously.

ChatHub uses your session cookies to utilize the official web apps from OpenAI and Google in the background.

A free side-by-side view (up to six models in the paid version of ChatHub) allows you to identify which model suits your needs best. There is no need to copy and paste prompts between the web UIs of ChatGPT and Gemini.

The user interface of ChatHub still states that Bard is used, and there is no option to choose between different models for Gemini in the settings. I verified that ChatHub really used Gemini Ultra (and GPT-4) with a prompt that fails for GPT-3.5 and Gemini Pro. If you are logged in to multiple Google accounts, it might help to log out from all accounts except the one where you activated the Google One AI Premium trial. Furthermore, make sure that you select Gemini Advanced in the Gemini web app.

Capture: Tackle Your Top 12 Problems. The first letter of CODE stands for "Capture" and represents the collect phase of gathering all relevant information on a specific topic. The most often asked question of what to capture, is answered by Tiago with "what resonates with you". This might be just a small moment where you stop while reading and think that this is interesting. With that, it is somehow clear that today’s AI cannot do this for you. However, there is so much information out, that you cannot consume everything and need to decide what to process to move forward with a project or some other task you are working on. Social media, but also other systems, use AI to generate a personal news feed for you, but you should be aware that they might filter the most valuable pieces away. Unless you make conscious, strategic decisions about what you consume, you will live in some filter bubble. I don’t have the solution for that, but I imagine a concept which would be most likely the best filter for the information overload. It is built on top of another framework communicated by Tiago Forte: 12 favorite problems. This one goes back to Richard Feynman, who received the Nobel Prize in Physics. His approach to problem-solving involved keeping a list of his "favorite problems", which were open questions that he found himself returning to again and again in his research. By applying new findings and results he was hearing and reading to these questions, he was able to make unexpected connections and achieve breakthroughs that left others astonished. His approach required patience but helped him perceive connections that no one else could see. As a blog article from Tiago Forte suggests, you should define your 12 favorite problems. Taking this one step further, there should be an AI which identifies out of the huge amount of information available the pieces which are most likely containing information which help you to answer one of your favorite problems. 
The AI will then provide you a summary tailored to your problem, which gets added to your read-it-later app. So far, this is only a concept, but if you think this is something you and the world needs, please tell me in the comments.

Summary of this article in one sentence where every word begins with A:

My take: Upgrade your AI experience today! Install ChatHub [affiliate link – use it to support my work if you go paid] and compare side-by-side which model performs best on your tasks. I encountered many requests where Gemini Ultra clearly outperformed GPT-4.

Two other loosely connected thoughts: Google owns YouTube, which is likely the best data source for training future models, as a huge amount of new high-quality multi-modal (video, image, audio, text) content is uploaded daily. Furthermore, many people use Google services like Gmail for email and Drive for documents intensively. Giving the Gemini models exclusive access to your emails and documents will be a game changer. In conclusion, and contrary to what I said last week, I believe that Google will soon be the home of the most popular generative AI models.

📚🚀 Gemini 1.5 Pro: Stunning Long Input Performance

Only one week after the release of Gemini Ultra, Google announced Gemini 1.5 Pro.

Besides the launch article linked above, Google released a 58-page-long report describing and evaluating the new model.

Gemini 1.5 Pro matches the performance of Gemini 1.0 Ultra (their best model yet) while needing less computing power.

The new model outperforms its earlier versions in many benchmarks, demonstrating enhanced reasoning across texts, code, images, and even video.

It is stated that Gemini 1.5 Pro uses a new Mixture-of-Experts (explained below in a separate section) architecture, which is also rumored to be used by GPT-4 from OpenAI.

Its ability to process huge inputs (context window) has been massively boosted: Google reports successful tests with up to 10 million tokens. This means it can analyze things like entire books, complex code, or hour-long videos to provide better responses.

Comparison of context window size of various models. [source]

A long context window alone is worth little if retrieval from it is unreliable. Anthropic shared in a blog post that Claude, so far the best model for long-form inputs, retrieves information more reliably when it sits at the end of the context window.

The retrieval of information from a large context window is stated to be close to perfect. [source]

Google emphasizes ongoing "red-teaming" to test and improve safety and to mitigate potential ethical risks of the model. So far, Gemini 1.5 Pro is only available in limited preview for selected developers and enterprise customers. Signing up as an early tester for Gemini 1.5 Pro takes less than a minute in Google AI Studio.

Google plans to release Gemini 1.5 Pro initially with a context window of only 128k tokens and to scale this up to 1M tokens with different pricing tiers as they improve the model.
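To get a feeling for what these numbers mean in practice, here is a rough back-of-the-envelope sketch. It assumes about four tokens per three English words, which is only a common rule of thumb and not the behavior of Gemini's actual tokenizer. The tier names, the helper functions and the example word counts are made up for illustration.

```python
# Context window sizes in tokens, as quoted in this section.
CONTEXT_WINDOWS = {
    "128k (initial release)": 128_000,
    "1M (planned tier)": 1_000_000,
    "10M (upper figure quoted above)": 10_000_000,
}


def estimate_tokens(word_count):
    """Estimate tokens from a word count.

    ASSUMPTION: roughly 4 tokens per 3 English words. Real tokenizers
    differ by model and language, so treat the result as a ballpark.
    """
    # Integer ceiling of word_count * 4 / 3, avoiding float rounding.
    return (word_count * 4 + 2) // 3


def fits(word_count, window_tokens):
    """Return True if the estimated token count fits into the window."""
    return estimate_tokens(word_count) <= window_tokens


# Hypothetical inputs: one novel and a small library of ten such novels.
examples = {"one novel (90,000 words)": 90_000, "ten novels (900,000 words)": 900_000}

for label, words in examples.items():
    tokens = estimate_tokens(words)
    for tier, size in CONTEXT_WINDOWS.items():
        verdict = "fits" if fits(words, size) else "too large"
        print(f"{label}: ~{tokens:,} tokens -> {tier}: {verdict}")
```

Under this assumption, a single novel would just fit into the 128k window, while a stack of ten novels would need more than the 1M tier. Images, audio and video are tokenized differently, so this estimate only applies to plain text.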

My take: I loved the huge context windows from Claude, but 10M tokens are a totally different game. You can use multiple long documents or books as input and let the model identify inconsistencies or other relations in them. However, the question is how reliably the model can extract the needed information from these large inputs. The results published in the report look promising, but they need to be verified.

💻🔍 Tech Term: Mixture-of-Experts (MoE)

Many of today’s state-of-the-art LLMs (Mixtral 8x7B, Gemini 1.5 Pro and, reportedly, GPT-4) internally use a so-called Mixture-of-Experts (MoE) architecture.

A Mixture-of-Experts is a machine learning model architecture that involves a collection of individual models, each referred to as an "expert." Each expert is trained to handle specific types of tasks or data within the larger problem space. Here's how it generally works:

Division of Labor: The Mixture-of-Experts model divides a complex problem into simpler, more manageable sub-tasks. Each expert specializes in one aspect of the problem.

Gating Network: A separate component, known as the "gating network," determines which expert should be applied to a given input. The gating network learns to decide which experts are most suitable for the current task.

Combining Outputs: The outputs of the experts are combined, often in a weighted manner as determined by the gating network, to produce the final output of the model.
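The three steps above can be sketched in a few lines of Python. This is a toy illustration with random, untrained weights, not how any of the named models is actually implemented. The class `ToyMoE`, its parameters and the input vector are hypothetical names chosen for this example. Picking 2 out of 8 experts mirrors the setup published for Mixtral 8x7B; the other values are arbitrary.

```python
import math
import random


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equally long vectors."""
    return sum(x * y for x, y in zip(a, b))


class ToyMoE:
    """Toy Mixture-of-Experts layer (hypothetical, weights are random)."""

    def __init__(self, dim, num_experts, top_k, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        # Gating network: one scoring vector per expert.
        self.gate = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_experts)]
        # Division of labor: each expert is its own small linear map.
        self.experts = [
            [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(dim)]
            for _ in range(num_experts)
        ]

    def route(self, x):
        """Return (expert_index, weight) pairs for the top_k best-scoring experts."""
        scores = [dot(g, x) for g in self.gate]
        ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
        chosen = ranked[: self.top_k]
        weights = softmax([scores[i] for i in chosen])
        return list(zip(chosen, weights))

    def forward(self, x):
        """Run only the selected experts and combine their weighted outputs."""
        output = [0.0] * len(x)
        for idx, weight in self.route(x):
            expert_out = [dot(row, x) for row in self.experts[idx]]
            output = [o + weight * e for o, e in zip(output, expert_out)]
        return output


moe = ToyMoE(dim=4, num_experts=8, top_k=2)
token = [0.5, -1.0, 0.25, 2.0]  # made-up input vector
routing = moe.route(token)
result = moe.forward(token)
print("selected experts:", [i for i, _ in routing])
print("gate weights:", [round(w, 3) for _, w in routing])
print("output:", [round(v, 3) for v in result])
```

Note that only two of the eight experts do any work for a given input. This is where the efficiency comes from: the model can hold many parameters in total while spending compute on just a fraction of them per token.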

The key advantage of a Mixture-of-Experts is its ability to handle diverse and complex problems by leveraging the specialization of individual experts, leading to more accurate and at the same time more efficient problem-solving.

Disclaimer: This newsletter is written with the aid of AI. I use AI as an assistant to generate and optimize the text. However, the amount of AI used varies depending on the topic and the content. I always curate and edit the text myself to ensure quality and accuracy. The opinions and views expressed in this newsletter are my own and do not necessarily reflect those of the sources or the AI models.