🤝 The future of AI is open: Two new models challenge OpenAI

Dear curious minds,

The landscape of AI is rapidly evolving, and this week brings exciting news that could reshape the future of AI as we know it. Two groundbreaking open-source models have emerged, presenting formidable challenges to the dominance of closed systems like ChatGPT from OpenAI and Claude from Anthropic.

In this issue:

💡 Shared Insight

Why Open-Source AI Matters for Individuals

📰 AI Update

Llama 3.1: Meta's 405B Model Challenges AI Giants

Mistral Large 2: Mistral AI’s Powerful New 123B Model

🌟 Media Recommendation

Video: Mark Zuckerberg on Llama 3.1, Open-Source AI, and the Future

💡 Shared Insight

Why Open-Source AI Matters for Individuals

Before diving into the personal implications of open-source AI, I strongly encourage you to read Mark Zuckerberg's recent article “Open Source AI Is the Path Forward”, which was published alongside the release of the open model family Llama 3.1. In the article, he provides a clear explanation of why open-source AI is crucial for developers, companies, and even nations. Mark discusses how open models promote innovation, ensure data privacy, and will lead to safer AI development. He also explains Meta's commitment to open-source AI and how it aligns with their business model.

It's crucial to recognize that these advantages extend to individuals as well. As AI increasingly integrates into our daily lives, open models become a cornerstone of personal empowerment and privacy protection.

For individuals, open-source AI models like Llama 3.1 offer several key benefits:

Privacy Protection: Just as organizations need to safeguard sensitive data, individuals have personal information they'd rather not share with large tech companies. Open models allow you to run AI locally, ensuring your private conversations, documents, and queries remain truly private.

Customization and Learning: Open models provide a unique opportunity for tech enthusiasts and learners to dive deep into AI technology. You can fine-tune models for personal projects, gaining hands-on experience with cutting-edge AI without the high price tag of cloud-based services.

Long-term Reliability: As Mark points out, depending on closed models means being at the mercy of their providers. For individuals, this could mean losing access to AI tools you've integrated into your workflow or personal projects. Open models ensure you always keep access to the technology you have come to rely on.

As we move forward in the AI era, it's crucial that individuals, not just organizations, have the tools to make use of this technology safely and effectively. Open-source models like Llama 3.1 are a significant step towards ensuring that the benefits of AI are accessible to all.

📰 AI Update

Llama 3.1: Meta's 405B Model Challenges AI Giants

On 07/23/2024, Meta unveiled the Llama 3.1 family, marking a significant milestone in open-source AI. The star of this release is the Llama 3.1 405B model, which Meta claims is the world's most capable openly available foundation model.

The published benchmark results show performance that is often ahead of the best models so far, GPT-4o from OpenAI and Claude 3.5 Sonnet from Anthropic. However, the most trustworthy evaluation is probably the one done by humans, and a first look shows results on par with these models, as shown in the following image.

Besides its first 405-billion-parameter model, Meta also released updated versions of the 8B and 70B models, which can be used in the following eight languages: English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai. This is an especially big deal for the 8B version, which previously only supported English.

Furthermore, the context size was increased from 8k to 128k tokens for all models.

Meta has updated their license, now allowing full commercial use of the Llama 3.1 models. This means that you can not only use these models in commercial projects, but also leverage outputs from Llama models to improve other models.

Along with the models themselves, Meta published an extensive 92-page research paper that shares many details about how the Llama 3.1 models were trained.

Meta has partnered with over 25 companies across the cloud, hardware, AI/ML, and enterprise sectors to support the release of Llama 3.1. These partnerships aim to make the models more accessible, optimize infrastructure, enable advanced workflows, and accelerate adoption of the open-source Llama ecosystem. This collaboration helps address challenges of working with large models and broadens access to Llama 3.1's capabilities for developers and organizations.

You can test the models on Meta.ai or WhatsApp if you live in one of the currently 22 supported countries (side note: a VPN no longer works, because the region of the Facebook or Instagram account you log in with is checked):

Argentina

Australia

Cameroon

Canada

Chile

Colombia

Ecuador

Ghana

India

Jamaica

Malawi

Mexico

New Zealand

Nigeria

Pakistan

Peru

Singapore

South Africa

Uganda

United States

Zambia

Zimbabwe

As the models are open, many providers have started to serve them, including Groq, which is known for its superfast model execution. However, the requirements of the 405B model are so high that no free service runs this version except the aforementioned Meta.ai.

If you want to run the Llama 3.1 models on your own hardware, you can use Ollama. But be aware that the 405B version requires 231GB of memory, so on typical hardware you can likely only run the smaller versions.
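To get a feeling for why the 405B model is out of reach for most home setups, here is a rough back-of-the-envelope sketch. It assumes that the memory for the weights is roughly the number of parameters times the bits per weight, and it ignores the extra memory needed for the context and the runtime. The function name and the 4-bit and 16-bit settings are my own illustrative assumptions, not numbers published by Meta or Ollama.

```python
def estimate_weight_memory_gb(num_parameters: float, bits_per_weight: float) -> float:
    """Rough size of the model weights in GB (1 GB = 1e9 bytes).

    Assumption: memory ~ parameters * bits per weight / 8.
    Ignores the cache for the context and any runtime overhead,
    so real requirements are higher.
    """
    bytes_total = num_parameters * bits_per_weight / 8
    return bytes_total / 1e9


if __name__ == "__main__":
    # Parameter counts from the Llama 3.1 release; bit widths are illustrative.
    for name, params in [("8B", 8e9), ("70B", 70e9), ("405B", 405e9)]:
        for bits in (4, 16):
            size = estimate_weight_memory_gb(params, bits)
            print(f"Llama 3.1 {name} at {bits}-bit: ~{size:.1f} GB")
```

Even at an assumed 4 bits per weight, the 405B weights alone come out at roughly 200 GB, which is in the same ballpark as the 231GB mentioned above, while the 8B model lands at about 4 GB.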

My take: This release is a game-changer for the open-source AI community. The combination of a frontier-level model and a commercial-friendly license is truly exciting. While the raw power of Llama 3.1 405B is impressive, I believe the real innovation will come from what developers build on top of it. The ability to freely fine-tune and adapt this model for specialized tasks could unlock a new wave of AI applications across various industries. We might see highly specialized AI assistants emerging in fields like medicine, law, or engineering.

Mistral Large 2: Mistral AI’s Powerful New 123B Model

On 07/24/2024, the French start-up Mistral AI released its latest flagship model, Mistral Large 2, marking a significant leap forward in AI capabilities. This new model boasts impressive improvements across the board, from code generation and mathematics to reasoning and multilingual support.

Key highlights of Mistral Large 2 include:

A 128k context window, allowing for processing of long inputs

Support for dozens of languages, including French, German, Spanish, Italian, and many more

Works with over 80 coding languages, such as Python, Java, C++, and JavaScript

Enhanced instruction-following and conversational abilities

Improved function calling and retrieval skills

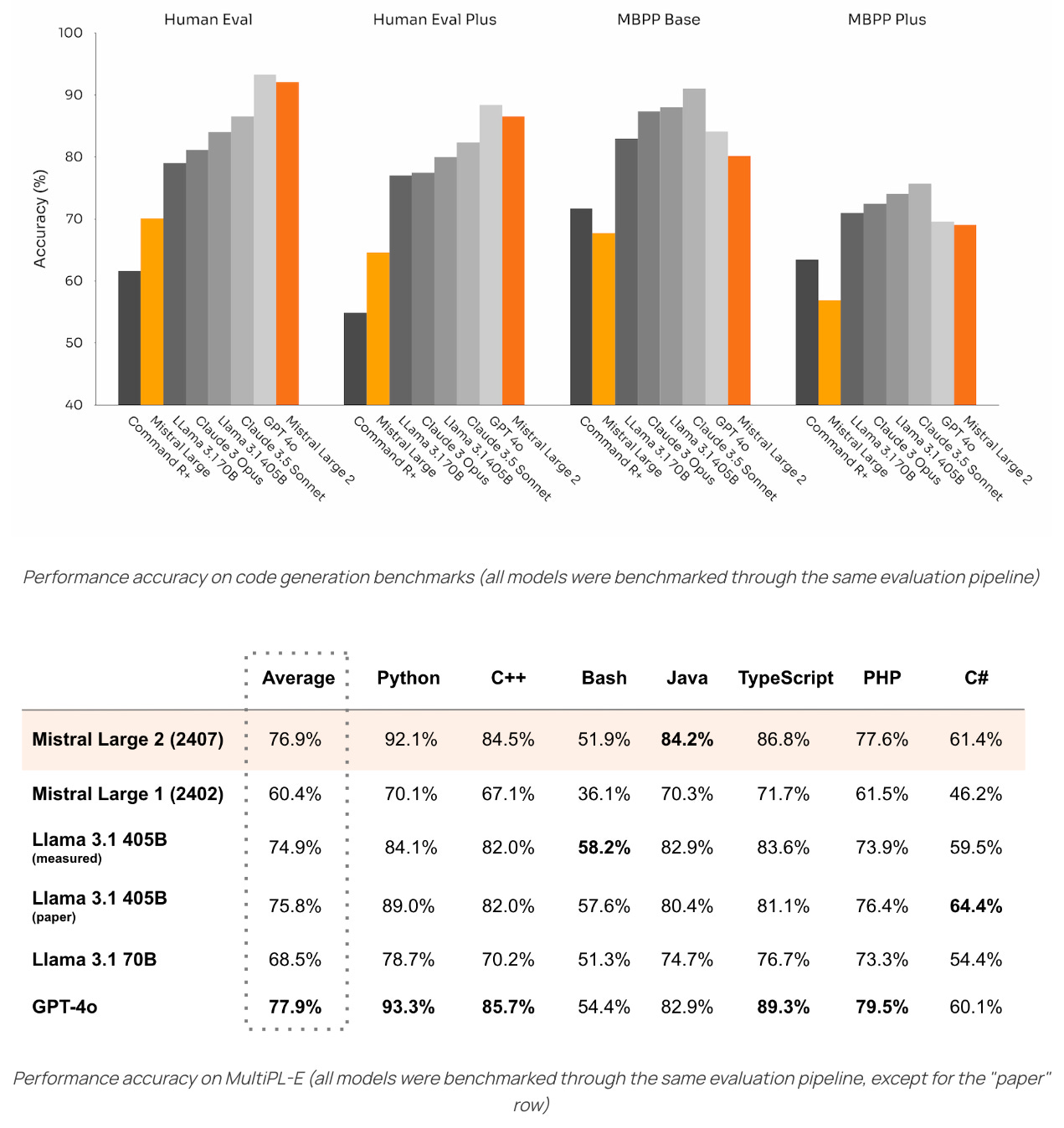

Mistral shared a comparison of the coding capabilities of Mistral Large 2. Strangely, Claude 3.5 Sonnet, currently the best model at coding, appears only in the upper part of the image below, which shows results for various Python benchmarks.

Mistral AI states that the base model achieves an impressive 84.0% accuracy on the MMLU (Massive Multitask Language Understanding) benchmark. However, the base model is not released, and it is not stated whether few-shot or chain-of-thought evaluation was used. To add to the confusion, the image Mistral AI shares shows a comparison to the Llama 3.1 models on the Multilingual MMLU, with a score of ~80.0% for Mistral Large 2.

In code generation and mathematical reasoning, Mistral Large 2 performs on par with industry leaders like GPT-4, Claude 3 Opus, and Llama 3.1 405B. This is particularly impressive given Mistral AI's focus on efficiency and cost-effectiveness, with a size of 123B parameters for the Mistral Large 2 model.

The model is now available on Mistral's "la Plateforme" and “le Chat” under the name mistral-large-2407. For researchers and non-commercial users, it is released under the Mistral Research License, while commercial usage requires reaching out to Mistral AI. You can get the model weights on HuggingFace or download and run the model very easily in Ollama.
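If you go the Ollama route, a minimal sketch of talking to the model from Python could look like this. It assumes that Ollama is running locally on its default port and that you pulled the model under the tag `mistral-large`; both the tag and the helper names `build_request` and `ask` are my own assumptions for illustration, so check `ollama list` for the tag on your machine.

```python
import json
import urllib.request

# Assumptions: Ollama is running locally on its default port, and the model
# was pulled under the tag "mistral-large" (check `ollama list` for your tag).
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL_TAG = "mistral-large"


def build_request(prompt: str, model: str = MODEL_TAG) -> urllib.request.Request:
    """Build a non-streaming generate request for the local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = MODEL_TAG) -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model)) as response:
        return json.loads(response.read())["response"]
```

With the server running, `print(ask("Summarize this newsletter in one sentence."))` would print the model's answer, and the prompt never leaves your machine.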

My take: Mistral AI continues to push the boundaries of what's possible with open-source AI models. With its combination of strong performance, multilingual capabilities, and a size that fits high-end consumer machines, Mistral Large 2 is a nice release for both researchers and private users. The presented results feel a bit cherry-picked, especially as the release took place one day after the Llama 3.1 models. Time will tell if the model is as good as Mistral AI tells us.

🌟 Media Recommendation

Video: Mark Zuckerberg on Llama 3.1, Open-Source AI, and the Future

Mark Zuckerberg, CEO of Meta, shared insights on the release of Llama 3.1 and Meta's vision for open-source AI in a recent YouTube interview hosted by Rowan Cheung. As the leader of one of the world's largest tech companies, Mark offers a unique perspective on the future of AI development and its potential impact on society.

Mark believes open-source AI will become the industry standard, similar to Linux's dominance in operating systems.

Open-source AI is expected to accelerate innovation and economic growth by democratizing access to advanced AI capabilities.

Meta is focusing on building partnerships and an ecosystem around Llama, enabling customization and fine-tuning.

Meta aims to enable the creation of millions of AI agents for businesses, creators, and individuals, rather than focusing on a single, centralized AI.

My take: This interview provides valuable insights into Meta's strategy for AI development and its potential impact on the tech industry. Mark's commitment to open-source AI and his vision for a decentralized AI ecosystem could significantly shape the future of AI development and usage. The full interview offers a comprehensive look at Meta's AI strategy and Mark's thoughts on the future of AI, making it a must-watch for anyone interested in the direction of AI technology and its societal implications.

Disclaimer: This newsletter is written with the aid of AI. I use AI as an assistant to generate and optimize the text. However, the amount of AI used varies depending on the topic and the content. I always curate and edit the text myself to ensure quality and accuracy. The opinions and views expressed in this newsletter are my own and do not necessarily reflect those of the sources or the AI models.