🤝 The future of humanity: Can we survive the rise of superintelligence?

Dear curious minds,

The rapidly evolving landscape of Artificial Intelligence (AI) could lead to the creation of a superintelligence, an entity capable of surpassing human intelligence in every aspect. The open question is whether this pursuit of technological advancement will ultimately benefit humanity or lead to unforeseen consequences that could have far-reaching and devastating effects.

In this issue:

💡 Shared Insight

P(doom) and the Future of Humanity: Expert Insights and Predictions

📰 AI Update

Claude 3.5 Sonnet: Anthropic Challenges OpenAI with Powerful New AI Model

Former OpenAI Chief Scientist Ilya Sutskever Launches Safe Superintelligence Inc.

🌟 Media Recommendation

The Future of AI and Geopolitics with Former OpenAI Employee Leopold Aschenbrenner

The Mind Behind ChatGPT: Ilya Sutskever's Vision for AI's Future

💡 Shared Insight

P(doom) and the Future of Humanity: Expert Insights and Predictions

P(doom) refers to the estimated probability of catastrophic outcomes or "doom" resulting from AI development, particularly related to existential risks from artificial general intelligence (AGI). It originated as an inside joke among AI researchers but gained prominence in 2023 following the release of GPT-4 and warnings from high-profile figures about AI risks.

Several prominent figures have stated their P(doom) estimates [the individual sources are linked in the P(doom) Wikipedia article]:

Yann LeCun (Chief AI Scientist at Meta): <0.01%

Vitalik Buterin (Cofounder of Ethereum): 10%

Geoffrey Hinton (AI researcher): 10%

Elon Musk (CEO of Tesla, SpaceX and xAI, owner of 𝕏): 10-20%

Dario Amodei (CEO of Anthropic): 10-25%

Yoshua Bengio (AI researcher): 20%

Jan Leike (AI alignment researcher at Anthropic): 10-90%

Eliezer Yudkowsky (AI researcher): 99%+

It's important to note that these estimates are subjective and speculative, based on individual perspectives and assumptions about AI development and potential risks.

The concept of P(doom) has also faced criticism for its unclear timeframes, the lack of a precise definition of "doom," and doubts about its overall usefulness as a metric.

📰 AI Update

Claude 3.5 Sonnet: Anthropic Challenges OpenAI with Powerful New AI Model

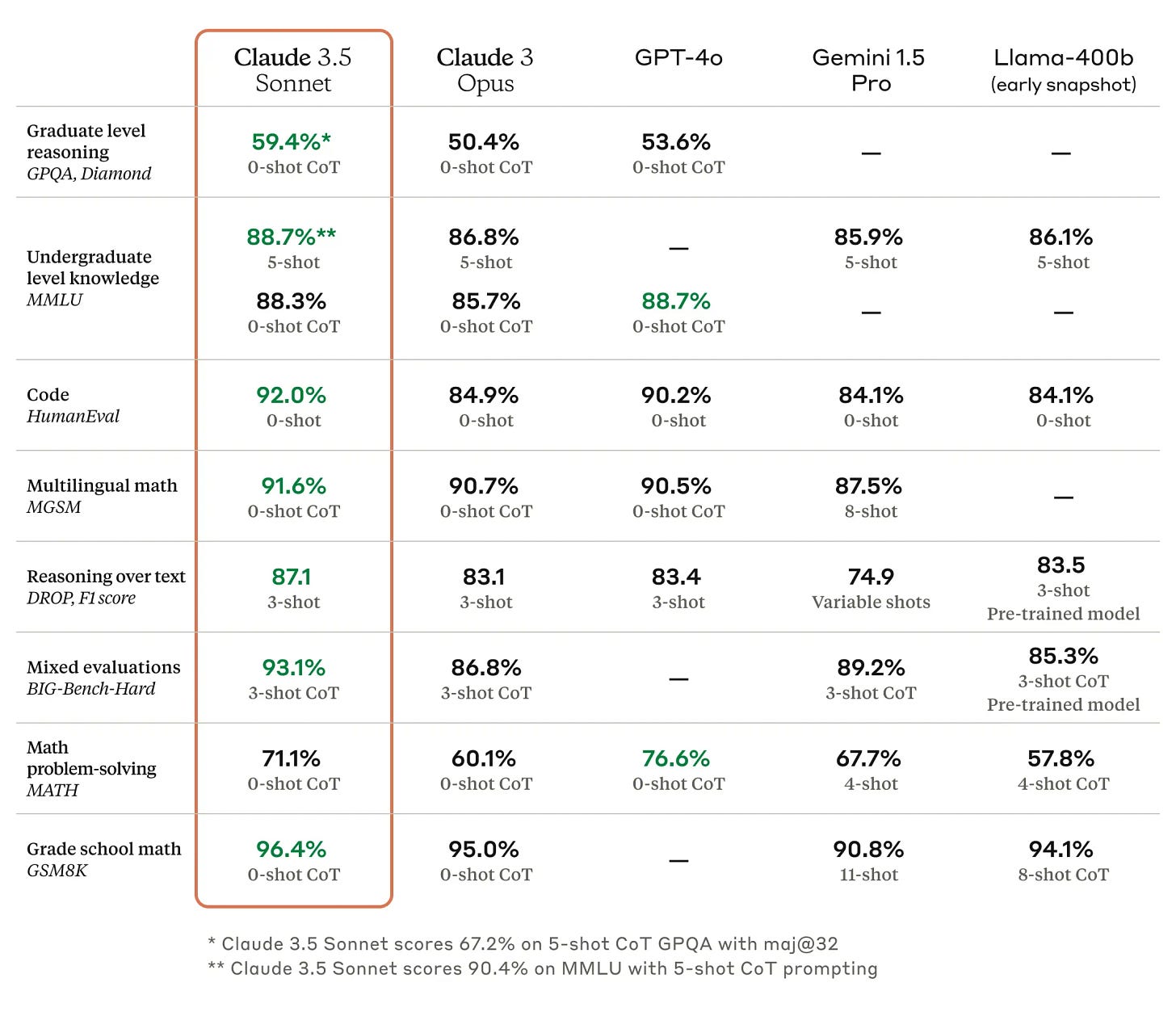

Anthropic's latest release, Claude 3.5 Sonnet, outperforms even GPT-4o, currently OpenAI's best model, on key evaluations, according to Anthropic's published benchmarks.

Claude 3.5 Sonnet is available for free on Claude.ai and the Claude iOS app, with higher rate limits for subscribers, as well as through the Anthropic API, Amazon Bedrock, and Google Cloud's Vertex AI.

The model is priced at $3 per million input tokens and $15 per million output tokens, with a 200K-token context window. For comparison, GPT-4o costs $5 per million input tokens and likewise $15 per million output tokens, but has only a 128K-token context window.
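To make the price difference concrete, here is a small cost calculator. It is only a sketch: it assumes the list prices at launch in June 2024 ($3 input / $15 output per million tokens for Claude 3.5 Sonnet, $5 input / $15 output for GPT-4o), which may have changed since, and the model keys and the example workload of 100,000 input and 10,000 output tokens are hypothetical.

```python
# List prices in USD per million tokens at launch (June 2024); they may have changed since.
# The dictionary keys are illustrative labels, not official API model identifiers.
PRICES = {
    "claude-3.5-sonnet": {"input": 3.00, "output": 15.00},
    "gpt-4o": {"input": 5.00, "output": 15.00},
}


def cost_usd(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the cost in USD of processing the given number of input and output tokens."""
    price = PRICES[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


# Hypothetical workload: a long prompt (100,000 tokens) with a shorter answer (10,000 tokens).
for model in PRICES:
    print(f"{model}: ${cost_usd(model, 100_000, 10_000):.2f}")
```

With these assumed prices the example costs $0.45 on Claude 3.5 Sonnet versus $0.65 on GPT-4o. Since both models charge the same for output tokens, the entire gap comes from input tokens, so input-heavy tasks such as summarizing long documents benefit the most.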

Anthropic also describes the model as particularly strong at image understanding.

Anthropic also introduced Artifacts, a new interactive feature available as an experimental option. It lets users edit and build upon AI-generated content such as code snippets, vector graphics, or text documents in real time.

My take: The release of Claude 3.5 Sonnet, which appears to outperform GPT-4o, could put pressure on OpenAI to release its next-generation model, likely named GPT-5, sooner. This could lead to a new wave of innovation in large language models, ultimately benefiting users with more powerful and capable AI tools.

The new Artifacts feature offers a glimpse of a more interactive way of working, in which the model acts as a real-time collaborator on your own files.

Former OpenAI Chief Scientist Ilya Sutskever Launches Safe Superintelligence Inc.

Ilya Sutskever is a well-known figure in the AI research space. He co-founded OpenAI and served as its Chief Scientist, leading the groundbreaking research which resulted in the models powering ChatGPT.

He was part of the board decision that led to the firing of CEO Sam Altman in November 2023. After this event, Sutskever largely disappeared from public view until May 15, 2024, when he announced his departure from OpenAI after nearly a decade.

Ilya has now announced on 𝕏 that he is launching a new venture aimed at achieving safe ASI (Artificial Superintelligence). The company, Safe Superintelligence Inc., is co-founded by Daniel Gross, an entrepreneur and investor, and Daniel Levy, a former scientist at OpenAI.

So far, little beyond the mission statement has been shared publicly:

Safe Superintelligence Inc.

Superintelligence is within reach.

Building safe superintelligence (SSI) is the most important technical problem of our time.

We have started the world’s first straight-shot SSI lab, with one goal and one product: a safe superintelligence.

It’s called Safe Superintelligence Inc.

SSI is our mission, our name, and our entire product roadmap, because it is our sole focus. Our team, investors, and business model are all aligned to achieve SSI.

We approach safety and capabilities in tandem, as technical problems to be solved through revolutionary engineering and scientific breakthroughs. We plan to advance capabilities as fast as possible while making sure our safety always remains ahead.

This way, we can scale in peace.

Our singular focus means no distraction by management overhead or product cycles, and our business model means safety, security, and progress are all insulated from short-term commercial pressures.

We are an American company with offices in Palo Alto and Tel Aviv, where we have deep roots and the ability to recruit top technical talent.

We are assembling a lean, cracked team of the world’s best engineers and researchers dedicated to focusing on SSI and nothing else.

If that’s you, we offer an opportunity to do your life’s work and help solve the most important technical challenge of our age.

Now is the time. Join us.

Ilya Sutskever, Daniel Gross, Daniel Levy

June 19, 2024

My take: When Ilya Sutskever announced his departure from OpenAI, many speculated about his next move. It seemed natural to assume that he would either start his own venture or join a competitor. Now we have our answer: Ilya is co-founding Safe Superintelligence Inc., a company dedicated to achieving safe superintelligence. Best wishes to Ilya, his co-founders, and the entire team at Safe Superintelligence Inc.! May their efforts lead to groundbreaking advances in AI safety and contribute to a brighter future for all.

🌟 Media Recommendation

The Future of AI and Geopolitics with Former OpenAI Employee Leopold Aschenbrenner

Leopold Aschenbrenner (personal blog), a former member of OpenAI's Superalignment team, was a guest on the Dwarkesh Podcast. The episode is titled: Leopold Aschenbrenner - China/US Super Intelligence Race, 2027 AGI, & The Return of History

Besides listening to the episode in your favorite podcast player, you can also watch the full 4.5-hour interview on YouTube. A good alternative is a one-hour walk-through with interpretations and comments from Matthew Berman, also on YouTube.

You might have noticed that former OpenAI employees rarely share insights about the company. This is likely because OpenAI reportedly required departing employees to sign restrictive non-disclosure and non-disparagement agreements or risk losing their vested equity. Leopold stated that he valued his "freedom" over equity worth close to a million dollars.

He argued that artificial general intelligence (AGI) appears to be within reach and that electricity will be the main resource needed to achieve it. He also explained why data centers are vulnerable in the intelligence and industrial race and why they must be protected from possible first strikes.

Leopold also wrote an essay series titled “SITUATIONAL AWARENESS: The Decade Ahead”, which analyzes the AGI landscape, from advances in deep learning and computational power to geopolitical factors and the pursuit of AGI.

My take: Leopold shares countless interesting insights in the podcast. If you are interested in the possible consequences of the race to AGI, this episode is a must-listen, and Leopold is certainly a person to follow.

The Mind Behind ChatGPT: Ilya Sutskever's Vision for AI's Future

Ilya Sutskever, a leading AI scientist behind ChatGPT, reflects on his founding vision and values in an 11-minute video called Ilya: the AI scientist shaping the world. The video was published by the Guardian at the end of 2023, and the interviews with Ilya are part of iHuman, a 93-minute documentary on AI.

Ilya finds inspiration in fundamental questions about learning, experience, thinking, and the workings of the brain. He views technology as a force of nature and draws parallels between technological advancement and biological evolution, emphasizing mutation, natural selection, and the emergence of complexity.

Ilya states that AI has the potential to solve major global issues such as unemployment, disease, and poverty. At the same time, AI also brings new challenges, such as amplified fake news, more extreme cyberattacks, and fully automated weapons.

My take: Another must-watch if you are interested in the future development of AI, and especially AGI. Hearing the views of one of the masterminds behind the recent advances in AI is a rare opportunity, as Ilya is known for being relatively private. This video not only sheds light on the technical aspects of AI development but also explores its philosophical implications, making it valuable for anyone seeking to grasp the broader impact of AI on society and human progress.

Disclaimer: This newsletter is written with the aid of AI. I use AI as an assistant to generate and optimize the text. However, the amount of AI used varies depending on the topic and the content. I always curate and edit the text myself to ensure quality and accuracy. The opinions and views expressed in this newsletter are my own and do not necessarily reflect those of the sources or the AI models.