🤝 The hottest new programming language is English

Dear curious minds,

In previous issues, I mainly focused on leveraging large language models (LLMs) for text-based experiments and applications. In this edition, we delve deeper into using these powerful models for coding tasks. This was triggered by a new release from Mistral AI, the only European company that has so far created foundation models.

In this issue:

💡 Shared Insight

AI Coding Assistants: A Powerful Tool, Not a Magic Wand

📰 AI Update

Mistral AI's Codestral Redefines Local Code Generation

Beyond Chatbot Arena: SEAL's Private LLM Benchmarks

🌟 Media Recommendation

The TED AI Show: Why the OpenAI Board Fired Sam Altman

💡 Shared Insight

AI Coding Assistants: A Powerful Tool, Not a Magic Wand

I received my doctoral degree in computer science in 2018. To implement the approaches developed in my thesis, I wrote quite a lot of code, most of it in C++ and Python. But a change is underway: if you want to be efficient, programming is no longer what it was during my most active coding years.

The hottest new programming language is English

— Andrej Karpathy (prev. Director of AI @ Tesla, founding team OpenAI)

Andrej Karpathy's bold claim captures the transformative power of AI in the coding landscape. Today's AI coding assistants are not merely code generators or explainers that you prompt in natural language; they are interactive collaborators, capable of suggesting modifications, crafting comments and test cases, and engaging in meaningful discussions about code. This fundamental shift raises important questions about the future of programming education and the role of human programmers.

The Changing Landscape of Programming Education

Traditionally, I and other developers learned to code through a hands-on, problem-solving approach. We had to deal with challenges and discussed the problems we faced with colleagues and friends. We asked for or found solutions in online communities like Stack Overflow and adapted the code snippets for use in our own codebase. Furthermore, we put significant effort into creating test cases and documentation. This process helped develop our critical thinking, problem-solving skills, and deep understanding of code mechanics.

However, the rise of AI coding assistants presents a new model for learning. Will new programmers still walk the same path? Or will they use AI as their primary teacher, seeking its guidance and solutions at every turn? This shift could potentially accelerate the learning process, allowing newcomers to bypass some of the initial hurdles and focus on higher-level concepts.

The Importance of Foundational Knowledge

I like the analogy of the calculator and AI coding assistance. Calculators are extremely useful tools, but they are most effective when users have a foundational understanding of mathematical operations. Similarly, AI coding assistants are powerful, but their true potential is realized by programmers who understand the underlying principles of coding.

Relying solely on AI-generated code without understanding its inner workings is like trusting a calculator's output blindly. It's crucial for programmers to develop a critical eye, capable of evaluating the quality and accuracy of AI-generated suggestions.

The Evolving Role of the Programmer

As AI coding assistants continue to advance, the role of the programmer is likely to evolve. Rather than being mere code writers, programmers will become more like architects, designing the overall structure and functionality of software while leveraging AI to handle the implementation details. This shift will require a different set of skills, emphasizing critical thinking, problem-solving, and the ability to communicate effectively with AI.

The future of programming lies in finding the right balance between human expertise and AI assistance. Embracing AI as a collaborator can empower programmers to be more productive and creative, enabling them to tackle complex challenges and develop innovative solutions. However, it's essential to maintain a strong foundation in coding principles and understand the solutions generated by the AI.

By combining the best of both worlds, the programming community can unlock new levels of efficiency, creativity, and innovation. The future of programming is bright, and those who adapt to this changing landscape will be well-positioned for success in this exciting new era.

📰 AI Update

Mistral AI's Codestral Redefines Local Code Generation

Mistral AI introduces Codestral, their first model designed for code generation tasks.

The model is trained on a diverse dataset of 80+ programming languages, including popular ones like Python, Java, and JavaScript, as well as niche languages such as Swift and Fortran.

Codestral can complete functions, write tests, and fill in missing code, saving developers time and reducing errors.

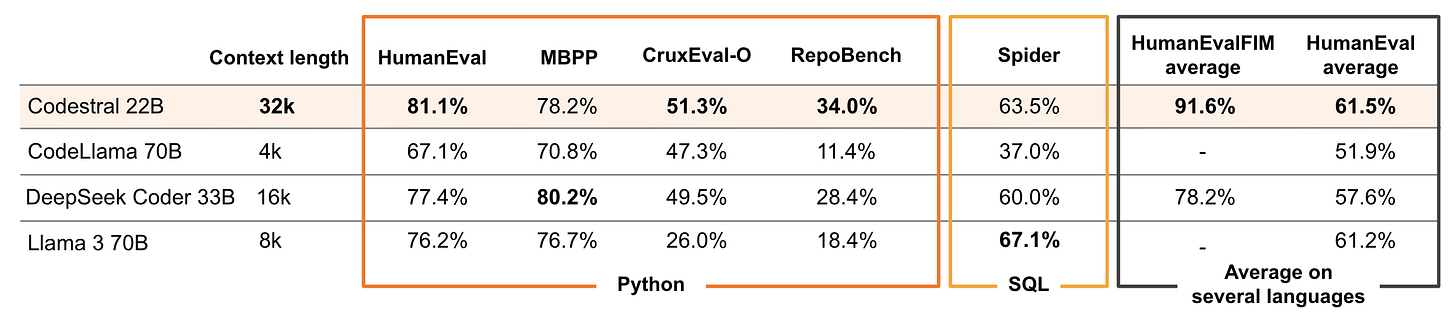

According to the shared benchmarks, Codestral outperforms its open competitors, even the larger ones, in most benchmarks.

In the release article, Mistral AI names multiple options for running the model via API calls, but its big advantage is that you can run it locally or in your own cloud instance.

As a 22B model, Codestral can run with 8-bit integer quantization on high-end consumer GPUs with 24 GB of GPU memory, like the NVIDIA GeForce RTX 3090 or 4090. With 4-bit integer quantization, it will run on chips with 16 GB of memory.
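The arithmetic behind these numbers can be sketched in a few lines of Python. This is a rough back-of-the-envelope estimate that only counts the model weights; the helper name `weight_memory_gb` is made up for illustration, and the real footprint will be higher because the context (KV cache) and runtime overhead also need memory.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory needed for the weights alone, in GB (10**9 bytes)."""
    bytes_total = num_params * bits_per_weight / 8  # 8 bits per byte
    return bytes_total / 1e9


# "22B" parameters, as stated in the text above.
CODESTRAL_PARAMS = 22e9

for bits in (16, 8, 4):
    gb = weight_memory_gb(CODESTRAL_PARAMS, bits)
    print(f"{bits:>2}-bit weights: ~{gb:.0f} GB")
```

Under these assumptions, the 8-bit weights take roughly 22 GB, which is why a 24 GB card is a tight fit, while the 4-bit weights take roughly 11 GB and leave some headroom on a 16 GB chip.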

The large context window of 32k tokens allows better assistance in multi-file programming scenarios. This advantage is also reflected in the RepoBench results (see image above), a benchmark that tests the models on complex, repository-level tasks.

Codestral is released under the new Mistral AI Non-Production License, which restricts commercial use of the model and its outputs, unlike the Apache 2.0 license used for previous open models from Mistral.

A first local test of the model is easy to run in Ollama, which has already added Codestral to its model catalog.
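If you prefer a script over the chat window, a local test could look roughly like the sketch below. It assumes that Ollama is running on its default local address, that the model has already been pulled under the name `codestral`, and that you use Ollama's `/api/generate` endpoint without streaming. The helper names `build_request` and `generate` are my own and not part of any library.

```python
import json
import urllib.request

# Assumption: Ollama listens on its default local port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "codestral") -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "codestral") -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]
```

With the server running, calling `generate("Write a Python function that reverses a string.")` should return the model's answer as a string. Nothing leaves your machine, because the request only goes to localhost.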

Mistral AI highlights the integrations in Tabnine and Continue.dev for using Codestral in your coding environment. The latter is an open-source extension that supports VS Code, today’s most common editor.

My take: I am impressed by the new coding model, Codestral, from Mistral AI. In a first test, chatting with it in Ollama, the model perfectly solved a programming task that was too difficult for many developers applying to the Deep Learning team at TOMRA. Exploring the model integrated into VS Code via Continue.dev was even more impressive. Codestral approaches the capabilities of cloud-based solutions like GitHub Copilot, but stands out by running locally on my computer without sharing any data with the outside world.

Beyond Chatbot Arena: SEAL's Private LLM Benchmarks

If you are following this newsletter, you likely know that so far the Chatbot Arena is the best option to compare the performance of LLMs. Since a recent change, you can even pick a category, including the newly created option for hard prompts.

Good evaluations are difficult to build as public test datasets leak into training sets and an accurate and fair human evaluation is very labor-intensive.

However, there is an interesting new competitor for LLM evaluations, the SEAL Leaderboards for private, expert evaluations of frontier models:

Evaluated models include GPT-4o, GPT-4 Turbo, Claude 3 Opus, Gemini 1.5 Pro, Gemini 1.5 Flash, Llama 3 70B, and Mistral Large.

Evaluation categories: Coding, Math, Instruction Following, and Spanish.

Design principles: Private domain expert evaluations.

Periodic updates with new evaluation sets and models.

My take: It's refreshing to see a new AI benchmark like the SEAL Leaderboards that aims to be unexploitable. However, as many models run only in the cloud, the companies behind these models receive the prompts when the benchmark is run for the first time. Nevertheless, the detailed explanations of their approach in general and of every evaluation category are fantastic. As this issue focuses mainly on AI coding assistance, I mostly looked at the explanation for the coding evaluation. This commitment to transparency is vital for understanding the capabilities and limitations of these models.

🌟 Media Recommendation

The TED AI Show: Why the OpenAI Board Fired Sam Altman

The new podcast “The TED AI Show” hosted by Bilawal Sidhu explores the thrilling and sometimes terrifying future of AI with leading experts, artists, and journalists.

Helen Toner joined as a guest in the second episode of the podcast. She was part of the OpenAI board which fired Sam Altman in November 2023. Within a week, Sam was rehired and less than two weeks later, Helen resigned from the board.

In the episode, Helen publicly shared, for the first time, the reasons for the board's decision. She said that Sam had been withholding information and misrepresenting things for years, which made it hard for the nonprofit board to make sure that the company acted for the public good.

Disclaimer: This newsletter is written with the aid of AI. I use AI as an assistant to generate and optimize the text. However, the amount of AI used varies depending on the topic and the content. I always curate and edit the text myself to ensure quality and accuracy. The opinions and views expressed in this newsletter are my own and do not necessarily reflect those of the sources or the AI models.