🤝 The secret to free Claude 3.5 Sonnet usage

Discover Zed, the coding editor that unlocks unlimited AI assistance for non-coding tasks

Dear curious mind,

Today, I'm excited to share a powerful way to leverage Large Language Models (LLMs) directly within a coding editor for non-coding tasks. Even better, this method allows you to use the frontier model Claude 3.5 Sonnet for free!

It's been an eventful week in the AI world, with major announcements from tech giants like Meta and OpenAI. However, there is a concerning trend: many of the latest AI advancements are becoming inaccessible to users in Europe.

In this issue:

💡 Shared Insight

📰 AI Update

💡 Shared Insight

Zed: From Coding Editor to Universal AI Assistant

Imagine having unlimited access to one of the world's most advanced AI models, right at your fingertips and completely free. This isn't a far-off dream: it's the reality offered by Zed, an open-source coding editor that, for me, has rapidly evolved into something much more. It is now my central application for working with AI.

At its core, Zed is designed as a tool for developers, offering a fast and efficient coding environment. However, the recent addition of Zed AI has transformed it into a universal assistant capable of tackling a wide range of tasks. One reason I started using Zed is that Anthropic's Claude 3.5 Sonnet, which in my view is currently the best model for writing assistance, can be used for free in Zed. Note that it is unclear how long this free access will last, and using Zed intensively for non-coding tasks might not be the intended use case. However, the potential benefits are simply too good to ignore.

What truly makes Zed shine is its suite of context-building commands. The /file command lets you seamlessly incorporate any text file from your disk into the AI conversation, while /fetch parses a webpage and includes it directly in your prompt. Furthermore, Zed's prompt library lets you save text snippets and recall them with the /prompt command, a neat way to store personal information that steers the AI's replies in the direction you need. For example, you could save details about your specific Linux setup in a prompt, so that answers to Linux-related questions are tailored precisely to your environment.

These features are not just powerful; they are also transparent. Every piece of added context remains fully visible and editable, giving you unprecedented control over your AI interactions. And with Claude 3.5 Sonnet, your requests can use up to 200k tokens of context, which corresponds to roughly 150k words or 500 book pages.
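The 200k-token figure can be translated into words and pages with two rule-of-thumb ratios. The snippet below is only an illustrative sketch: it assumes about 0.75 English words per token and about 300 words per book page (the ratios implied by the figures above, not official Anthropic numbers), and the function name `context_capacity` is hypothetical.

```python
# Rough conversion of a token budget into words and book pages.
# Both ratios are assumptions (rules of thumb), not official figures.
WORDS_PER_TOKEN = 0.75  # English text averages roughly 3/4 of a word per token
WORDS_PER_PAGE = 300    # assumed words on a typical printed book page


def context_capacity(tokens: int) -> tuple[int, int]:
    """Return (approximate words, approximate book pages) for a token budget."""
    words = round(tokens * WORDS_PER_TOKEN)
    pages = round(words / WORDS_PER_PAGE)
    return words, pages


# Claude 3.5 Sonnet's context window of 200k tokens
words, pages = context_capacity(200_000)
print(f"~{words:,} words, ~{pages} pages")  # prints "~150,000 words, ~500 pages"
```

Real token counts depend on the tokenizer and the language of the text, so treat the result as an order-of-magnitude estimate.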

As in other chatbot applications, you can organize multiple chats in tabs and access older chats via a history button, allowing you to revisit and build upon previous conversations.

Initially, I used Zed for its intended purpose: programming. However, I soon realized how capable it is for general requests, and now I keep the editor open to ask for help with a wide variety of tasks, from simple word translations to complex document analysis, creative writing, and in-depth research. The ability to easily incorporate content from popular file-based note-taking apps like Logseq and Obsidian has made Zed a valuable asset in my knowledge management workflows as well.

While Zed's primary focus remains on coding, its AI features have opened up a world of possibilities. It's not just a coding editor; it's a gateway to a new era of AI-assisted work and creativity. The context-building commands are really helpful for tailoring the AI's responses to your needs. From my perspective, especially as long as Claude 3.5 Sonnet remains free in Zed, it is the best way to explore the new possibilities that text-based AI models offer.

Currently, Zed offers official builds for macOS and Linux. While Windows is not yet officially supported, Zed can be installed relatively easily via MSYS2 instead of building it yourself. MSYS2 is a software distribution and build platform for Windows that provides a Unix-like environment, making it easier to port and run Unix-based software on Windows. I successfully installed Zed on a Windows 11 system by following the MSYS2 installation instructions for Windows.

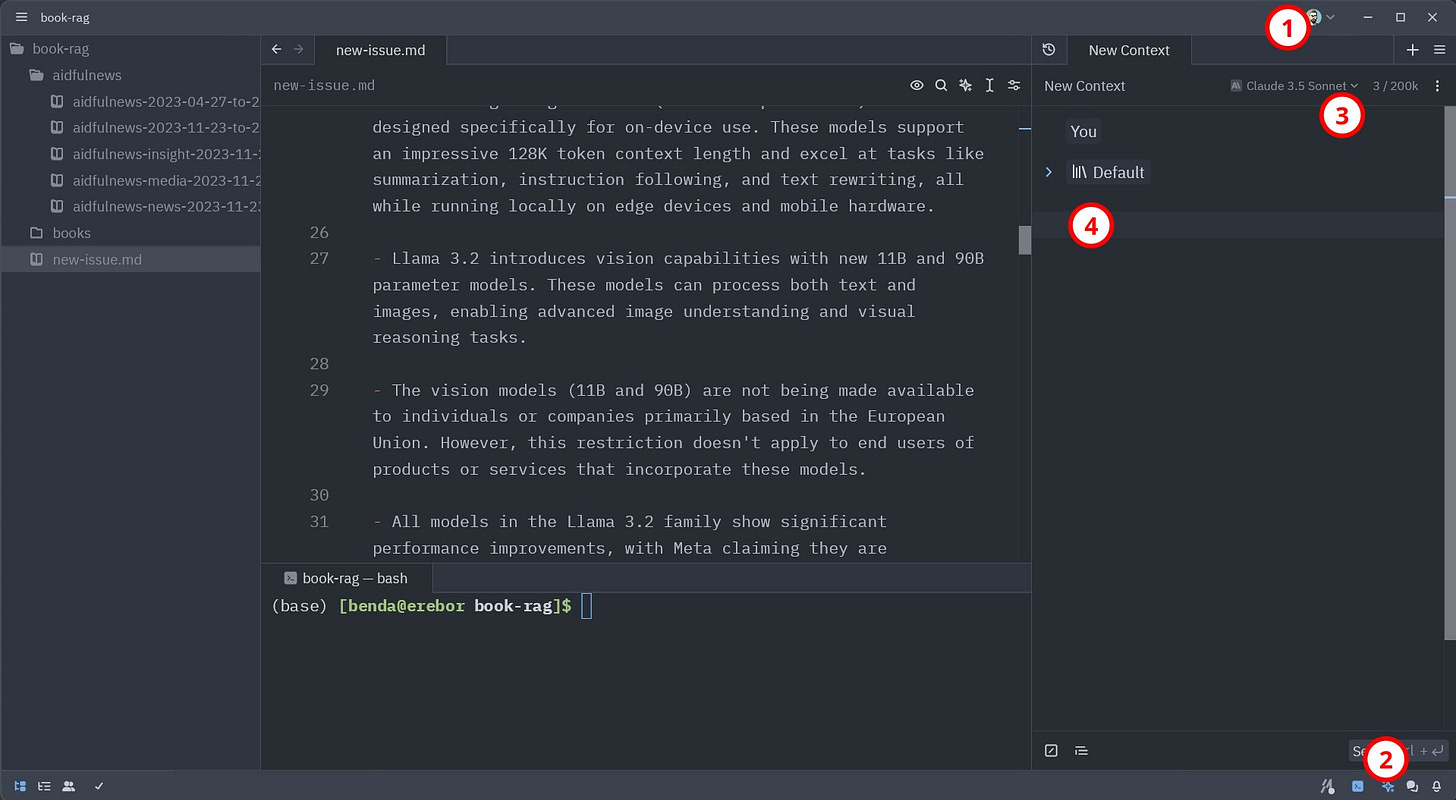

If you are now eager to get started with Zed for non-coding tasks, follow these steps after installation (the enumeration corresponds to the numbers shown in the image above):

1. Open the assistant panel by clicking the ✨ button in the lower right (or press CTRL/CMD + ?).

2. Choose “Claude 3.5 Sonnet Zed” from the dropdown menu.

3. Start chatting in the assistant panel (send a message with CTRL/CMD + ENTER).

As Zed is, from my perspective, currently one of the most powerful ways to use text-generating AI, I intend to create some video tutorials to help others unlock its full potential. If you're interested in tutorials on specific Zed features or use cases, please let me know! Your feedback will help shape the content and ensure it's as useful as possible. Have you tried Zed yourself? What has your experience been like? I'm eager to hear your thoughts and suggestions on how we can make the most of this powerful tool. Just hit reply.

📰 AI Update

Molmo: Allen AI's Open Breakthrough in Image Understanding AI Models

Allen AI has unveiled Molmo, a family of open-source multimodal AI models that are challenging the dominance of proprietary systems. The release includes four variants ranging from 7B to 72B parameters, all available under the Apache 2.0 license.

Allen AI, more formally known as the Allen Institute for Artificial Intelligence (AI2), is a non-profit research institute founded by the late Paul Allen, co-founder of Microsoft.

Molmo outperforms larger models: Molmo 72B surpasses Llama 3.2 90B, which was released on the same day, and matches or exceeds the performance of proprietary models such as OpenAI's GPT-4o, Google's Gemini 1.5 Pro, and Anthropic's Claude 3.5 Sonnet on various benchmarks.

The models are trained on PixMo, a high-quality dataset roughly 1,000 times smaller than those used by competitors. Its detailed image captions were created by having human annotators describe images aloud and transcribing their speech.

The initial release includes a demo, inference code, a technical report, and the following model weights:

Molmo 72B: Based on Qwen2-72B, with OpenAI's CLIP as the vision backbone

Molmo 7B-D: Based on Qwen2 7B

Molmo 7B-O: Based on OLMo 7B

MolmoE 1B: A mixture-of-experts model with 1B active and 7B total parameters

Over the next two months, Allen AI plans to release a more detailed technical report, the PixMo family of datasets, additional model weights and checkpoints, as well as training and evaluation code.

My take: This release demonstrates once more that high-quality data curation can reduce the need for massive datasets and compute in AI development. Even better is the open approach: unlike Meta's new multimodal models, Molmo can be used by European users.

Llama 3.2: Meta's On-Device and Image Understanding AI Models

Meta has released Llama 3.2, expanding its AI model family with powerful new capabilities. This update introduces small models for edge devices, vision-enabled large language models, and improvements across the board in performance and efficiency.

Two new lightweight models (1B and 3B parameters) are designed specifically for on-device use. These models support an impressive 128K token context length and excel at tasks like summarization, instruction following, and text rewriting, all while running locally on edge devices and mobile hardware.

Llama 3.2 introduces vision capabilities with new 11B and 90B parameter models. These models can process both text and images, enabling advanced image understanding and visual reasoning tasks.

The vision models (11B and 90B) are not licensed to individuals or companies based in the European Union, a restriction that is likely related to the EU AI Act and other data protection regulations in the region. However, it does not apply to end users of products or services that incorporate these models.

With respect to any multimodal models included in Llama 3.2, the rights granted under Section 1(a) of the Llama 3.2 Community License Agreement are not being granted to you if you are an individual domiciled in, or a company with a principal place of business in, the European Union. This restriction does not apply to end users of a product or service that incorporates any such multimodal models. [source]

All models in the Llama 3.2 family show significant performance improvements, with Meta claiming they are competitive with or exceed the capabilities of similar closed-source models in various benchmarks.

My take: It's nice to see Meta continuing to push the boundaries of open-source AI, and the new releases add welcome capabilities to the Llama model family. However, it is sad to discover the restrictions for European users. Mistral, with its recent Pixtral release, and Ai2, with Molmo, have been brave enough to release competing open models without such restrictions.

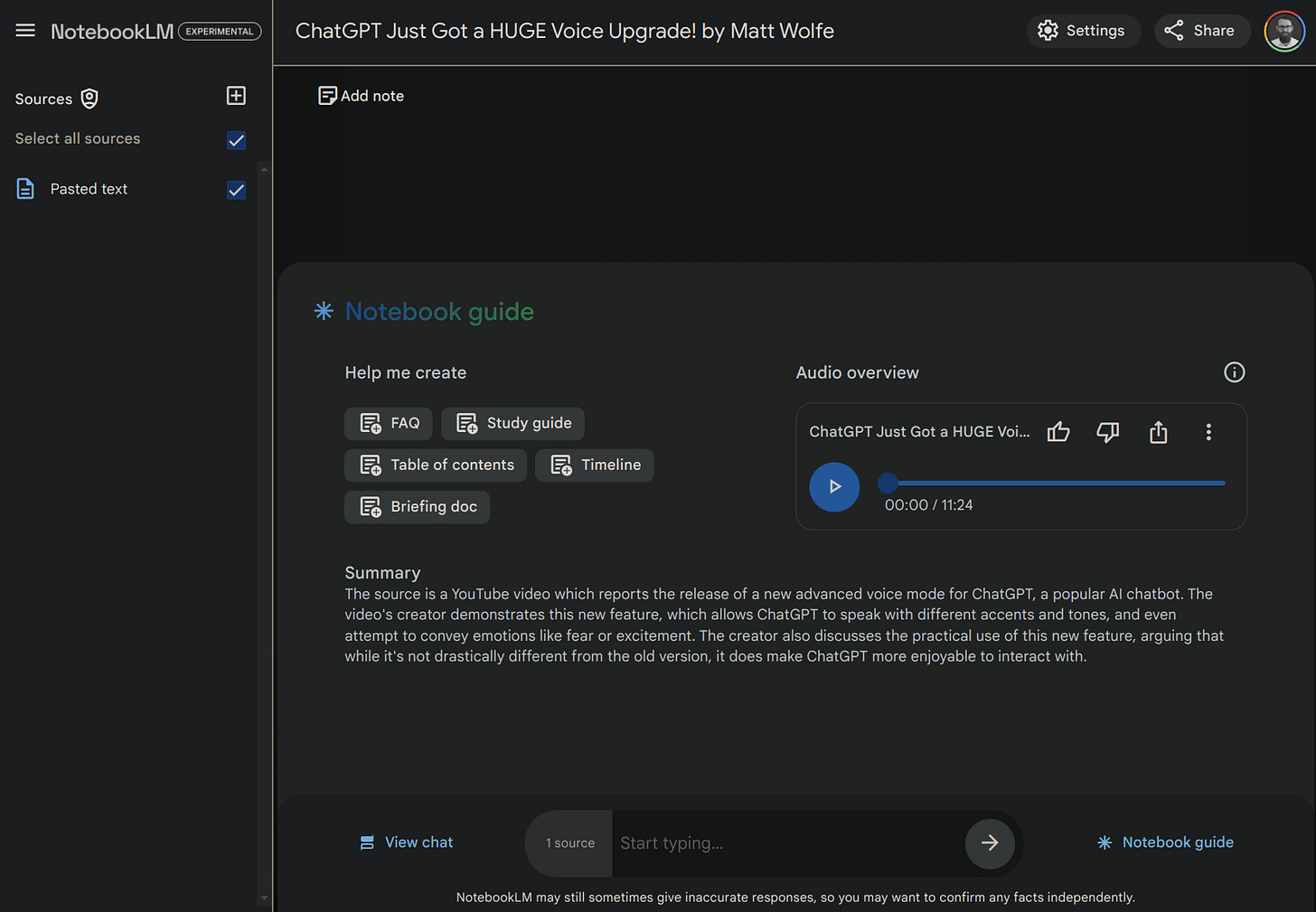

Google's NotebookLM: YouTube and Audio Support for AI-Generated Podcasts

Google has announced new features for NotebookLM, its AI-powered note-taking and research assistant.

Users can paste public YouTube video URLs directly into their notebook. NotebookLM analyzes the video transcript and allows for in-depth exploration through inline citations linked to the video's transcript.

The tool now supports audio file uploads, which are transcribed and added as sources.

Earlier in September, Google released the Audio Overview feature, which turns documents into remarkably high-quality podcast-style discussions.

Sharing an Audio Overview has become simpler. Users can generate a public link with just a click, making collaboration and information sharing easier.

My take: The new capabilities of NotebookLM, especially the extension of its podcast-like feature to YouTube videos and audio files, are impressive and potentially very useful. The quality of these generated discussions is fantastic, as this example shows. However, it's important to remember that NotebookLM is still an experimental product from Google, and as with any experimental product, there's always a risk that Google might discontinue or significantly alter the service at any time.

Notion Unveils Major AI Assistant Upgrade

Notion has released a significant update to their Notion AI assistant.

A key new feature is "selective knowledge", which allows users to specify exactly which parts of their workspace the AI should reference when responding. This enables more targeted and relevant outputs.

The AI can now save chat outputs as full Notion pages, including inserting them directly into databases. This simplifies the process of turning AI-generated content into notes.

Improved attachment handling allows the AI to read and summarize PDFs. However, spreadsheet support remains limited.

The assistant can now read and reference information from connected tools like Slack and Google Drive for a more comprehensive knowledge base.

My take: This update represents a meaningful step forward in making AI assistants more useful for working in Notion. The selective knowledge feature in particular could be a game-changer for getting relevant outputs. However, according to a review shared on YouTube by Landmark Labs, there's still room for improvement, especially in working with complex multi-database pages. It will be interesting to see how Notion continues to evolve their AI capabilities to support their core product.

OpenAI Rolls Out Advanced Voice Mode for ChatGPT, but Excludes European Users

OpenAI has begun the broader rollout of Advanced Voice Mode to ChatGPT Plus and Team subscribers, following its initial announcement at the Spring Update event in May 2024.

Advanced Voice Mode enables natural, spoken conversations with ChatGPT through the mobile app. Users can ask questions or have discussions via voice input and receive spoken responses. More details are shared in the Voice Mode FAQ.

Key features:

Nine lifelike output voices, each with a distinct tone and character

Ability to have background conversations while using other apps or with the phone screen locked

Integration with ChatGPT's memory and custom instructions features

Daily usage limits, with notifications as users approach their limit

Privacy aspects:

Audio clips from Advanced Voice conversations are stored alongside chat transcriptions

Users can delete conversations, which removes associated audio within 30 days

Audio is not used to train OpenAI's models unless users explicitly opt in

Advanced Voice is currently unavailable in the European Union member states, the United Kingdom, Switzerland, Iceland, Norway, and Liechtenstein. OpenAI has cited regulatory concerns as the reason for this restriction, which is likely related to the EU AI Act and other data protection regulations in these regions. Sam Altman, CEO of OpenAI, has specifically attributed the limitation to "jurisdictions that require additional external review," suggesting that the company is being cautious about deploying the technology in areas with more stringent AI oversight and data protection laws.

My take: It is great to see Advanced Voice Mode for ChatGPT finally reaching a wider audience. As a European user, I find it disappointing to be excluded. While some suggest using a VPN to access it, I will wait. There are many other exciting AI developments to explore in the meantime.

Disclaimer: This newsletter is written with the aid of AI. I use AI as an assistant to generate and optimize the text. However, the amount of AI used varies depending on the topic and the content. I always curate and edit the text myself to ensure quality and accuracy. The opinions and views expressed in this newsletter are my own and do not necessarily reflect those of the sources or the AI models.