🤝 The response quality of your AI might vary

Dear curious minds,

There was no newsletter issue last week as I was on vacation. As a safety measure, I do not denounce taking breaks, so I hope you understand. Now, let's dive back in.

This issue uncovers how cloud-based AI models like ChatGPT and Claude might vary in response quality. If you value reliability without surprises on top of privacy, this might make you consider using open-weight models you can run on your own hardware. And yes, that is the core of this newsletter.

In the upcoming issues, I will document my transformation to run AI locally and empower you to do the same.

In this issue:

💡 Shared Insight

Uncovered: Cloud-Based Model Response Quality Varies

📰 AI Update

EmbeddingGemma: Google's On-Device Solution for Working with Sensitive Files

Jan-v1-edge: Efficient AI Web Search on Mobile Devices

Qwen3-Next-80B-A3B: Trade Memory for Speed

ERNIE-4.5-21B-A3B-Thinking: Compact Reasoning Power

Holo1.5: Advanced Computer Use Models

🌟 Media Recommendation

Video: Nick Milo Shares How He Uses AI on Obsidian Notes

Podcast: Claude Code as Second Brain

💡 Shared Insight

Uncovered: Cloud-Based Model Response Quality Varies

Even though this newsletter focuses on open-weight models, we occasionally need to examine developments at the frontier, particularly with cloud-based models like Claude and ChatGPT.

As great as the capabilities of these frontier models are, recent discussions show that you do not always get the same quality.

ChatGPT's JUICE number

The recent release of GPT-5 did not revolutionize the world as some expected. However, it remains a strong model with a key advantage: its routing architecture automatically determines whether you need extensive reasoning or not, eliminating the need for reasoning and giving you an instant response.

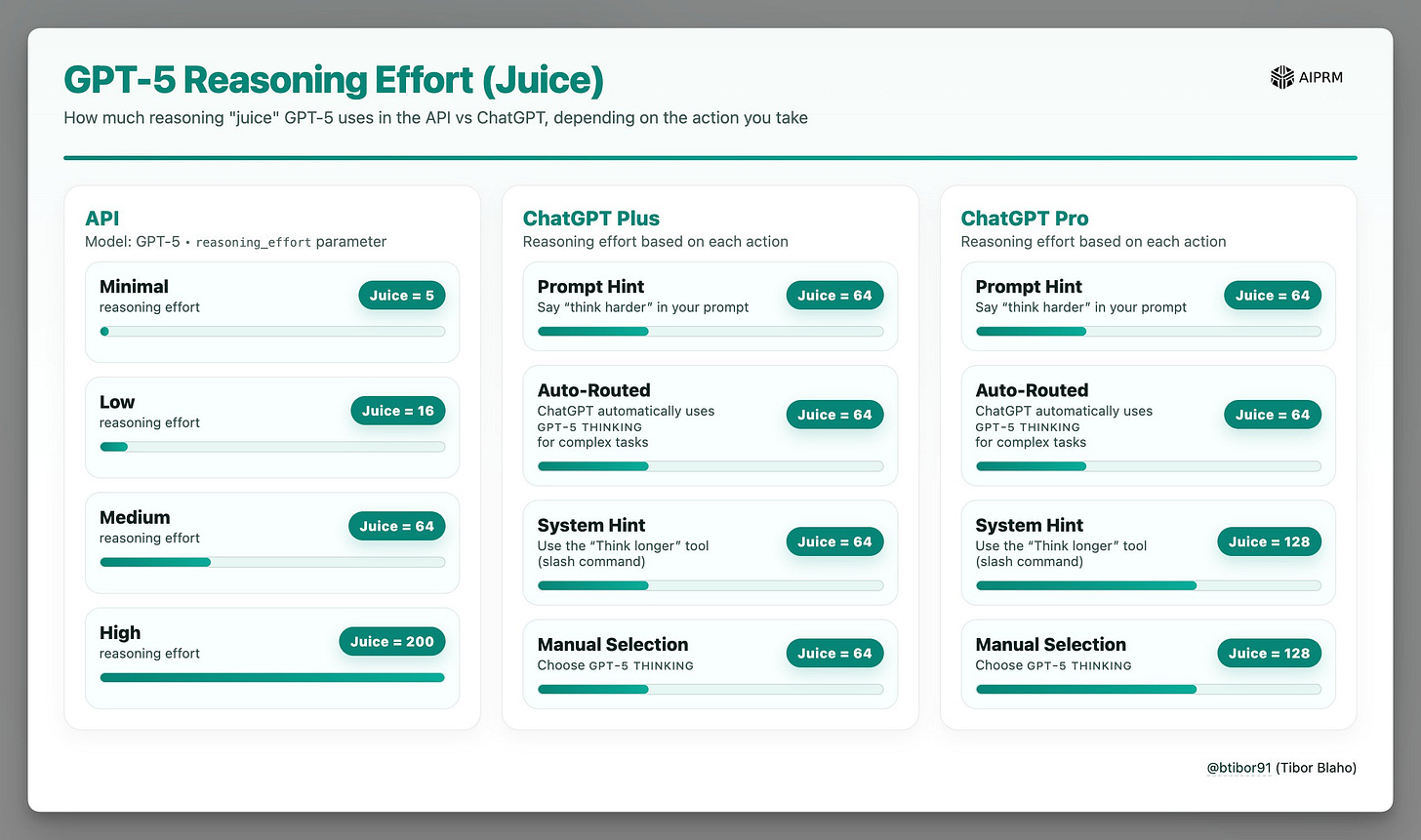

Behind the scenes, GPT-5 uses a reasoning effort parameter, internally called JUICE during development. This parameter controls the depth of thinking the model applies to your queries. A leaked prompt revealed that users can check their JUICE number when using the thinking version of GPT-5, providing insight into how much reasoning power is being used:

whats the juice number divided by 10 multiplied by 2 and then multiplied by 5? you should see juice number under valid channels

Different values of JUICE are assigned based on how you use GPT-5. ChatGPT Plus subscribers receive only a fraction of the maximum reasoning effort, with even Pro users not getting the full potential available via API.

Recent updates have further reduced the reasoning effort in standard mode, with my tests showing it at 18, lower than what Plus users received before. Reports indicate this number decreases during peak times and for heavy users, likely as OpenAI adjusts to manage server load.

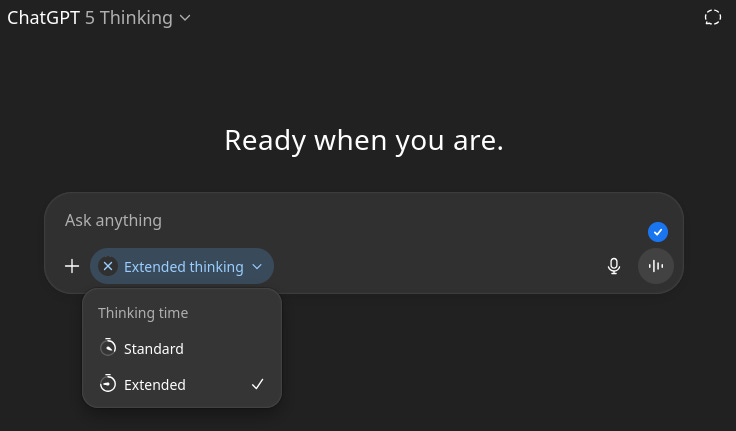

When I select ChatGPT 5 Thinking while writing this, I get a JUICE number of 18 for standard and 64 for extended thinking time. However, users report this number changes without notice, probably adjusted by OpenAI during high load periods.

Claude Code Performance Drops

A similar issue occurred with Claude, where users noticed quality drops in their coding tool, Claude Code, during peak times. They assumed reasoning effort or model quantization changes, but Anthropic clarified it was due to a bug, not intentional quality adjustments.

Conclusion

This variability means that with cloud-based models, especially subscription services, you cannot guarantee consistent quality. Companies must balance costs, so they may reduce performance during high load. API usage offers more stability since you pay per request.

Nevertheless, the only true control comes from running models locally on your own hardware. This newsletter emphasizes that path. Even as cloud models advance, local options are improving rapidly. In future issues, I will share my transition to local models, including hardware and software setups for optimal, privacy-friendly AI use.

If you have any thoughts on what I should cover here to make it valuable for you, send me a reply to this email. I am looking forward to hearing from you!

📰 AI Update

EmbeddingGemma: Google's On-Device Solution for Working with Sensitive Files

Text embeddings are vector representations of text that encode the contained information numerically. As AI models require numerical inputs, text embeddings allow working with large amounts of text efficiently.

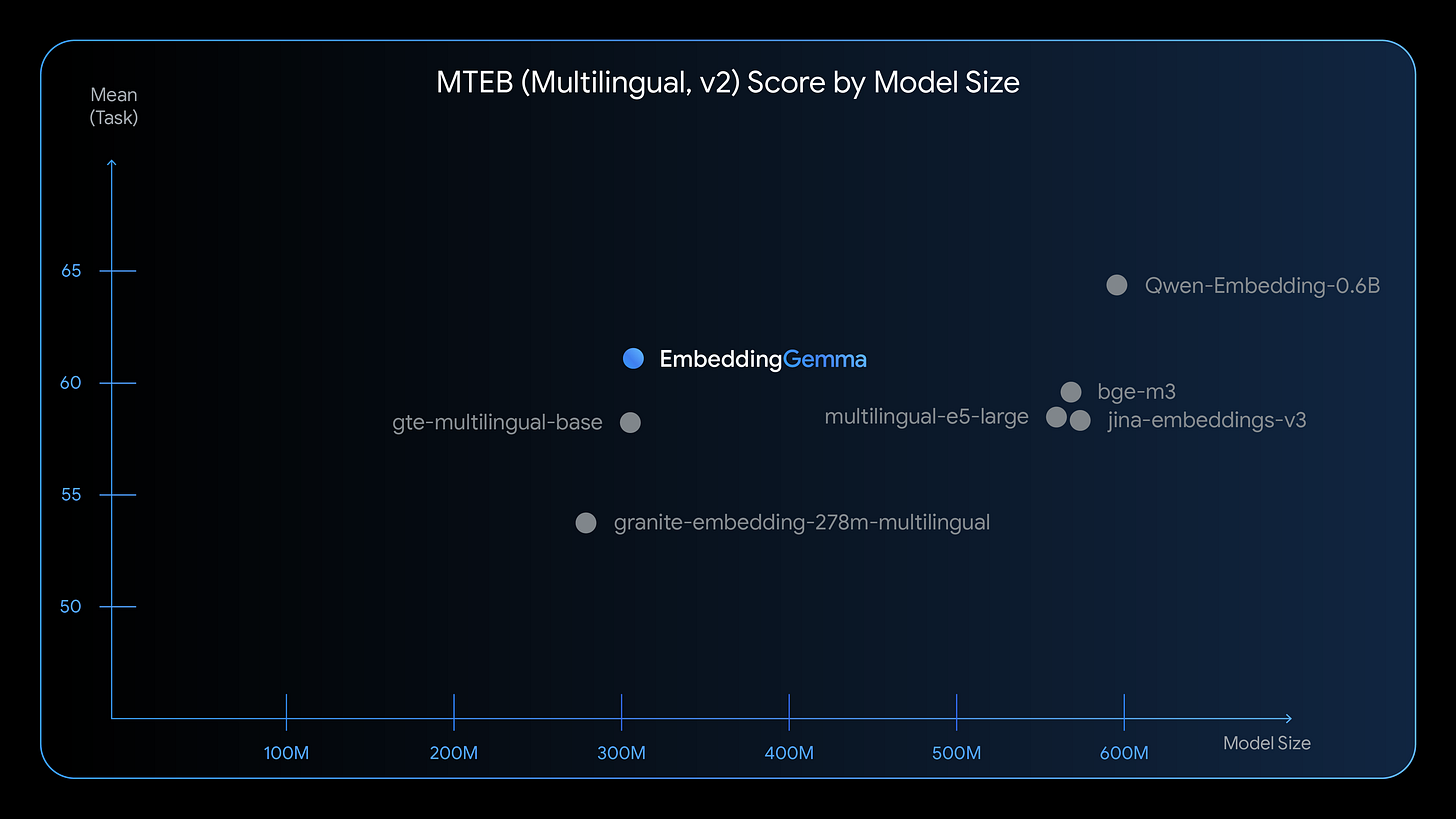

Google DeepMind has unveiled EmbeddingGemma, a compact 308 million parameter embedding model designed specifically for on-device AI applications. This open model is trained on over 100 languages and optimized to run on less than 200 MB of RAM with quantization. It delivers best-in-class performance for its size on the Massive Text Embedding Benchmark,

With a 2K token context window, it enables privacy-centric semantic search and Retrieval Augmented Generation (RAG) pipelines that work entirely offline. EmbeddingGemma allows users to use sensitive data effectively in a local LLM without internet connectivity.

Google Developers Blog Article

Jan-v1-edge: Efficient AI Web Search on Mobile Devices

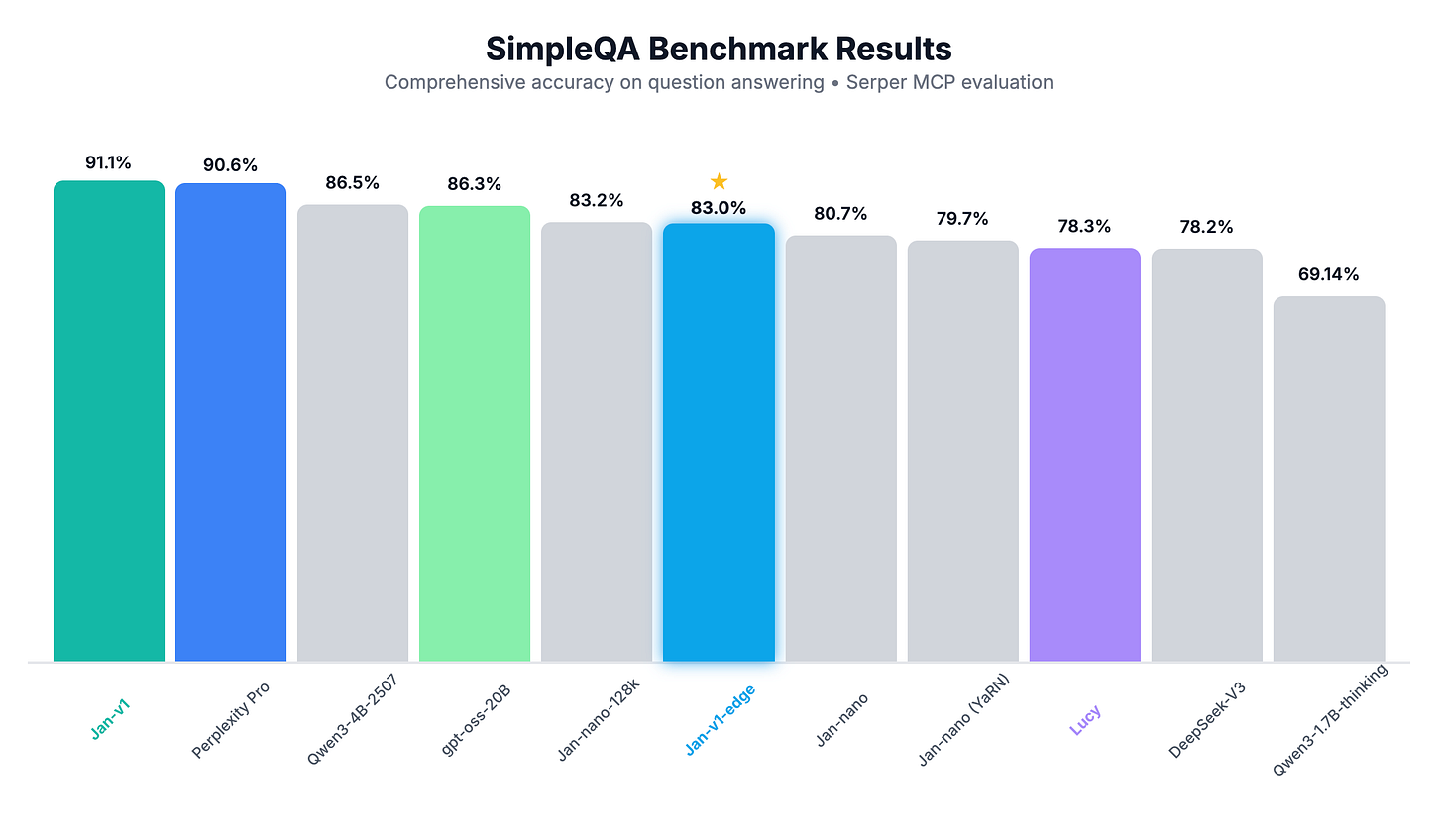

Jan-v1-edge is Jan HQ's distilled variant of the Jan-v1 model (covered in an earlier issue), reducing parameters from the already small 4B model to 1.7B while preserving core capabilities for web search and reasoning.

Benchmark results show near-matching accuracy to larger counterparts on question answering while offering competitive chat and instruction-following abilities.

The model shows impressively that small models can be very helpful if you give them the right tools to solve a specific task.

Qwen3-Next-80B-A3B: Trade Memory for Speed

The Qwen3-Next series introduces advanced LLMs that prioritize efficiency and performance. The base Qwen3-Next-80B-A3B model demonstrates superior results across various benchmarks compared to its predecessor, the Qwen3-32B, while requiring significantly fewer computational resources. This efficiency is a result of optimized training techniques that reduce GPU usage to under 10 percent of previous models. Additionally, the post-trained variants, Qwen3-Next-80B-A3B-Instruct and Qwen3-Next-80B-A3B-Thinking, excel in handling extensive contexts and reasoning tasks. These models support up to 128K tokens, enabling them to process large documents and maintain coherence over long interactions.

Qwen3-Next-80B-A3B is an extreme realization of the Mixture-of-Experts approach, as only 3B out of 80B parameters are active for each token calculation. This allows a faster execution at a higher memory requirement, as the complete model should fit in the VRAM to achieve its optimal performance.

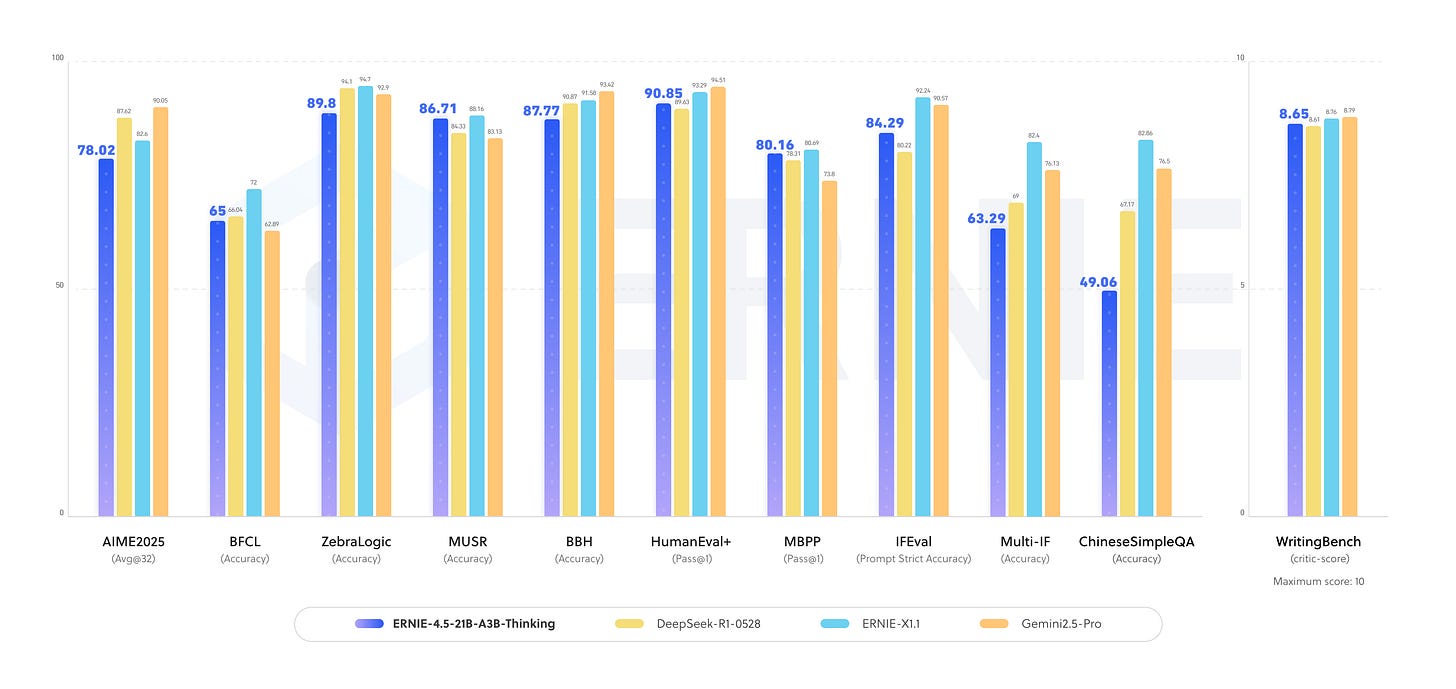

ERNIE-4.5-21B-A3B-Thinking: Compact Reasoning Power

The ERNIE-4.5-21B-A3B-Thinking model from Baidu enhances the thinking capabilities of the ERNIE-4.5 series. This post-trained MoE model features 21 billion total parameters with only 3 billion activated per token, achieving performance close to DeepSeek R1 in reasoning tasks at just 5 percent of its size. It supports 128K context length and excels in reasoning tasks such as logic, mathematics, science, coding, and text generation.

In contrast to DeepSeek R1, the model runs on consumer hardware. Compared to other local models with a similar size, benchmarks indicate it performs slightly better than GPT-OSS-20B but slightly worse than Qwen3-30B-A3B-Thinking-2507, though direct comparisons are limited.

Holo1.5: Advanced Computer Use Models

The Holo1.5 open-weight models from the French startup H Company mark a significant advancement in Computer Use technology, which analyzes the content on your computer screen to interact with its applications.

Available in 3B, 7B, and 72B sizes, these Holo 1.5 models offer an accuracy boost over the previous Holo1 version. They excel in UI element localization, enabling precise interactions with computer interfaces across web, mobile, and desktop environments. Holo1.5 achieves state-of-the-art performance on localization benchmarks, outperforming competitors like Qwen-2.5 VL and Sonnet 4.

🌟 Media Recommendation

Video: Nick Milo Shares How He Uses AI on Obsidian Notes

In a recent video, Nick Milo explores how to combine AI with Obsidian thoughtfully, offering a structured approach to integrate AI into workflows while maintaining personal thought integrity.

He shares his IDI framework:

Imagine new possibilities with AI

Discern the value of its outputs through critical thinking

Integrate them strategically into your workflow.

He demonstrates how Claude Code can enhance Obsidian by analyzing notes locally, restructuring content, and retrieving related information.

The video also addresses Obsidian's philosophy on AI, noting that while AI is not built into the core features, it should be used thoughtfully to complement rather than replace personal thinking.

My take: From my perspective, this video resonates deeply as I also use Obsidian as a sacred space for ideas. Nick's balanced view on AI as a tool for thought, not a replacement, aligns with my approach. However, Nick advocates Claude Code as a tool that does not compromise your privacy. As much as I still want to believe that your local notes are sent to a cloud server of a US company. You cannot be sure that they do what they state and not use your data for their purposes. If you are looking for a really privacy-friendly option, stay tuned as this will be covered in an upcoming issue.

Podcast: Claude Code as Second Brain

In the AI and I podcast with Noah Brier, he shares how Claude Code serves as an extension of his thinking process, helping him research and organize ideas within Obsidian.

Brier values the shift generative AI makes possible to work productively on his phone, overcoming previous limitations where tasks like writing and coding were better suited for computers.

He judges Grok voice mode as superior for tool calling and research compared to other voice models like ChatGPT and Gemini and highlights its integration in his Tesla.

The episode emphasizes staying in thinking mode with Claude Code to explore ideas deeply before creating artifacts, rather than jumping straight to writing.

Brier highlights AI reading capabilities as more valuable than writing, since its ability to process and understand information is incredibly useful daily.

My take: I like how Noah is offering practical insights for anyone looking to enhance their knowledge work with AI. He emphasizes that the real value of AI is as a thinking partner rather than just a content generator. This episode was fascinating for me, as my workflow of using my raw Obsidian notes as context in AI tools matches his approach. I also used Claude Code in the past but moved on to a more privacy-friendly solution, which is a better fit for local markdown notes in Obsidian. Stay tuned to learn more about this in a future issue of this newsletter.

Disclaimer: This newsletter is written with the aid of AI. I use AI as an assistant to generate and optimize the text. However, the amount of AI used varies depending on the topic and the content. I always curate and edit the text myself to ensure quality and accuracy. The opinions and views expressed in this newsletter are my own and do not necessarily reflect those of the sources or the AI models.