🤝 Your AI might be a double agent

Running locally is the only way to trust your AI

Dear curious mind,

As the capabilities of generative AI grow rapidly, we face a crucial question: Who does our AI really serve? The Shared Insight of this issue explores why your AI assistant might be a double agent, and how to ensure your data stays truly private.

In this issue:

💡 Shared Insight

Open vs. Closed AI: Why 2025 Will Change Everything

📰 AI Update

Llama 3.3: Squeezing 405B Performance into 70B Parameters

OpenAI: Final o1 Model With Image Support, Pro Tier, and Daily Releases

🌟 Media Recommendation

Video: Sam Altman on AGI Timeline, OpenAI's Future, and Industry Dynamics

💡 Shared Insight

Open vs. Closed AI: Why 2025 Will Change Everything

In our biweekly 𝕏 Space discussions with a few friends, we cover the latest AI news and developments, but we also explore crucial questions about responsible AI usage and its future implications. A recent conversation highlighted a fundamental challenge many organizations face: how to leverage AI's power while protecting sensitive data.

When a new participant asked about sharing customer data with OpenAI's API, it triggered an important discussion about data privacy and control. While OpenAI claims API conversations aren't used for training, your data still leaves your system and is stored on their cloud servers, and there's no guarantee about how it will be used in the future.

The Only True Data Protection is Local Usage

The only foolproof way to ensure data privacy is to keep your data under your own control, which means running AI models on your own hardware. This might seem more complex than using cloud-based solutions, but it's the only way to guarantee that your data never leaves your systems.
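To show how little is needed to keep prompts on your own machine, here is a minimal sketch assuming you run Ollama (the tool behind the Ollama model page mentioned below) on its default port 11434 and have pulled the `llama3.3` model. It sends a prompt to Ollama's local `/api/generate` endpoint using only Python's standard library. The helper names `build_request` and `generate` are hypothetical, chosen for this illustration.

```python
import json
import urllib.request

# Assumption: an Ollama server is running on this machine on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama3.3") -> urllib.request.Request:
    """Build a POST request for Ollama's local /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama3.3") -> str:
    """Send the prompt to the local model and return the generated text.

    The request only goes to localhost, so the prompt (for example,
    customer data) never leaves your own hardware.
    """
    with urllib.request.urlopen(build_request(prompt, model), timeout=300) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False, Ollama returns a single JSON object whose
    # "response" field contains the full answer.
    return body["response"]
```

For example, `generate("Classify this support ticket as billing or technical: I was charged twice.")` returns the model's answer as a string, provided the local server is running and the model has been downloaded.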

The Evolving AI Landscape

Currently, there's a noticeable gap between cloud-based foundation models (OpenAI, Anthropic, Google) and open-source models with shared weights. The compute required to train state-of-the-art models is increasing exponentially, and because many companies are unwilling to openly share the results of such large investments, this gap seems to be widening. There are exceptions, though, such as Meta (Llama) and Alibaba (Qwen).

While cloud-based models currently lead in benchmarks, the real question is:

Will this performance gap matter to end users in the near future?

Looking Ahead

As we move towards AI agents in 2025 and beyond, the paradigm is shifting. Instead of relying on one massive model to handle everything, agents will break down complex tasks into smaller, specialized subtasks. This shift presents three significant advantages for open-source models:

Specialization: Smaller, focused open-source models can excel at specific tasks.

Fine-tuning: Open weights allow customization for specific use cases.

Privacy: Complete control over data and processing.
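To make the idea of splitting a task into specialized subtasks more concrete, here is a hypothetical sketch of a tiny router that assigns each subtask to a specialized local model. The task types, model names, and the fixed two-step planner are illustrative assumptions only; a real agent would typically let an LLM produce the plan.

```python
from dataclasses import dataclass

# Hypothetical mapping from subtask type to a specialized local model.
# The model names are placeholders, not recommendations.
SPECIALISTS = {
    "extract": "small-extraction-model",
    "code": "local-code-model",
    "summarize": "local-summary-model",
}
FALLBACK_MODEL = "general-local-model"


@dataclass
class Subtask:
    kind: str
    instruction: str


def plan(task: str) -> list[Subtask]:
    """Toy planner: split a task into a fixed extract-then-summarize sequence."""
    return [
        Subtask("extract", f"Extract the key facts from: {task}"),
        Subtask("summarize", f"Summarize the extracted facts for: {task}"),
    ]


def route(subtask: Subtask) -> str:
    """Pick the specialized model for a subtask, or fall back to a generalist."""
    return SPECIALISTS.get(subtask.kind, FALLBACK_MODEL)


def assign(task: str) -> list[tuple[str, str]]:
    """Return (model, instruction) pairs; in this sketch all models are assumed to run locally."""
    return [(route(s), s.instruction) for s in plan(task)]
```

The design choice here is that each subtask only needs a model good enough for that narrow job, which is exactly where smaller, fine-tuned open-weight models can compete.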

While cloud-based solutions offer convenience today, the future might favor a more distributed approach with specialized open-source models. As AI agents become more prevalent, the ability to run these models locally while maintaining data privacy could become a crucial advantage.

The year 2025 could mark a turning point where open-source AI models shine, particularly in the context of AI agents. Organizations that start preparing for this shift now, by exploring open-source solutions and building infrastructure for local model deployment, will be well positioned to protect their data while still leveraging AI's full potential.

📰 AI Update

Llama 3.3: Squeezing 405B Performance into 70B Parameters (Ollama model page)

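Meta's new Llama 3.3 70B model comes impressively close to the performance of the much larger Llama 3.1 405B, while also offering multilingual capabilities and a 128K-token context window. However, a 30B version would be even more exciting for everyone who runs LLMs locally on consumer GPUs, since a 70B model does not fit into the memory of a single consumer GPU even at common 4-bit quantization.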

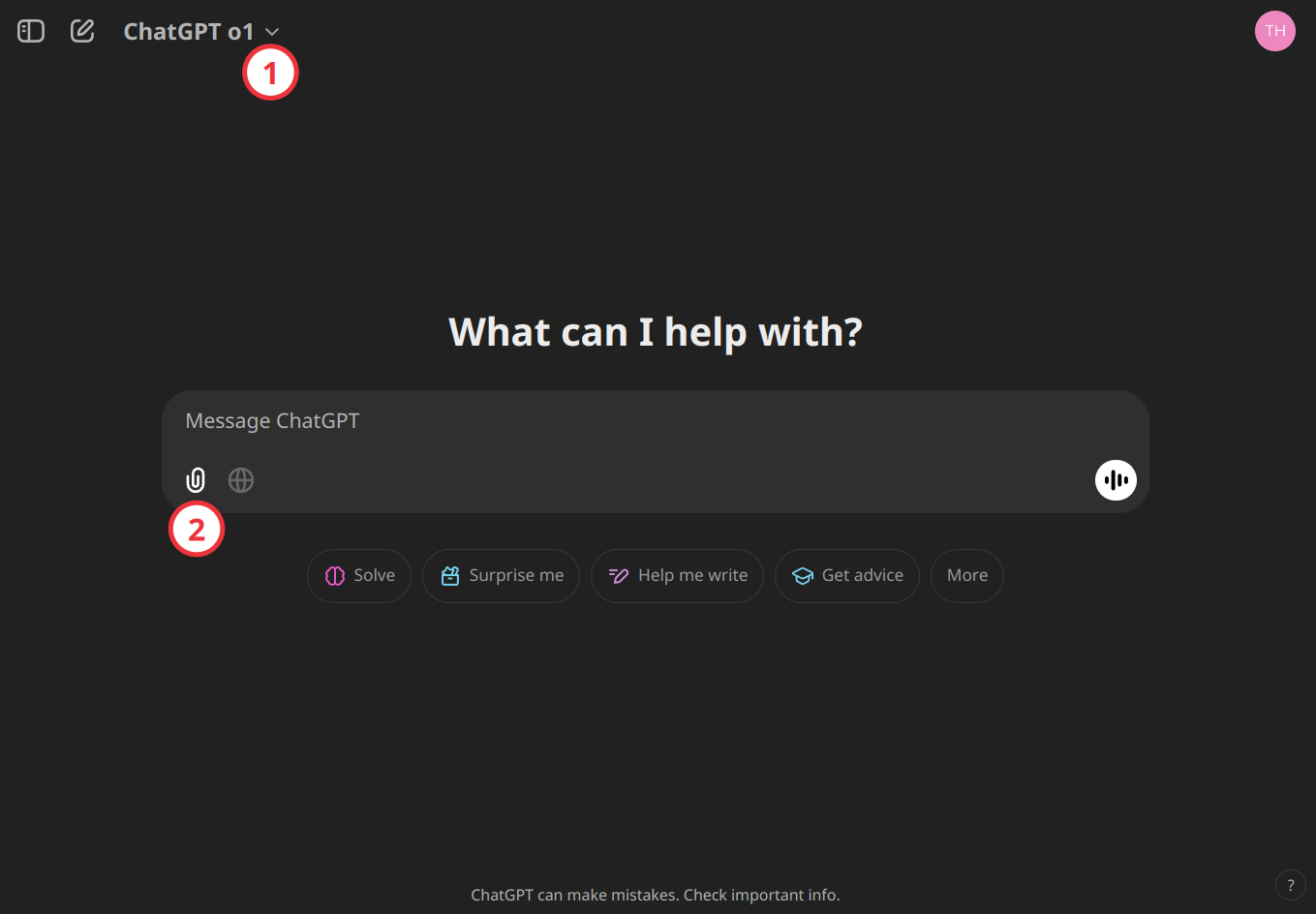
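A rough back-of-the-envelope calculation shows why model size matters so much for local use. Model weights alone need about parameters × bits per weight / 8 bytes. This sketch ignores the KV cache, activations, and runtime overhead, so real requirements are higher; the 24 GB limit is an assumption for a high-end consumer card such as the RTX 4090.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights only, in decimal gigabytes.

    Ignores the KV cache, activations, and framework overhead.
    """
    bytes_per_weight = bits_per_weight / 8
    # (params_billion * 1e9 params) * bytes_per_weight / 1e9 bytes per GB
    return params_billion * bytes_per_weight


CONSUMER_GPU_GB = 24  # assumption: a high-end consumer GPU such as an RTX 4090

for size in (70, 30):
    for bits in (16, 4):
        need = weight_memory_gb(size, bits)
        fits = "fits" if need <= CONSUMER_GPU_GB else "does not fit"
        print(f"{size}B @ {bits}-bit: ~{need:.0f} GB ({fits} in {CONSUMER_GPU_GB} GB)")
```

Under these assumptions, a 4-bit 70B model needs roughly 35 GB for its weights alone, more than a single 24 GB card offers, while a 4-bit 30B model needs about 15 GB and leaves some room for the KV cache.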

OpenAI: Final o1 Model With Image Support, Pro Tier, and Daily Releases (OpenAI webpage)

OpenAI has kicked off a "12 Days of OpenAI" event, featuring daily livestreams with new product launches and demonstrations from December 5 to December 20, 2024. The event began with the release of the full o1 reasoning model and the introduction of ChatGPT Pro, a $200-per-month subscription. Let's hope we finally get access to Sora, the text-to-video model OpenAI already announced in February.

🌟 Media Recommendation

Video: Sam Altman on AGI Timeline, OpenAI's Future, and Industry Dynamics

Sam Altman, CEO of OpenAI, shared insights in a recent interview at the New York Times DealBook Summit about the company's progress towards Artificial General Intelligence (AGI) and Artificial Super Intelligence (ASI).

AI development timeline:

Altman expects significant AI breakthroughs in 2025 that will surprise even skeptics.

He predicts AI agents capable of handling complex human-like tasks will emerge next year.

There is "no wall" in AI scaling progress, with advances continuing across compute, data, and algorithms.

OpenAI's relationship with Microsoft:

Altman denied reports of "disentanglement" between the companies.

While acknowledging some challenges, he emphasized the partnership remains positive.

He clarified that OpenAI doesn't need to build its own computing infrastructure.

Commoditization:

Altman compared AI to the transistor: a fundamental technology that will become widely available.

He expects AI capabilities to become embedded across products and services.

My take: The interview provides valuable insights into OpenAI's strategic thinking and Altman's vision for AI development. His timeline predictions are very ambitious, while his views on AI commoditization suggest a future where the technology becomes widely available rather than concentrated among a few players.

Disclaimer: This newsletter is written with the aid of AI. I use AI as an assistant to generate and optimize the text. However, the amount of AI used varies depending on the topic and the content. I always curate and edit the text myself to ensure quality and accuracy. The opinions and views expressed in this newsletter are my own and do not necessarily reflect those of the sources or the AI models.