🤝 AI Acting Drunk?

Dear curious mind,

This week, we explore why AI models might sometimes appear to be acting 'drunk', connecting it to LLM quantization. On top, you will get first insights about the first thinking model from the European company Mistral and a book bundle for a price where it is challenging to not buy.

In this issue:

💡 Shared Insight

Is Your AI Tipsy? The Alcohol Analogy for LLM Quantization

📰 AI Update

Mistral's Magistral: Behind in Benchmarks but Excelling in Languages

🌟 Media Recommendation

Humble Bundle: 17 O'Reilly Books on AI and ML You Can Chat With

💡 Shared Insight

Is Your AI Tipsy? The Alcohol Analogy for LLM Quantization

We interact with Large Language Models (LLMs) more and more frequently, whether through chatbots, coding assistants, or summarization tools. We often expect them to be fast and responsive, delivering insights quickly. But running powerful models, efficiently, involves some clever engineering. One key technique is called quantization.

Think of an LLM as a complex mathematical structure with billions of parameters (called weights) that define its knowledge and capabilities. These numbers are typically stored with high precision (often with 16 bits each as a floating-point number), offering maximum accuracy. However, this results in massive file sizes and requires significant computing power to answer requests (inference).

Quantization is a technique to reduce the precision of these numbers, storing them using fewer bits (e.g., 8 bits, 4 bits, or even fewer). This makes the model file size much smaller and speeds up the computations, making it possible to run larger models faster and on less powerful hardware. On top, instead of floating-point numbers, there are often whole numbers (integers) used to speed up the calculations even further.

But there's a trade-off. Reducing precision can impact the model's accuracy and performance. A great way to visualize this trade-off comes from John T Davies, who shared a simple yet insightful analogy in our last X-space (every Tuesday in even weeks at 9 PM CET - just go to @aidfulai on 𝕏 to join us) where we discuss various AI topics.

As the analogy illustrates, reducing the bits to store the LLM parameters (more drinks) makes the model smaller and faster (more tokens per second) responses, but it also reduces its clarity (output quality).

This concept is particularly visible and important for open-weight LLMs. Because you can download and run these models on your own hardware, you have control over the quantization level. Lower quantization (like Q4, which stands for 4 integer bits) often what makes it possible to run models with billions of parameters on consumer hardware and offers a good balance between size, speed, and a still quite usable quality. However, for tasks requiring maximum precision or when using many tools from multiple MCP servers, higher quantization (like Q8) or even full precision (FP16) might be beneficial.

Ultimately, you need to evaluate for yourself what works best for your specific needs. Q4 is often a great starting point for many tasks, offering a nice trade-off. But if you notice the model making errors or generating nonsensical outputs, especially in critical applications, consider trying a higher precision version. Just keep in mind that the lower you go in quantization, the worse the responses can get, and below Q4, you should treat the results with extra caution.

With cloud-based LLMs (like those offered in chatbots or via APIs by major providers), you typically have less transparency. The company running the service might use quantization internally to save costs and improve speed. Even worse, they might dynamically change the quantization level based on current server load. This means the quality of the responses you get could potentially vary from request to request, and you might not always be getting the peak performance you expect or have experienced previously.

Understanding quantization guides you in choosing the right model and settings for your tasks with open-weight LLMs, and gives you some background knowledge of what might happen behind the curtain for cloud-based LLMs.

📰 AI Update

Mistral's Magistral: Behind in Benchmarks but Excelling in Languages [Mistral news]

Mistral released the first reasoning models named Magistral in a Medium version via API and a small version building on top of Mistral Small 3.1 as open-weights with the Apache 2.0 license. While Magistral lags behind competing models like DeepSeek-R1 in their shared benchmarks even for the more powerful Medium version, the models shine in their multilingual capabilities, reasoning seamlessly across various languages including English, French, Spanish, German, Italian, Arabic, Russian, and Simplified Chinese. Personally, I'm particularly excited about the open-weights Magistral Small variant, which delivers impressive speed on my GPU setup with 24 GB of memory.

🌟 Media Recommendation

Humble Bundle: 17 O'Reilly Books on AI and ML You Can Chat With

There is so much happening in the AI space that it's really difficult to keep up with the news. Diving deeper into special topics of it is even harder and requires a lot of time and effort. On the one hand, books are static and seem to be outdated, but on the other hand, high-quality books written by authors which are experts in the topic are still a good entry point to dig deeper on a specific topic. A new Humble Bundle offers a fantastic opportunity to acquire high-quality, technical books on these vital topics at an unbeatable price:

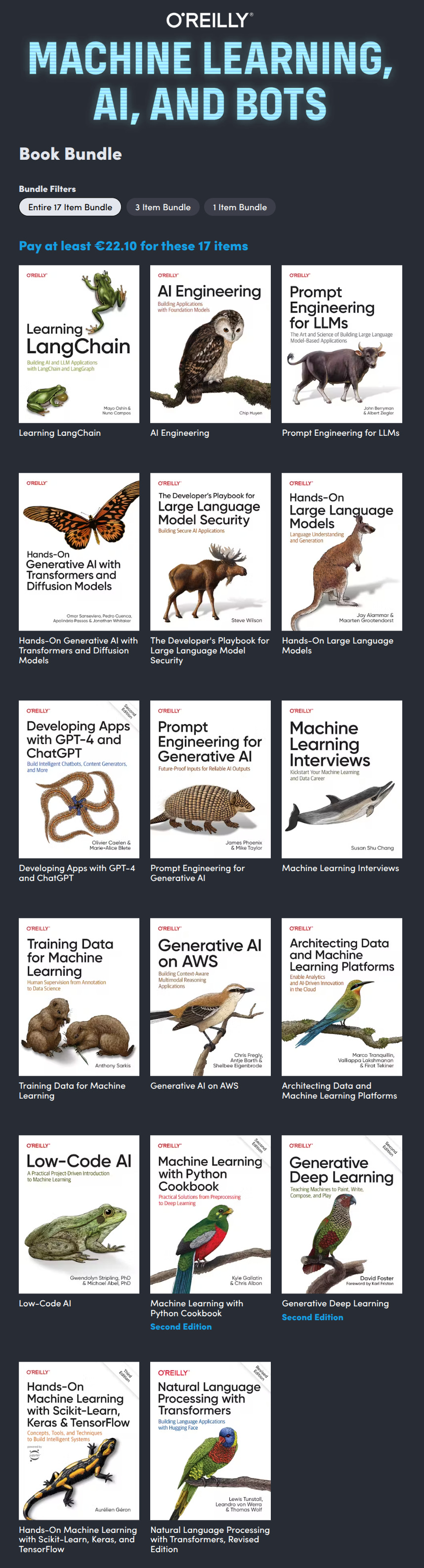

Humble Bundle is currently running an offer featuring a collection of 17 O'Reilly books.

These books cover key areas in cutting-edge AI, including Machine Learning, LLMs, LLM Engineering, and building agent systems. They are known for their high-quality and practical focus.

The price for the entire bundle is 22.10 EUR (or the equivalent in USD), representing a massive discount compared to buying the books individually.

This offer is limited in time, available for less than five more days.

A significant advantage of Humble Bundle books is that they are DRM-free. This means you can easily use their content as input for your LLM tools and summarize chapters, ask questions, or even "chat" with the book's content. You will be able to extract tailored knowledge and wisdom without needing to read the technical books cover-to-cover. You could even feed multiple books into large-context models like Gemini to find overlapping concepts or extract combined insights.

As with all Humble Bundles, you have the option to adjust how much of your purchase contributes to charity.

My take: This bundle is an absolute "no-brainer" if you're interested in the technical aspects of LLMs or engineering solutions with them. O'Reilly books are top-notch resources that go deep into the subject. Getting 17 of them for this price is truly remarkable. The ability to interact with the content using AI tools because they are DRM-free adds incredible value, making the information much more accessible and actionable. I can fully recommend this deal – seriously, act fast before it's gone! Just to be clear, I don't get any commission for recommending this, I just truly think it's a great offer.

Disclaimer: This newsletter is written with the aid of AI. I use AI as an assistant to generate and optimize the text. However, the amount of AI used varies depending on the topic and the content. I always curate and edit the text myself to ensure quality and accuracy. The opinions and views expressed in this newsletter are my own and do not necessarily reflect those of the sources or the AI models.