🤝 China offers AI for everyone

Dear curious mind,

The AI world is splitting into two camps: America is pursuing dominance through closed, controlled development, while Chinese researchers are releasing a steady stream of powerful AI models that anyone can use, modify, and build upon.

This divergence isn't just reshaping the competitive landscape; it is fundamentally changing who gets to participate in the AI revolution, as I elaborate in this issue's “Shared Insight.”

In this issue:

💡 Shared Insight

How Dueling National AI Strategies Could Reshape the Global Tech Landscape

📰 AI Update

HiDream-E1.1: New Open-Weight Image Editing Champion Emerges

FLUX.1 Krea [dev]: When AI Images Stop Looking Like AI

Step-3: A Strong Chinese Open-Weight Model That Targets Efficiency

Qwen3-235B: The Thinking Version Has Arrived

GLM-4.5: Another Chinese AI Model Matches Top Performers

Tencent's HunyuanWorld 1.0: Your Personal Playable 3D World

Wan2.2: Open-Source Video AI That Rivals the Big Players

💡 Shared Insight

How Dueling National AI Strategies Could Reshape the Global Tech Landscape

In the span of just days, the world's two AI superpowers unveiled dramatically different visions for AI's future. The contrast couldn't be more striking: while the United States focuses on achieving "global dominance," China is proposing international cooperation and open-source development. This divergence might determine not just who leads in AI, but how the entire world accesses and develops this transformative technology.

A Tale of Two AI Plans

Last week, the White House released America's AI Action Plan, which emphasizes deregulation and growth and explicitly states that America is in a "race to achieve global dominance" in AI. While the plan describes open-source models as important, recent actions tell a different story.

Just days later, China unveiled its Global AI Governance Action Plan, striking a remarkably different tone. Chinese Premier Li Qiang proposed a global AI cooperation body and warned against AI becoming an "exclusive game" for certain countries and companies. The plan calls for joint R&D, open data sharing, cross-border infrastructure, and AI literacy training, particularly for developing nations.

The Current Open-Source AI Situation

Despite America's stated support for open-source AI in its action plan, the current reality looks different:

The latest Llama 4 models from Meta have fallen short of expectations and trail competing open-weight models in performance.

Meta, once the champion of open-weight AI models, appears to be shifting strategy. Reports suggest they've paused work on their open "Behemoth" model to focus resources on closed models, with Mark Zuckerberg citing "superintelligence safety concerns."

OpenAI, despite its name, has not openly released a frontier model in years. It has announced plans to change that, but it keeps postponing the promised open-weight model week after week, officially citing "safety concerns."

Meanwhile, China is experiencing an open-source AI explosion:

The best-performing open-weight LLMs currently available come predominantly from Chinese companies and research labs. Models like Qwen3, Kimi K2, and GLM-4.5 are closing in on the best-performing closed models.

Furthermore, an entire ecosystem of specialized AI models and tools is being released openly.

The Linux Parallel

Remember how Linux transformed the software world? It provided an open alternative to proprietary operating systems and today powers everything from smartphones to supercomputers. China's current open-source AI strategy might be creating something similar: a foundational layer of AI technology that's freely available to anyone who wants to build on it.

This approach is particularly appealing to developing nations and organizations that might be excluded from Western AI ecosystems due to cost, politics, or access restrictions. By positioning itself as the open alternative, China could build a global network of developers, researchers, and nations aligned with its AI ecosystem.

The Strategic Implications

The divergence in strategies reveals different bets about AI's future:

The U.S. Approach: Control the most advanced AI through proprietary development, maintain technological superiority, and leverage this for economic and strategic advantage.

The Chinese Approach: Build influence through openness, create dependencies through freely available technology, and position itself as the collaborative alternative to US dominance.

Both strategies have advantages, but history suggests that open ecosystems often win in the long run through network effects. Developers worldwide will choose the path that gives them the most freedom and opportunity to innovate.

Looking Ahead

As we watch this unfold, several questions emerge:

Will safety concerns justify keeping advanced AI models closed, or are these concerns being used to maintain competitive advantage?

Can the U.S. maintain AI leadership while restricting access to its most advanced models?

Will China's open approach create the foundational AI infrastructure for much of the world?

The answers to these questions will shape not just the AI industry but potentially the entire global technology landscape for decades to come.

📰 AI Update

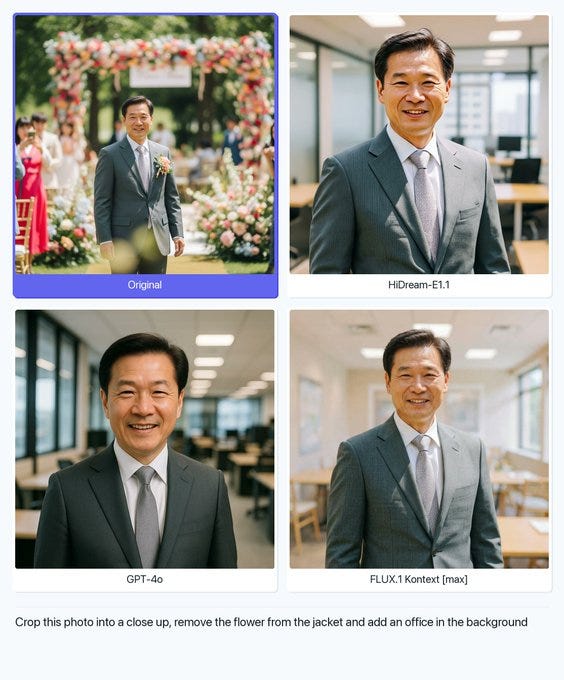

HiDream-E1.1: New Open-Weight Image Editing Champion Emerges [vivago.ai post on 𝕏]

A new player just claimed the top spot in open-source image editing: HiDream-E1.1. This 17B-parameter model from Hong Kong-based Vivago AI (Sparking Innovations Limited) has overtaken FLUX.1 Kontext [dev] in independent benchmarks. What makes it especially compelling is its MIT license, which allows commercial use, unlike the more restrictive license of FLUX.1 Kontext [dev]. Using Meta's Llama 3.1 as a text encoder, it accepts direct editing instructions and flexible aspect ratios while delivering impressive results that rival some of the best proprietary tools.

The timing feels significant as the race between open and closed AI systems heats up. While HiDream-E1.1 still ranks behind GPT-4o and the premium FLUX models, a truly open alternative that competes at this level opens doors for developers and creators who need commercial flexibility. The quality improvements are clearly noticeable, and for anyone building AI-powered creative tools, this could be a game-changer.

FLUX.1 Krea [dev]: When AI Images Stop Looking Like AI [Black Forest Labs blog]

Black Forest Labs and Krea.ai just dropped their first jointly trained model, and the results are genuinely impressive. According to the benchmarks they shared, the 12B open-weight model FLUX.1 Krea [dev] outperforms every other open-weight text-to-image generator. The sample images look remarkably realistic because their approach focuses on eliminating the typical "AI look" that makes generated images easy to spot.

While the leap in quality is exciting for creators and developers, it raises important questions about AI detection. These photorealistic results will likely make it significantly harder for people to identify fake images, potentially fueling misinformation campaigns with convincing visual "evidence." It's a classic double-edged sword in AI development: the same technology that empowers legitimate creative work also makes deception more accessible. Check out the technical blog post if you're curious about their training methodology.

Step-3: A Strong Chinese Open-Weight Model That Targets Efficiency [StepFun blog post]

StepFun has released Step-3, a 321B-parameter multimodal AI model that prioritizes speed and cost-effectiveness over raw performance. While it doesn't match the capabilities of the latest Chinese open-source releases, Step-3 delivers faster and therefore cheaper inference. The real value here isn't in beating benchmarks but in making powerful AI more accessible through clever engineering.

What makes this particularly interesting is StepFun's transparency: they've published a detailed technical report explaining their cost-effective decoding innovations. This open approach to sharing efficiency breakthroughs could accelerate the entire field's progress toward more practical, deployable AI systems. Sometimes the most impactful advances aren't about being the smartest model in the room but being the one that more people can actually afford to use.

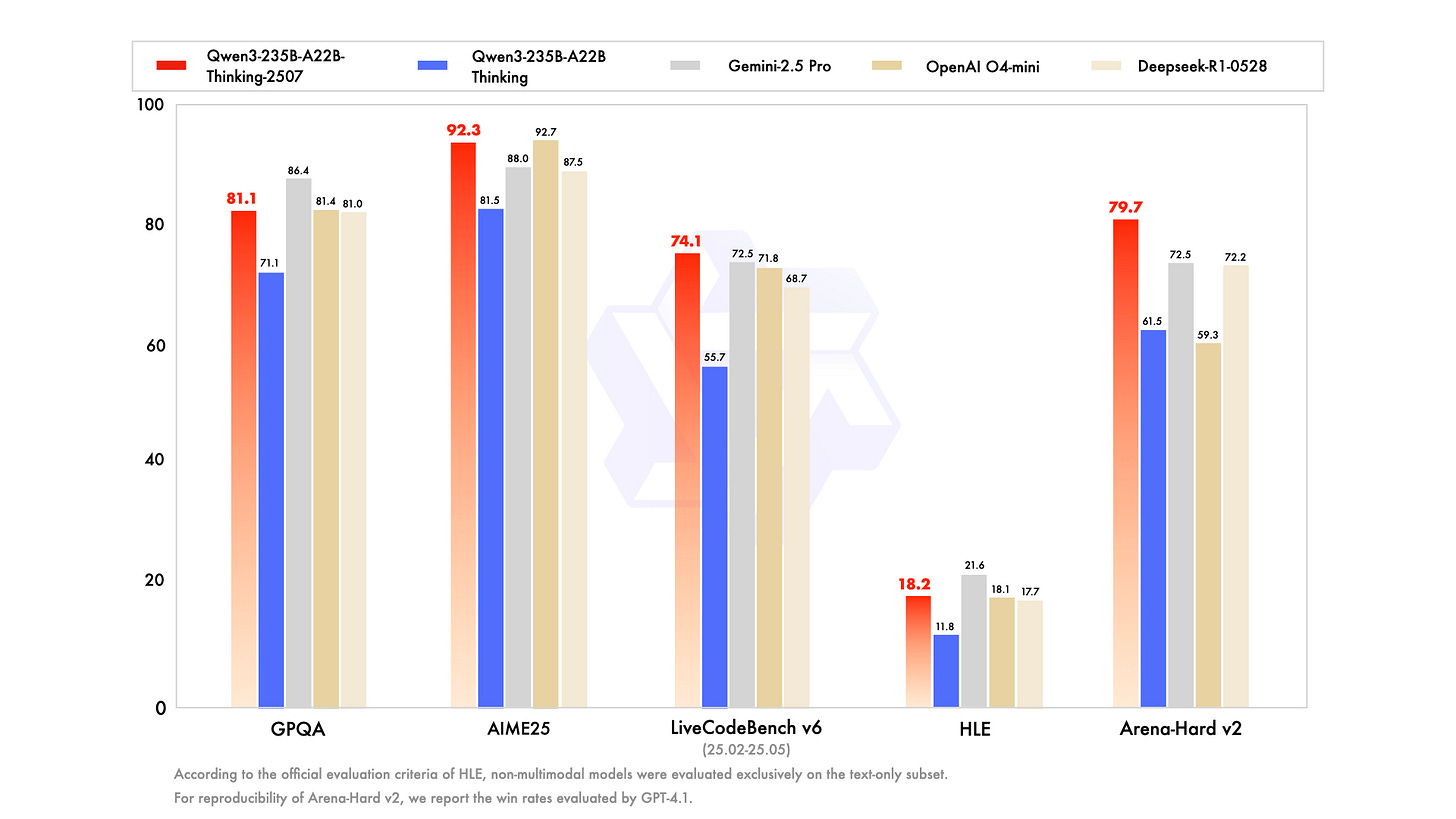

Qwen3-235B: The Thinking Version Has Arrived [Hugging Face model card]

Following last week's update about Qwen's new 235B-parameter non-thinking model, Alibaba has now released the dedicated thinking version: Qwen3-235B-A22B-Thinking-2507. This model works through a problem at length, exploring different lines of reasoning, before delivering its answer. With impressive benchmark scores, it positions itself as a strong competitor in high-performance reasoning alongside models like OpenAI's o1.

It's interesting to see the Qwen team follow user requests and split the previous hybrid 235B model into separate thinking and non-thinking versions. This is the opposite of the route the competition is taking: most model providers, including OpenAI, plan to merge their models in their next major release.

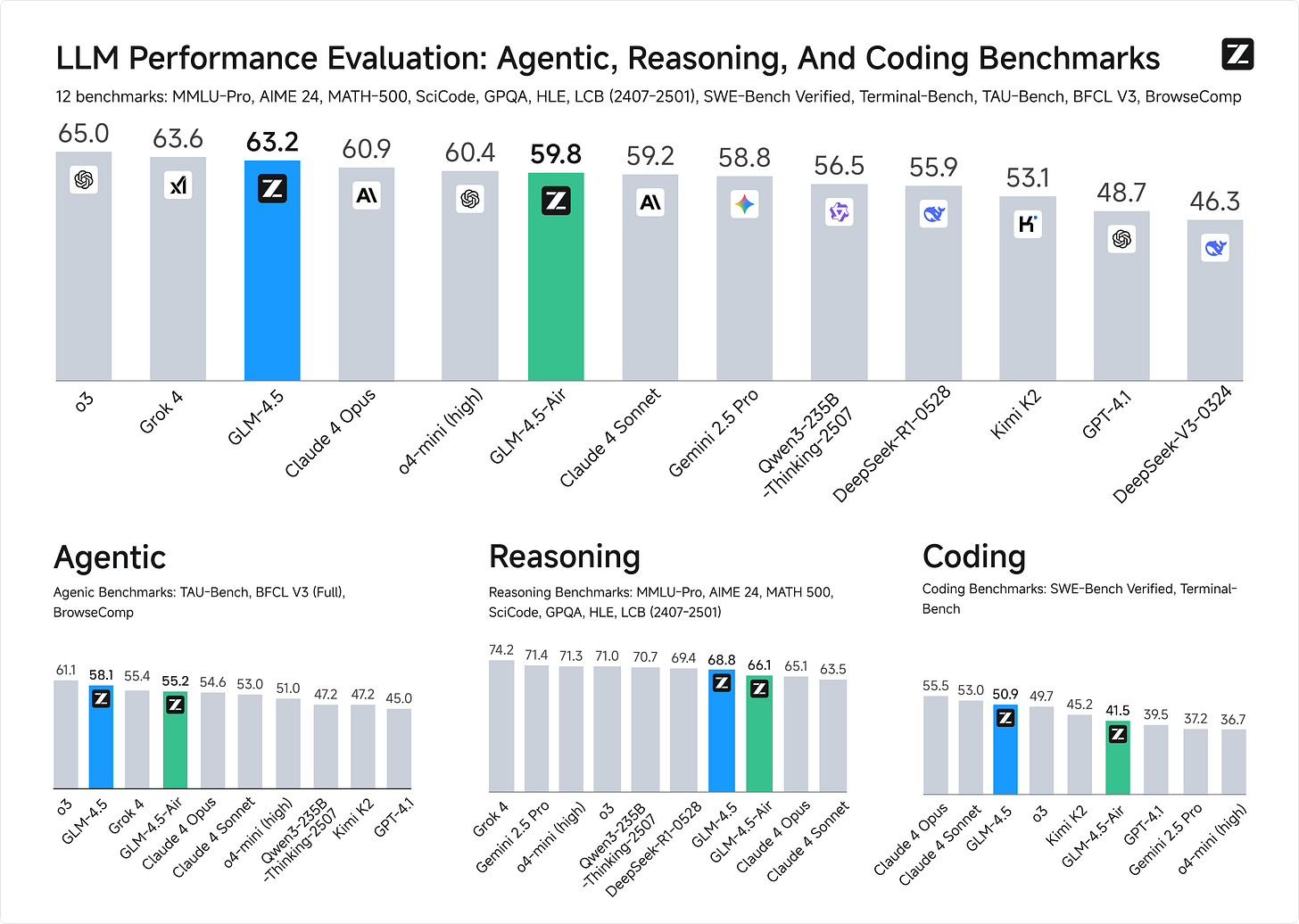

GLM-4.5: Another Chinese AI Model Matches Top Performers [Z.ai blog]

Another impressive open-source model has emerged from China, this time from Zhipu AI with its GLM-4.5 release. The model uses a Mixture-of-Experts architecture with 355B total parameters, of which 32B are active per token. The company claims it ranks 3rd overall across 12 benchmarks, putting it on par with Claude 4 Sonnet in agentic tasks and web browsing. Unlike the now-separate Qwen3-235B models, GLM-4.5 combines "thinking" and "non-thinking" modes in a single model.
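To make "355B total, 32B active" concrete: in a Mixture-of-Experts layer, a small router scores every expert for each token, and only the top-scoring few actually run. The toy sketch below illustrates that top-k routing idea in Python with NumPy. The expert count, top-k value, and dimensions are arbitrary assumptions for illustration, not GLM-4.5's real configuration, and each "expert" is reduced to a single weight matrix.

```python
import numpy as np

# Toy sizes chosen for illustration only (NOT GLM-4.5's real configuration).
D_MODEL = 16    # hidden size of a token vector
N_EXPERTS = 8   # experts in the layer
TOP_K = 2       # experts actually run per token

rng = np.random.default_rng(0)
# In this sketch, each expert is just one D_MODEL x D_MODEL weight matrix.
experts = rng.standard_normal((N_EXPERTS, D_MODEL, D_MODEL))
# The router maps a token vector to one score per expert.
router = rng.standard_normal((D_MODEL, N_EXPERTS))


def moe_layer(token: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Route one token to its TOP_K experts and mix their outputs."""
    scores = token @ router                    # shape: (N_EXPERTS,)
    chosen = np.argsort(scores)[-TOP_K:]       # indices of the top-k experts
    weights = np.exp(scores[chosen] - scores[chosen].max())
    weights /= weights.sum()                   # softmax over the chosen experts only
    # Only the chosen experts' weights are used; the others stay idle for this token.
    out = sum(w * (token @ experts[i]) for w, i in zip(weights, chosen))
    return out, chosen


token = rng.standard_normal(D_MODEL)
output, used = moe_layer(token)

total_expert_params = experts.size
active_expert_params = TOP_K * D_MODEL * D_MODEL
print(f"experts used: {sorted(used.tolist())}")
print(f"active share of expert weights: {active_expert_params / total_expert_params:.0%}")
```

With 2 of 8 experts selected, only a quarter of the expert weights do any work for a given token. By the same logic, GLM-4.5 uses roughly 32B of its 355B parameters (about 9%) per token, per the company's figures, which is where much of its speed and cost advantage comes from.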

The real standout for me is GLM-4.5-Air, a Mixture-of-Experts model with 106B total parameters and 12B active parameters. This smaller version maintains strong performance while being runnable, when quantized, on high-end consumer hardware (e.g., an AMD Ryzen™ AI Max+ 395).
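Why quantization matters here: a Mixture-of-Experts model activates only 12B parameters per token, but all 106B still have to sit in memory. The back-of-the-envelope sketch below estimates weight storage at a few precisions. It is a simplification under stated assumptions: it ignores the KV cache, activations, and runtime overhead, and it treats "4-bit" as exactly 4 bits per weight, whereas real quantization formats usually add a little overhead for scaling factors.

```python
# Rough weight-memory estimate for a 106B-parameter model at different precisions.
# Counts weights only; KV cache, activations, and runtime overhead are ignored.
PARAMS = 106e9  # GLM-4.5-Air total parameters, as stated above

# Idealized bits per weight (real quantized formats carry some extra bits).
BITS_PER_WEIGHT = {"BF16": 16, "8-bit": 8, "4-bit": 4}


def weight_gb(params: float, bits: int) -> float:
    """Return weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return params * bits / 8 / 1e9


for name, bits in BITS_PER_WEIGHT.items():
    print(f"{name:>6}: ~{weight_gb(PARAMS, bits):.0f} GB")
```

At 4 bits, the weights come to roughly 53 GB, which fits in a machine with a large unified-memory pool, such as a Ryzen AI Max+ 395 system configured with 128 GB (an assumed configuration), while the 16-bit version at roughly 212 GB would not.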

Tencent's HunyuanWorld 1.0: Your Personal Playable 3D World [GitHub]

Tencent just released HunyuanWorld 1.0, an AI system that creates immersive, explorable 3D worlds from simple text descriptions or images. Unlike traditional video generation, which gives you flat, non-interactive content, this system builds actual 3D environments you can move around in, complete with consistent geometry and meshes you can export to game engines. The technical approach is remarkable: it aims to combine the diversity of video-based generation with the consistency of a true 3D representation, so the worlds stay visually coherent from every angle.

What excites me most about this isn't just the technology, but where it's heading. We're looking at the future of personalized gaming where every player gets their own custom world tailored to their imagination. Instead of everyone playing the same Mass Effect or Skyrim, you could describe your dream sci-fi colony or fantasy kingdom and have a playable world generated just for you. This is the ultimate "audience of one" experience, where AI becomes your personal game designer, creating infinite unique adventures that no other player will ever experience.

Wan2.2: Open-Source Video AI That Rivals the Big Players [GitHub]

Alibaba just dropped Wan2.2, an open-source video generation model that is genuinely stunning. It produces 720p videos at a quality you would expect from premium state-of-the-art video generation tools like Runway or Pika, yet it is completely free and open source. It handles text-to-video, image-to-video, and combined text-and-image prompts with a coherence and detail that honestly surprised me when I saw the sample outputs.

What makes this especially exciting is how accessible it is: at least with its compact 5B variant, you can generate videos on a consumer GPU with 24 GB of memory, such as the Nvidia RTX 4090, and it's already integrated into ComfyUI for easy use.

Disclaimer: This newsletter is written with the aid of AI. I use AI as an assistant to generate and optimize the text. However, the amount of AI used varies depending on the topic and the content. I always curate and edit the text myself to ensure quality and accuracy. The opinions and views expressed in this newsletter are my own and do not necessarily reflect those of the sources or the AI models.