🤝 How AI turns everyone into a designer

Dear curious mind,

The design world is undergoing a fundamental shift as generative AI tools democratize creative capabilities, making professional design accessible to everyone. In this issue, we explore how Photoshop's dominance might be coming to an end and what that means for everyone.

In this issue:

💡 Shared Insight

The Death of Photoshop: How AI Makes Everyone a Designer

📰 AI Update

Seed-OSS-36B-Instruct: A Flexible Thinking Control Model from ByteDance

Command A Translate: Cohere's 111B Translation Specialist

InternVL3.5: Multimodal Model Family from Shanghai AI Lab

Hermes-4: Nous Research's Uncensored Models

🌟 Media Recommendation

Podcast: AI Agents Will Outnumber Humans at Work

💡 Shared Insight

The Death of Photoshop: How AI Makes Everyone a Designer

After decades of Adobe Photoshop being the gatekeeper of professional image editing, AI is fundamentally changing who can create visual content.

Professional designers spent years mastering layers, masks, selection tools, and complex workflows. This expertise became a moat around the industry, keeping design work in the hands of trained professionals while everyone else struggled with simplified consumer alternatives. But the recent releases of Google's "Nano Banana" and Alibaba's Qwen-Image-Edit signal the beginning of the end for traditional design barriers.

Nano Banana: Google's Game-Changer

Google's recently announced Gemini 2.5 Flash Image (nicknamed "Nano Banana") is, in the company's words, its "state-of-the-art image generation and editing model." The model focuses on keeping results consistent when editing photos of people and pets, allowing users to change outfits, blend photos, and apply styles from one image to another.

Early users have been "going bananas" over the tool, which Google claims is now "the top-rated image editing model in the world."

Consider what this means: A small business owner can now describe the perfect product shot they envision, and Nano Banana will create it. A social media manager can instantly generate variations of content without hiring a designer.

The Hidden Gem: Qwen-Image-Edit Works Locally

While Google's cloud-based solution grabs headlines, Alibaba's Qwen-Image-Edit represents an even more disruptive force. Qwen-Image-Edit is a 20B parameter image editing model that runs on your own hardware. Released quietly in August 2025, this tool is flying under the radar despite offering capabilities that rival or exceed those of cloud-based competitors like Nano Banana.

The importance of local deployment cannot be overstated. While Google's Nano Banana requires internet connectivity and operates within Google's ecosystem, Qwen-Image-Edit offers complete independence. Users maintain full control over their data, face no usage restrictions, and pay no ongoing fees. For businesses handling sensitive visual content, this is a fundamental shift: images can be created and edited without ever leaving their own machines.

The Implications

For Businesses: Small companies can now produce marketing materials that previously required expensive design agencies. Product photography, social media content, and promotional graphics become as simple as describing your vision.

For Content Creators: YouTubers, bloggers, and social media influencers can generate unlimited variations of visual content without artistic skills or expensive software subscriptions.

For Education: Students and researchers can create compelling visual presentations without learning complex software, focusing on ideas rather than technical execution.

For Personal Use: Family photos can be enhanced, backgrounds changed, and creative projects completed by anyone with imagination.

The Professional Design Response

This doesn't mean professional designers are obsolete. Rather, their role is evolving. The value is shifting from technical execution to creative direction, brand strategy, and complex problem-solving. Designers who embrace these AI tools as creative partners rather than threats will find themselves empowered, able to iterate faster and explore more creative possibilities than ever before.

Conclusion: Design Democracy

AI is breaking down the barriers of professional design. What once required years of training is now accessible to anyone with an idea.

For businesses and individuals, this means more creative freedom without big budgets or technical hurdles. For traditional designers, it means adapting fast to stay relevant.

The real question isn’t whether tools like Photoshop are outdated; it’s whether you’re ready for a world where everyone can be a designer. The future of creativity is here, and it’s only getting better.

What are your thoughts on AI democratizing design? Have you tried any of these new AI image editing tools? Share your experiences and predictions in the comments below.

📰 AI Update

Seed-OSS-36B-Instruct: A Flexible Thinking Control Model from ByteDance

ByteDance released Seed-OSS-36B-Instruct, a 36-billion-parameter model that puts users in control of the AI reasoning process through its innovative thinking budget feature. Unlike traditional models that either think deeply or respond directly, this model lets you specify exactly how many tokens the AI should spend on internal reasoning before giving an answer, making it incredibly adaptable for different tasks.
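To make the idea of a thinking budget concrete, here is a minimal sketch of how an application might pick a budget per task before calling such a model. Everything in it is an assumption for illustration: the task names, the token numbers, and the request field names are hypothetical and are not taken from ByteDance's documentation.

```python
# Hypothetical task-to-budget table; the numbers are illustrative only.
THINKING_BUDGETS = {
    "chitchat": 0,        # 0 is used here to mean "answer directly, no reasoning"
    "summarize": 512,
    "code_review": 2048,
    "math_proof": 8192,
}


def pick_thinking_budget(task_type: str, default: int = 1024) -> int:
    """Return how many reasoning tokens to allow for a given task type."""
    budget = THINKING_BUDGETS.get(task_type, default)
    if budget < 0:
        raise ValueError("thinking budget must be non-negative")
    return budget


def build_request(prompt: str, task_type: str) -> dict:
    """Bundle a prompt with its budget; the field names are made up for this sketch."""
    return {"prompt": prompt, "thinking_budget": pick_thinking_budget(task_type)}
```

The point of the design is that the budget becomes an ordinary parameter: cheap tasks get little or no reasoning, hard tasks get more, and the caller decides rather than the model.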

ByteDance trained the model with just 12 trillion tokens and achieved competitive performance across knowledge, reasoning, math, and coding benchmarks, often matching or surpassing models with similar parameter counts. The model is tuned for agentic tasks like tool use and supports a huge context length of 512K tokens for processing extensive documents and conversations.

Command A Translate: Cohere's 111B Translation Specialist

Cohere released Command A Translate, a 111-billion-parameter model specifically fine-tuned for high-quality translation across 23 languages. This is another step toward specialized AI tools that excel at narrow but crucial tasks. Unlike general-purpose models that handle translation as one of many capabilities, this model was tuned with a single goal: delivering state-of-the-art translation quality.

The model's 8K input and output context length makes it practical for many real-world translation tasks, from business documents to creative content. As businesses increasingly need reliable translation for sensitive documents, a fully open-source option would be welcome. As with other models from Cohere, however, this one is free for non-commercial use only.

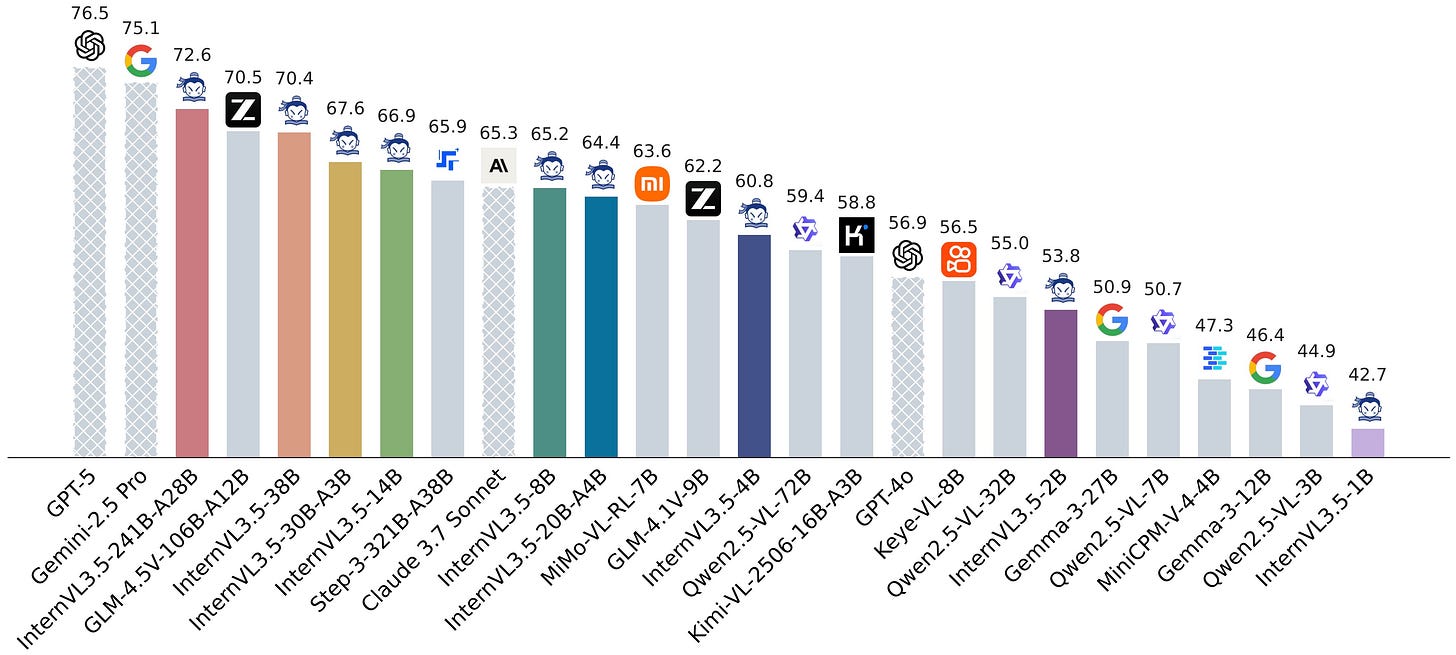

InternVL3.5: Multimodal Model Family from Shanghai AI Lab

OpenGVLab, a research group from Shanghai AI Laboratory, released the InternVL3.5 family, a series of multimodal models ranging from 1B to 241B parameters that significantly advances reasoning capabilities and inference efficiency. The flagship 241B-A28B model achieves state-of-the-art results among open-source multimodal models, rivaling commercial systems like GPT-5. The family supports novel capabilities like GUI interaction. All models are released under the Apache 2.0 License and are free to use for both personal and commercial tasks.

The Decoupled Vision-Language Deployment strategy enables up to 4.05x faster inference by separating vision and language processing across GPUs, making even larger models practical for real-world applications.

Hermes-4: Nous Research's Uncensored Models

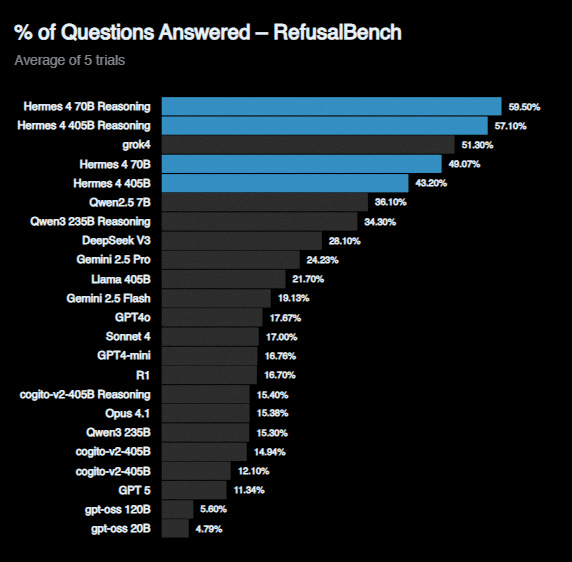

Nous Research has unveiled Hermes-4, a pair of open-source models based on Llama-3.1. The flagship 405B parameter model and its 70B counterpart introduce a hybrid reasoning mode with explicit <think> tags that enable massive improvements in math, code, STEM, logic, and creative tasks while maintaining general assistant quality. What sets Hermes-4 apart is its focus on user alignment: being maximally helpful without censorship.
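If you want to show users only the final answer from a model that wraps its reasoning in <think> tags, a small parser is enough. The sketch below assumes the reasoning appears as plain `<think>...</think>` blocks inside the response text; the function name `split_reasoning` is hypothetical and not part of any Nous Research tooling.

```python
import re

# Matches one <think>...</think> block, including line breaks inside it.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(response: str) -> tuple[str, str]:
    """Separate the reasoning inside <think> tags from the visible answer."""
    reasoning = "\n".join(part.strip() for part in THINK_BLOCK.findall(response))
    answer = THINK_BLOCK.sub("", response).strip()
    return reasoning, answer


reasoning, answer = split_reasoning("<think>2 + 2 is 4.</think>\nThe answer is 4.")
print(answer)
```

A response without any <think> block passes through unchanged, with an empty reasoning string.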

🌟 Media Recommendation

Podcast: AI Agents Will Outnumber Humans at Work

In a recent episode of Lenny's Podcast, Asha Sharma, CVP of AI Platform at Microsoft, shares her bold prediction for the "agentic society": a future where AI agents will outnumber human employees in companies by 2026.

Based on her insights from building AI products with more than 15,000 companies, Sharma explains how traditional org charts are evolving into dynamic "work charts" where tasks are distributed across human-AI networks rather than rigid hierarchies. She describes a world where AI agents handle routine work, freeing humans for creative and strategic roles, fundamentally reshaping how we think about productivity and collaboration.

Sharma provides concrete examples from Microsoft's own transformation and insights into how companies can prepare for this shift. If you're curious about the future of work in an AI-driven world, this is a podcast episode you should listen to.

Disclaimer: This newsletter is written with the aid of AI. I use AI as an assistant to generate and optimize the text. However, the amount of AI used varies depending on the topic and the content. I always curate and edit the text myself to ensure quality and accuracy. The opinions and views expressed in this newsletter are my own and do not necessarily reflect those of the sources or the AI models.