🤝 Master the art of AI conversations in 5 minutes

Dear curious mind,

I was on vacation last week and didn't write a newsletter issue, but the open-source AI space certainly didn't take a break. The pace of open releases is still accelerating, and I had to pick the most noteworthy developments from an overwhelming number of announcements.

It is fascinating to watch two entirely different philosophies emerge in AI model development. While the big cloud providers are pushing toward massive, do-everything models that can handle any task you throw at them, the open-source community is mostly heading in the opposite direction: smaller, more specialized models that excel at specific tasks. Today, we take a closer look at how to extract the most value out of the do-everything models.

In this issue:

💡 Shared Insight

A Practical Guide to Get Better AI Replies

📰 AI Update

DeepSeek V3.1: The AI Architecture Debate Continues

GLM-4.5V: Open-Source Vision AI Takes a Giant Leap

NuMarkdown-8B-Thinking: A Reasoning Model That Converts PDFs to Markdown

Roblox Sentinel: Open-Source AI Guards Against Child Predators

Matrix-Game 2.0: Open-Source Strikes Back in Interactive AI

Jan-v1-4B: Why Smaller Models Might Be the Smarter Choice

Gemma 3 270M: When Tiny Models Pack a Specialized Punch

Qwen-Image-Edit: The Missing Piece of AI Image Generation

💡 Shared Insight

A Practical Guide to Get Better AI Replies

Even though I'm a strong advocate for open-source AI models, I completely understand why most people stick with a cloud-based option like ChatGPT or Claude. They're convenient, powerful, and just work. I still use them myself, despite my best intentions to switch completely to open models. But before diving into that transition (which I'll cover in a future issue), let me share some insights about getting the most out of these cloud-based AI assistants.

The big tech companies are pushing toward a future where one giant model can handle everything you throw at it. The key differentiator isn't which model you use anymore, but how you communicate with it. And here's the beautiful part: you do this in natural language. No coding required. Anyone can do it. But there are some tricks that can dramatically improve your results.

Understanding Why AI Makes Things Up

You've probably encountered AI hallucinations (those confident-sounding but completely fabricated responses). Here's one major reason they happen: during training, these models are fine-tuned using human feedback to make users happy. If an AI admits it doesn't know something, you're disappointed. But if it generates a plausible-sounding answer, even a wrong one, you might leave satisfied (at least initially). The AI learned that appearing helpful often beats being truthful.

The latest models, like GPT-5 and Claude Opus 4.1, hallucinate far less than their predecessors, but it still happens. You need to be aware of that and not accept outputs blindly.

Getting Better, More Reliable Answers

Here's what actually helps reduce hallucinations and improve response quality:

Ask for sources and web searches. Most modern AI assistants can search the web on their own, but you can explicitly request this in your prompt. For questions about recent events or rapidly changing topics, try adding something like "Please search the web for current information and cite your sources."

Provide specific context. If you're troubleshooting a problem, don't just describe it in a few words. Add the context that makes your request specific so you get a response tailored to your situation. This could be the version number of the software you're using or even screenshots of its settings dialog.

Let the AI ask questions. Add this to your prompts: "If you need any clarification to give me the best answer, please ask." This simple addition can prevent the AI from making assumptions that lead to irrelevant responses.

Use full sentences. This might sound strange, but talk to the AI like you would to a knowledgeable colleague. Instead of typing keywords like in a Google search, explain your situation naturally. "Create a weekly meal plan for a family of four, including one vegetarian, and I need to keep the grocery budget under $100" works far better than "meal plan vegetarian cheap."
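No coding is required for any of this, but if you send similar requests often (from a script or a saved template), the tips above can be captured in a small reusable helper. The sketch below is a hypothetical Python function; the name `build_prompt` and its parameters are my own, not part of any tool, and it only assembles the text without calling an AI service.

```python
from typing import Optional


def build_prompt(question: str,
                 context: Optional[list[str]] = None,
                 want_web_search: bool = False,
                 allow_questions: bool = True) -> str:
    """Assemble a prompt following the tips above (hypothetical helper)."""
    parts = [question.strip()]
    if context:
        # Specific details (versions, settings, constraints) narrow the answer.
        parts.append("Context:\n" + "\n".join(f"- {item}" for item in context))
    if want_web_search:
        # Explicitly ask for fresh information and sources.
        parts.append("Please search the web for current information and cite your sources.")
    if allow_questions:
        # Invite clarifying questions instead of silent assumptions.
        parts.append("If you need any clarification to give me the best answer, please ask.")
    return "\n\n".join(parts)


prompt = build_prompt(
    "Create a weekly meal plan for a family of four, including one vegetarian.",
    context=["The grocery budget must stay under $100."],
)
print(prompt)
```

The result is simply a full-sentence request followed by context and the clarification invitation, which you can paste into any chat assistant.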

The Magic Is in the Conversation

What makes this technology almost magical is that all of this happens through natural language. You don't need to learn special commands or syntax. You just need to communicate clearly, just as you would with a human expert who's eager to help but needs proper context to do so effectively.

The key insight? Treat AI as a brilliant but sometimes overeager assistant who needs clear direction. Give it context, be specific about what you need, encourage it to seek current information, and don't hesitate to let it ask clarifying questions. Most importantly, maintain a healthy skepticism about its responses, especially for critical decisions.

These cloud-based models are incredibly powerful tools, but like any tool, they work best when you understand both their capabilities and their limitations. Start implementing these strategies in your next AI conversation, and you'll likely be surprised at how much better the responses become.

📰 AI Update

DeepSeek V3.1: The AI Architecture Debate Continues

DeepSeek just released V3.1, a 671-billion-parameter hybrid model that supports both a "thinking" and a "non-thinking" mode. In practice, this means you can choose whether the model first works through a reasoning process or gives you a quick, direct answer, depending on what you need. The performance gains on coding and math benchmarks in its thinking mode are impressive and, according to DeepSeek's published benchmarks, comparable to DeepSeek R1.
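From the application side, switching modes is a per-request choice. As a minimal sketch, assuming DeepSeek's hosted, OpenAI-compatible API, where (per DeepSeek's announcement at the time of writing) the model name `deepseek-chat` selects V3.1's non-thinking mode and `deepseek-reasoner` its thinking mode, the request body differs only in the model name. The helper `chat_payload` is hypothetical, and the code only builds the payloads; it sends nothing.

```python
import json


def chat_payload(question: str, think: bool) -> dict:
    """Build a chat-completions request body for the chosen mode.

    Assumption: "deepseek-reasoner" = thinking mode, "deepseek-chat" = non-thinking mode.
    """
    return {
        "model": "deepseek-reasoner" if think else "deepseek-chat",
        "messages": [{"role": "user", "content": question}],
    }


quick = chat_payload("What is the capital of France?", think=False)
deep = chat_payload("Prove that the square root of 2 is irrational.", think=True)
print(json.dumps(quick, indent=2))
print(deep["model"])
```

With the official `openai` Python client configured with `base_url="https://api.deepseek.com"`, a body like this can be passed as `client.chat.completions.create(**payload)`; check DeepSeek's API documentation for the current model names before relying on them.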

What's fascinating is that DeepSeek is following the same path as OpenAI and Anthropic: building one massive model that can handle both quick responses and deep reasoning. This contrasts with teams like Qwen, who have recently been splitting these capabilities into separate, specialized models. We're watching two entirely different philosophies: the "one model to rule them all" approach versus the "right tool for the right job" approach. From my perspective, there is room for both approaches to stay relevant.

GLM-4.5V: Open-Source Vision AI Takes a Giant Leap

Chinese AI company Z.ai just released GLM-4.5V, a 106-billion-parameter vision-language model that's making waves in the open-source community. What makes this particularly exciting is its "thinking mode," which improves results when analyzing research documents and understanding complex image content.

The game-changer here is accessibility: vision models like GLM-4.5V unlock entirely new applications where you can share what you're seeing and get intelligent reasoning about images, videos, and documents. Thanks to its mixture-of-experts design, only 12 billion parameters are active for each token, which keeps inference fast enough that running it locally is realistic, although holding the full set of weights still takes high-end hardware and usually quantization. This democratization of advanced vision AI means developers and researchers worldwide can now build multimodal applications without relying on expensive API calls or non-private cloud services.
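To see why the 12-billion-active figure helps with speed but not with memory, here is a back-of-the-envelope calculation. It assumes the 106B total / 12B active split mentioned above and counts only the weights, ignoring the KV cache, activations, and runtime overhead, so real requirements are higher.

```python
# Rough weight-memory estimate for a mixture-of-experts model like GLM-4.5V.
# Assumptions: 106B total / 12B active parameters; weights only, no overhead.
TOTAL_PARAMS = 106e9
ACTIVE_PARAMS = 12e9


def weight_memory_gb(params: float, bits_per_param: float) -> float:
    """Memory needed to hold the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return params * bits_per_param / 8 / 1e9


active_share = ACTIVE_PARAMS / TOTAL_PARAMS
print(f"Active per token: {active_share:.1%}")
for bits in (16, 8, 4):
    print(f"{bits:>2}-bit weights: {weight_memory_gb(TOTAL_PARAMS, bits):.0f} GB")
```

At 16-bit precision the weights alone come to about 212 GB, and even at 4-bit about 53 GB, because every expert has to be loaded even though each token only uses roughly 11% of the parameters. That is why quantization (and, in some runtimes, offloading experts to system RAM) is what makes local use practical, while the small active share is what keeps it fast.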

NuMarkdown-8B-Thinking: A Reasoning Model That Converts PDFs to Markdown

The rise of specialized AI models continues with NuMarkdown-8B-Thinking, an innovative reasoning-based vision-language model specifically fine-tuned for converting documents to clean Markdown. Unlike general-purpose models, this 8B-parameter model was created for one task: analyzing the layout and structure of PDF documents and converting them to high-quality Markdown.

What's particularly interesting is its "thinking tokens" approach: the model reasons about document structure before generating output, which seems to improve the results. This trend toward hyper-specialized models represents a shift in AI development, where targeted fine-tuning for a specific domain can outperform larger, general-purpose alternatives.
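Because the reasoning and the final document come out of the same generation, an application has to separate them before saving the Markdown. The sketch below assumes the reasoning is wrapped in `<think>...</think>` and the Markdown in `<answer>...</answer>`; those tag names are an assumption to check against the model card, and `split_reasoning` is a hypothetical helper.

```python
import re


def split_reasoning(raw: str) -> tuple[str, str]:
    """Separate the reasoning trace from the final Markdown (tag names assumed)."""
    think = re.search(r"<think>(.*?)</think>", raw, flags=re.DOTALL)
    answer = re.search(r"<answer>(.*?)</answer>", raw, flags=re.DOTALL)
    reasoning = think.group(1).strip() if think else ""
    if answer:
        markdown = answer.group(1).strip()
    else:
        # Fallback: keep everything that is not part of the reasoning block.
        markdown = re.sub(r"<think>.*?</think>", "", raw, flags=re.DOTALL).strip()
    return reasoning, markdown


raw_output = (
    "<think>Two columns; the bold line at the top is a title.</think>"
    "<answer># Quarterly Report\n\n| Region | Sales |\n|---|---|\n| North | 120 |</answer>"
)
reasoning, markdown = split_reasoning(raw_output)
print(markdown)
```

Keeping the reasoning around (instead of discarding it) can also help when debugging why a table or heading was converted the way it was.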

Roblox Sentinel: Open-Source AI Guards Against Child Predators

Gaming platform Roblox just open-sourced Sentinel, their production AI system that processes 6 billion chat messages daily to detect early signs of child endangerment. Unlike traditional content filters that flag individual words, Sentinel uses contrastive learning to analyze conversation patterns over time, tracking whether users' daily interactions trend toward concerning behavior clusters.
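Roblox's own Sentinel code is open source; the sketch below is not that code. It is a simplified, hypothetical illustration of the idea described above: embed each message, score it by whether it sits closer to known concerning examples than to benign ones (the contrastive part), average the scores per day, and flag users whose daily scores trend upward. The toy 2-D vectors, the function names, and the flagging rule are all my own assumptions.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def contrast_score(msg, concerning, benign) -> float:
    """Positive when a message is closer to concerning examples than to benign ones."""
    closest_bad = max(cosine(msg, c) for c in concerning)
    closest_ok = max(cosine(msg, b) for b in benign)
    return closest_bad - closest_ok


def trend(values: list[float]) -> float:
    """Least-squares slope over days; needs at least two days of data."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    den = sum((x - mean_x) ** 2 for x in range(n))
    return num / den


# Toy 2-D "embeddings"; a real system would use a learned text encoder.
concerning = [[1.0, 0.0]]
benign = [[0.0, 1.0]]
days = [
    [[0.0, 1.0], [0.1, 1.0]],  # day 1: ordinary chat
    [[0.5, 1.0]],              # day 2: drifting
    [[1.0, 0.2], [1.0, 0.0]],  # day 3: close to concerning examples
]
daily = [sum(contrast_score(m, concerning, benign) for m in msgs) / len(msgs) for msgs in days]
slope = trend(daily)
flag_for_review = slope > 0 and daily[-1] > 0  # hypothetical rule; humans review flags
print([round(d, 2) for d in daily], round(slope, 2), flag_for_review)
```

Scoring the trajectory rather than single messages is what lets this kind of approach catch slow escalation that a per-message word filter would miss.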

The system has already helped Roblox submit approximately 1,200 reports of potential child exploitation to authorities in the first half of 2025. When Sentinel flags a case, trained experts review it, and their decisions feed back into the system as updated training data that further refines the model.

Matrix-Game 2.0: Open-Source Strikes Back in Interactive AI

While Google DeepMind made headlines with its Genie 3 interactive world model, Skywork just showed that the gap between closed and open-source AI is rapidly closing. Matrix-Game 2.0 delivers a similar mind-blowing capability (generating interactive game worlds in real time at 25 FPS) but with one crucial difference: you can actually run it on your own hardware. The model can generate minutes of continuous, interactive video in which you move characters, explore environments, and interact with AI-generated worlds that respond to your keyboard and mouse inputs in real time.
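Broadly, real-time world models of this kind generate video autoregressively: each new frame is predicted from a window of recent frames plus the player's current input, and at 25 FPS every frame has to be ready within 40 ms. The sketch below only illustrates that loop; `generate_frame` is a hypothetical stand-in, not Matrix-Game 2.0's actual API, and the four-frame context window is an arbitrary assumption.

```python
from collections import deque

FPS = 25
FRAME_BUDGET_MS = 1000 / FPS  # time available per frame for real-time playback


def generate_frame(recent_frames: list[dict], action: str, step: int) -> dict:
    """Hypothetical stand-in for the model: a real one would render pixels
    conditioned on the recent frames and the player's input."""
    return {"step": step, "action": action, "context_len": len(recent_frames)}


def play(actions: list[str], context_size: int = 4) -> list[dict]:
    recent = deque(maxlen=context_size)  # sliding window of past frames
    video = []
    for step, action in enumerate(actions):
        frame = generate_frame(list(recent), action, step)
        recent.append(frame)  # the new frame becomes context for the next one
        video.append(frame)
    return video


inputs = ["W", "W", "A", "mouse_left", "D", "S"]
video = play(inputs)
print(FRAME_BUDGET_MS, len(video), video[-1])
```

The fixed-size window is what keeps the cost per frame constant, which is essential for generating minutes of video instead of a few seconds.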

This release is more than an impressive tech demo. When you can run interactive world models locally, you're no longer just watching a controlled demonstration; you have a foundation for entirely new applications like rapid game prototyping, AI agent training environments, and autonomous driving simulations.

Jan-v1-4B: Why Smaller Models Might Be the Smarter Choice

The Jan team just released Jan-v1-4B, a compact 4-billion-parameter model that demonstrates a fascinating point about AI architecture: you don't need to cram the world's knowledge into your model to make it effective. Instead of trying to memorize everything like massive models do, Jan-v1-4B focuses on reasoning and lets you connect it to external knowledge sources (like web search via MCP servers). This approach mirrors what OpenAI attempted with its open-weight gpt-oss models, though even the smaller gpt-oss version uses 20 billion parameters to achieve comparable results.
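The "reason locally, look things up externally" pattern boils down to fetching fresh snippets first and handing them to the small model as context. As a minimal sketch, `answer_with_search` is a hypothetical helper, and `fake_search` and `fake_model` are offline stand-ins for an MCP web-search tool and a local 4B model; none of them is Jan's actual API.

```python
from typing import Callable


def answer_with_search(question: str,
                       search: Callable[[str], list[str]],
                       model: Callable[[str], str]) -> str:
    """Retrieve fresh snippets first, then let a small model reason over them."""
    snippets = search(question)
    sources = "\n".join(f"[{i}] {s}" for i, s in enumerate(snippets, start=1))
    prompt = ("Answer using only the numbered sources below and cite them.\n"
              f"{sources}\n\nQuestion: {question}")
    return model(prompt)


# Hypothetical offline stand-ins for an MCP search tool and a local model.
def fake_search(query: str) -> list[str]:
    return [f"First result for: {query}", f"Second result for: {query}"]


def fake_model(prompt: str) -> str:
    # A real model would reason over the sources; this stub only counts them.
    n_sources = sum(1 for line in prompt.splitlines() if line.startswith("["))
    return f"Answer based on {n_sources} sources"


print(answer_with_search("What changed in the latest release?", fake_search, fake_model))
```

Passing the search and model functions in as parameters keeps the sketch honest about what is swappable: the knowledge lives in the tool, and the model only needs to reason well over what it is given.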

What makes Jan-v1-4B particularly compelling is its performance: it reports 91.1% accuracy on the SimpleQA question-answering benchmark while reportedly excelling at creative writing tasks, where pure knowledge retrieval matters less than reasoning and language fluency. This suggests we're seeing a shift toward specialized, efficient models that do one thing exceptionally well rather than trying to be encyclopedias. When you can run a model this capable locally and supplement it with real-time information access, you get the best of both worlds: the privacy and speed of a local model plus up-to-date knowledge, without the computational overhead of massive parameter counts.

Gemma 3 270M: When Tiny Models Pack a Specialized Punch

Google just released Gemma 3 270M, proving that sometimes the smallest models can deliver the biggest impact for specific use cases. With just 270 million parameters (less than a tenth of the 4 billion we've often been seeing in small models), this ultra-compact model isn't designed to be a general-purpose powerhouse out of the box. Instead, it's built as a foundation for fine-tuning into highly specialized, task-specific models that can run on extremely low-end hardware while delivering impressive performance for narrow applications like sentiment analysis, entity extraction, or creative writing tasks.
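Turning Gemma 3 270M into a specialist starts with a small, task-specific dataset. As a minimal sketch, assuming a sentiment-analysis task and the conversational `messages` format (a list of `role`/`content` entries) that fine-tuning tools such as Hugging Face TRL accept, the training data could be prepared like this. The prompt wording, labels, and examples are illustrative assumptions, and the training step itself is not shown.

```python
import json

# Toy labeled examples; a real fine-tune would use hundreds or thousands.
examples = [
    ("The battery lasts all day and charges quickly.", "positive"),
    ("The screen cracked after one week.", "negative"),
    ("The package arrived on Tuesday.", "neutral"),
]


def to_chat_record(text: str, label: str) -> dict:
    """One training example in the conversational 'messages' format."""
    return {"messages": [
        {"role": "user",
         "content": f"Classify the sentiment as positive, negative, or neutral: {text}"},
        {"role": "assistant", "content": label},
    ]}


# One JSON object per line (JSONL), a common input format for fine-tuning scripts.
jsonl = "\n".join(json.dumps(to_chat_record(text, label)) for text, label in examples)
print(jsonl.splitlines()[0])
```

Keeping the assistant's answer to a single label word is a deliberate choice: a tiny model learns a narrow, consistent output format much more reliably than free-form explanations.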

The real magic lies in its efficiency, which makes it ideal for always-on applications or devices with tight power constraints. This approach represents a shift toward purposeful AI: instead of trying to build one model that does everything, you can now create tiny specialists that excel at specific tasks while running on hardware that couldn't even load larger models.

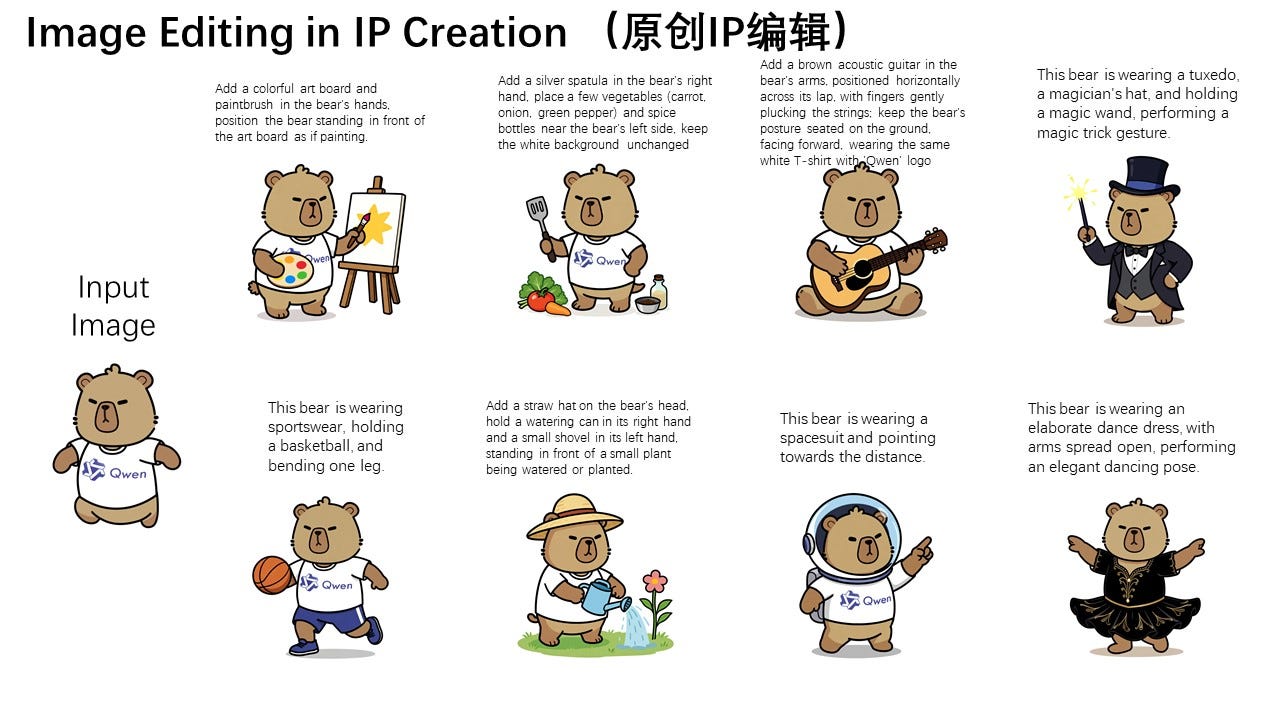

Qwen-Image-Edit: The Missing Piece of AI Image Generation

While AI image generation has become incredibly powerful at creating images from text descriptions, anyone who's used these tools knows the frustration: you get 95% of what you wanted, but that one character's pose is slightly off, or the text in the image has a typo, or the background color isn't quite right. Regenerating the entire image usually gives you something entirely different, losing all the good parts. Qwen-Image-Edit solves this exact problem by letting you make precise, targeted changes while keeping everything else intact. You can rotate objects, fix text (in both English and Chinese while preserving the original font style), change clothing, or modify backgrounds without starting over.

What makes this particularly exciting is that it's the perfect complement to Qwen-Image, which we covered in the last issue. Together, they form a complete open-source image workflow: generate your initial image with Qwen-Image, then fine-tune the details with Qwen-Image-Edit. Having these capabilities in open-weight models means you're not locked into expensive cloud services or waiting for API responses. You can iterate on your images locally, make as many tweaks as needed, and maintain complete control over your creative process.

Disclaimer: This newsletter is written with the aid of AI. I use AI as an assistant to generate and optimize the text. However, the amount of AI used varies depending on the topic and the content. I always curate and edit the text myself to ensure quality and accuracy. The opinions and views expressed in this newsletter are my own and do not necessarily reflect those of the sources or the AI models.