🤝 Why AI companions are game-changers

Dear curious mind,

Picture working with an AI partner that understands your tasks, offers live feedback, and boosts your productivity without interruptions. This issue dives into how AI companions are reshaping work and play, along with open-source AI developments and an expert insight.

In this issue:

💡 Shared Insight

AI Companions Transform How We Play and Work

📰 AI Update

Apertus: Switzerland's Fully Open Multilingual Model

USO: ByteDance’s Model for Style and Subject Image Edit

Hunyuan MT: Tencent's Small Translation Model

🌟 Media Recommendation

Podcast: The AI Future You're Not Ready For

💡 Shared Insight

AI Companions Transform How We Play and Work

Imagine chatting with an AI assistant while you game or work, getting real-time tips, feedback, and help without pausing your activity. This still sounds like science fiction, but it is closer than you might think.

Back in April this year, I discovered a demo of an AI companion named Vector Companion on Reddit. The shared video shows a fully local, open-source multimodal AI that can see your screen, hear your voice, and respond instantly. It switches modes on the fly, offering search features and deep analysis to assist as a general-purpose companion.

This preview hints at a bigger shift: AI companions will become integral to gaming and productivity. Instead of solo playthroughs or isolated work sessions, you will have a smart partner that understands context, provides live guidance, and adapts to your needs. For gamers, this means personalized strategies or instant explanations of complex mechanics. For workers, it could mean real-time collaboration on tasks, error detection, or creative brainstorming.

If you trust Google, you can explore a similar cloud-based experience by sharing your computer screen in Google AI Studio. Both the model selection and the media resolution are limited, and the low resolution is especially unfortunate on a high-resolution display. Still, the streaming mode in Google AI Studio is an easy and currently free way to try talking to an AI assistant while you play or work at a computer.

The way we interact with technology is evolving, and in my view, AI companions are the most likely way we will use computers in the future.

📰 AI Update

Apertus: Switzerland's Fully Open Multilingual Model

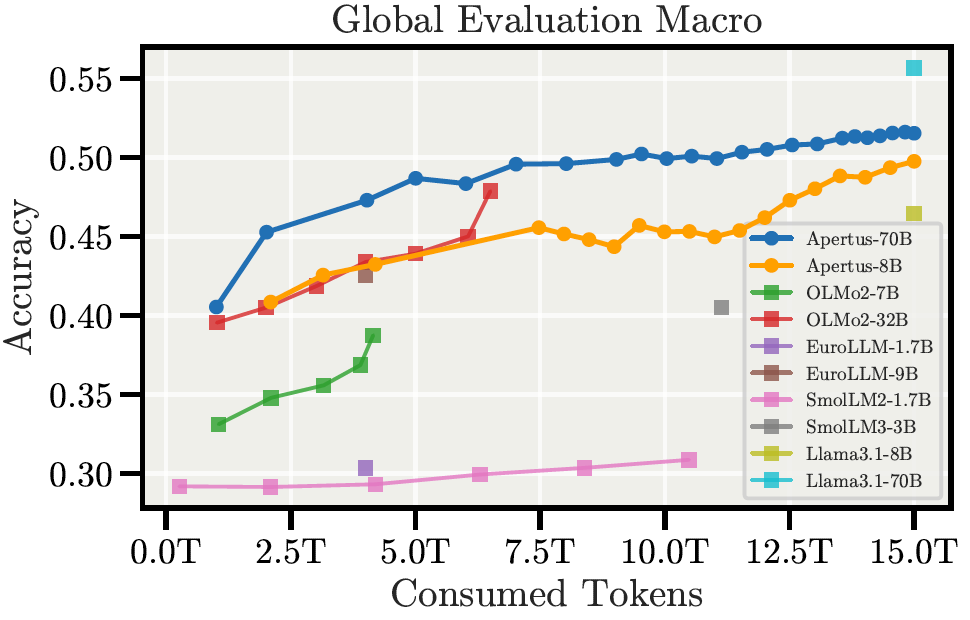

Switzerland has released Apertus, its first large-scale open-source multilingual language model, marking a milestone in European AI sovereignty. Developed through a collaboration between EPFL, ETH Zurich, and the Swiss National Supercomputing Centre, this model stands out for its complete transparency. The team has released everything from architecture and training data to model weights and documentation under the Apache 2.0 license.

What makes Apertus particularly compelling is its multilingual focus: it was trained on 15 trillion tokens across 1,811 natively supported languages, using a staged curriculum of web, code, and math data. The 70 billion parameter version supports a context of up to 65,536 tokens and includes agentic capabilities for tool use. The model lags behind closed-source models in performance, but two things are outstanding: the fully open release from a European country and the possibility of data opt-outs even after training.

Available in both 8 billion and 70 billion parameter versions, Apertus positions itself as a foundation for trustworthy AI applications while maintaining compliance with Swiss data protection laws and the EU AI Act.

USO: ByteDance’s Model for Style and Subject Image Edit

ByteDance has dropped USO, another fantastic open-source AI model that makes image creation super flexible. Like the Qwen-Image-Edit we covered in an earlier issue, this one is free and open for everyone to use and improve.

USO solves a big problem in AI image editing: usually, you have to choose between keeping the exact subject (like a person's face) or matching a style (like an artist's painting). This model combines both! It uses smart learning techniques to separate and then remix the content and style of images, so you can create pictures that look exactly the way you want.

Powered by the FLUX model, USO works great with ComfyUI, the popular tool for building AI image workflows. You can run it on a decent gaming PC with 16 GB of VRAM for tasks with one reference image or 18 GB for multiple reference images.

Hunyuan MT: Tencent's Small Translation Model

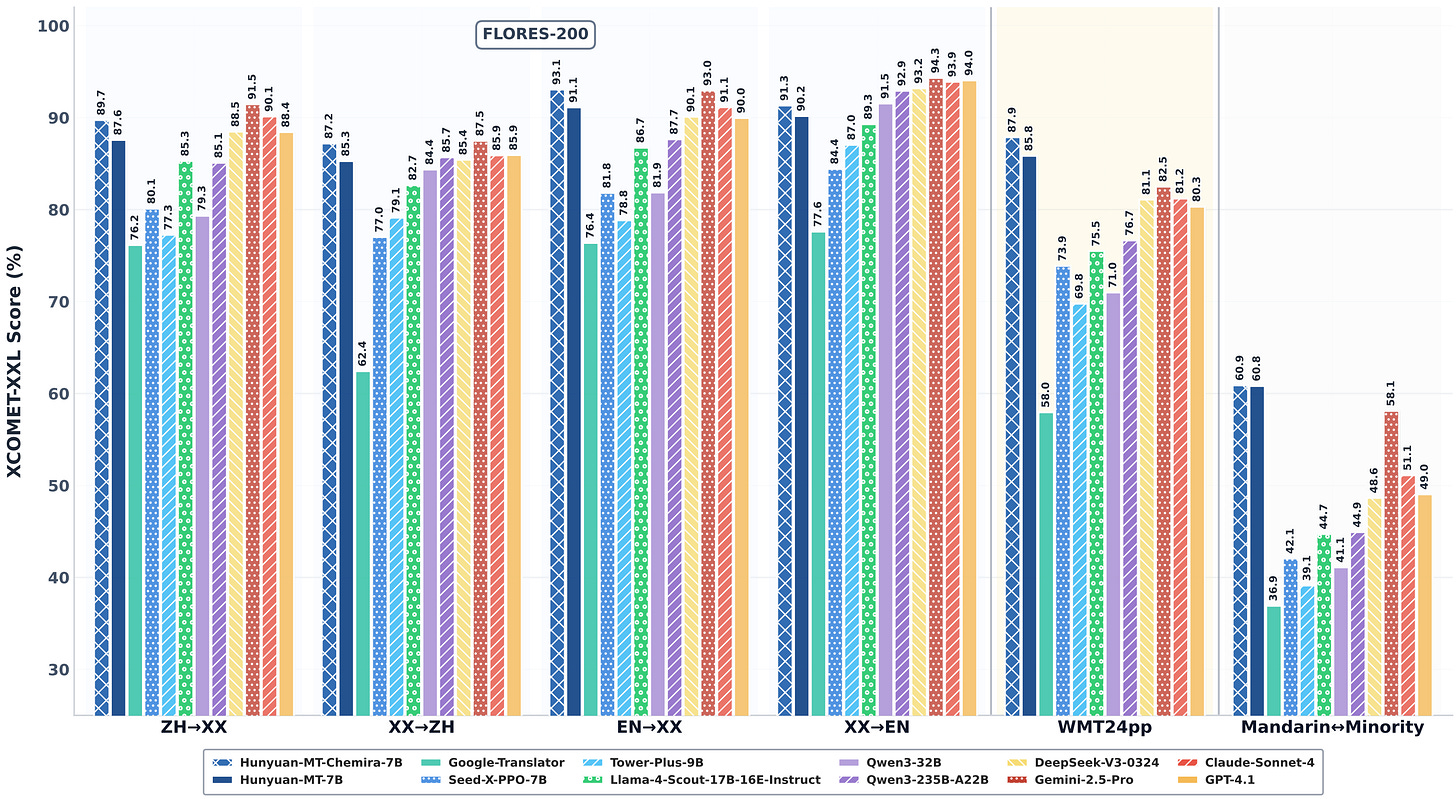

Tencent has released Hunyuan-MT, an open-source translation model. This 7 billion parameter model supports translation between 36 languages, including several Chinese ethnic minority languages.

What sets Hunyuan-MT apart is its impressive performance for such a small model. It delivers industry-leading quality. This makes it a strong competitor to Cohere's Command A Translate, which we covered in the last issue, and it enables high-quality translations on far less powerful and therefore cheaper hardware.

The model comes in two flavors: the base Hunyuan-MT-7B for direct translation and Hunyuan-MT-Chimera, an ensemble version that combines multiple outputs for even better results.

🌟 Media Recommendation

Podcast: The AI Future You're Not Ready For

In an episode of The Next Wave podcast, Microsoft AI CEO Mustafa Suleyman challenges common misconceptions about AI.

He states that the term "hallucination" is misleading. AI's ability to adapt and interpolate between data points is actually a strength, allowing it to create new outputs rather than just recall facts.

Suleyman also emphasizes tool use as the key to AI's power, drawing parallels to how humans manipulate their environment to enhance intelligence.

The conversation dives into how AI will transform work and job security, much like the personal computer did decades ago. Suleyman advises preparing for fundamental changes in what we do and how we live while highlighting opportunities for smaller companies to innovate with AI. This episode offers practical insights for leveraging AI today, from offloading tasks to agents to building products quickly in this new era.

If you are interested in the real potential and challenges of AI, I recommend listening to this episode with Mustafa Suleyman. It provides a clear-eyed view of the AI landscape from one of its leading innovators.

Disclaimer: This newsletter is written with the aid of AI. I use AI as an assistant to generate and optimize the text. However, the amount of AI used varies depending on the topic and the content. I always curate and edit the text myself to ensure quality and accuracy. The opinions and views expressed in this newsletter are my own and do not necessarily reflect those of the sources or the AI models.