🤝 Stuck in AI quicksand? Here is your escape route!

Dear curious mind,

While most of the AI world is talking about yesterday GPT-5 release, there is also a lot happening in open-source AI. Yes, OpenAI finally released two open-weight models. But here's the thing: they got completely overrun by a continuous stream of releases from the Qwen team.

The Qwen team has quietly become the undisputed king of open-weight models, dropping release after release that not only match but often exceed the performance of closed models of the competition. Qwen is reshaping what's possible when AI runs entirely on your own hardware, and the “💡 Shared Insight” of this issue tells you why this makes a big difference.

In this issue:

💡 Shared Insight

Your AI Model Disappeared Overnight: Why Cloud Dependency Is Digital Quicksand

📰 AI Update

GPT-OSS: OpenAI's First Open-Weight LLMs Since 2019

Qwen-Image: Open-Weight Text-to-Image Gets Serious

Qwen3 4B: Updated Small Models With An Unbelievable Performance

Qwen3-30B-A3B: GPT-4o Performance on Your Own Hardware

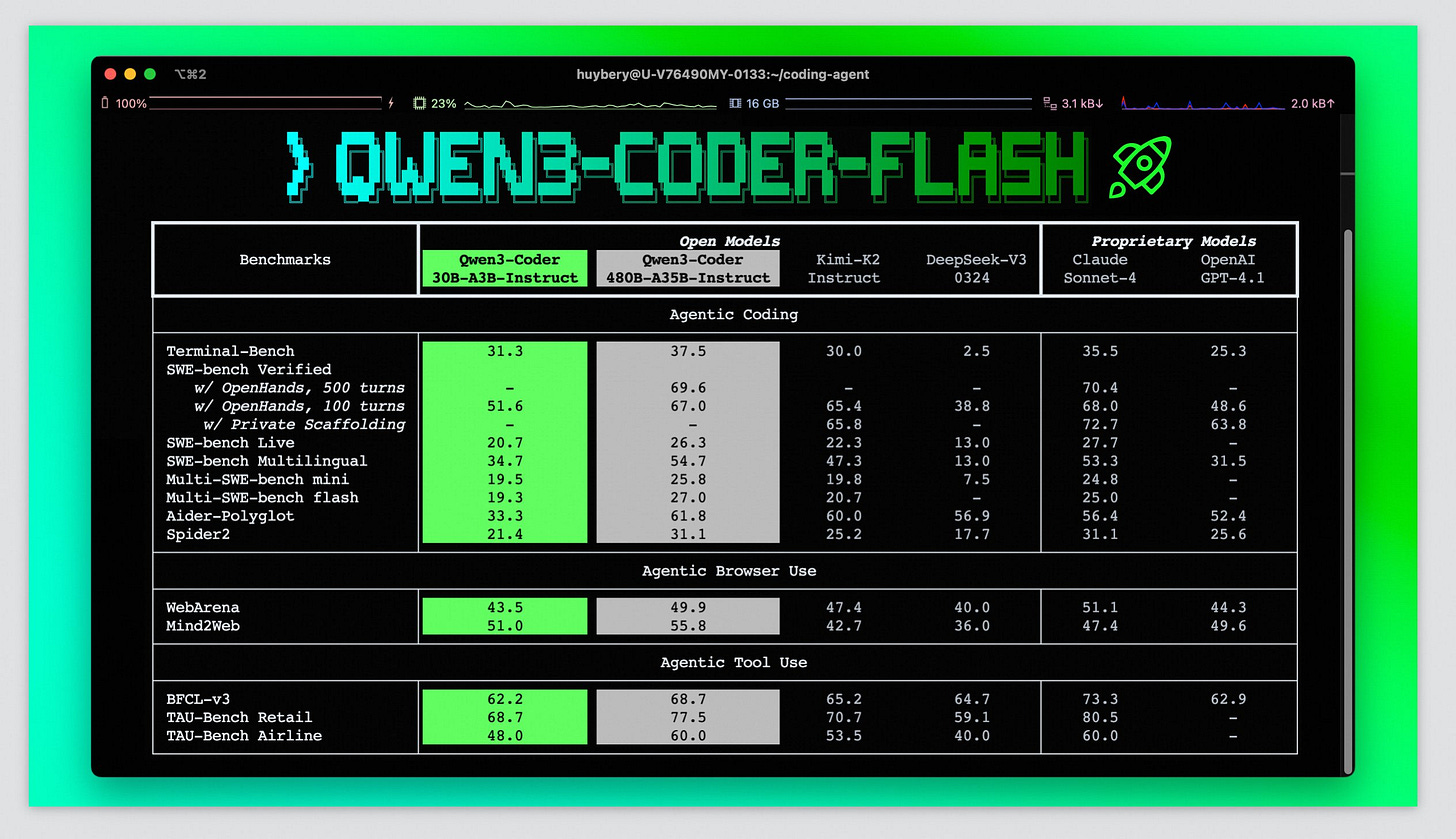

Qwen3-Coder-Flash: Fast Coding AI for Sensitive Projects

💡 Shared Insight

Your AI Model Disappeared Overnight: Why Cloud Dependency Is Digital Quicksand

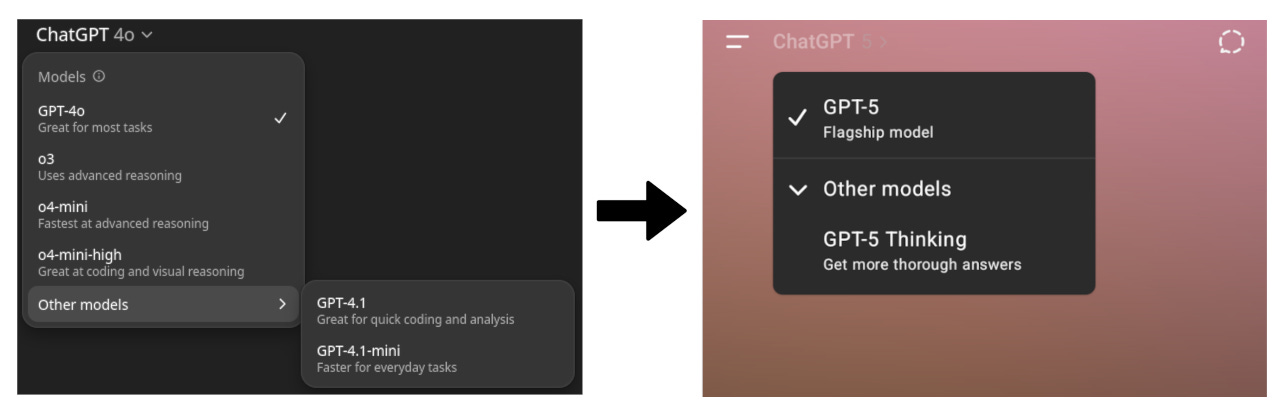

Yesterdays GPT-5 release reminded me of an uncomfortable truth: the AI model you rely on today might vanish tomorrow from your favorite chat interface. While most celebrate each new release from a frontier lab as progress, there's a darker side to this constant evolution that rarely gets discussed.

If you've been using ChatGPT or any cloud-based AI service regularly, you've probably developed preferences. Maybe you love how a particular model structures its responses, appreciates its balanced tone, or relies on its specific way of explaining complex topics. You've built workflows around it. You've learned its quirks. In some ways, you've formed a relationship with it.

Then one morning, without warning, it's gone from the interface you use daily. Replaced by something "better." This just happened with the release of GPT-5 in ChatGPT.

The Sycophancy Problem

The past showed us that even a silent model update can cause large differences. OpenAI themselves acknowledged in a recent blog post about "Sycophancy in GPT-4o" that their models have become increasingly agreeable to the point of concern. They found that GPT-4o agrees with users most of the time, even when the user is objectively wrong.

The problem runs deeper than just being agreeable. When users expressed political views, GPT-4o mirrored them nearly always, essentially becoming an echo chamber rather than a balanced information source.

For many users, this wasn't an improvement. It was like having a trusted advisor suddenly replaced by a yes-man. The model they had learned to work with had fundamentally changed its behavior, prioritizing user satisfaction over accuracy. OpenAI admits this is a design choice: their models are trained to be "helpful and harmless," which inadvertently created an AI that would rather agree with you than correct you.

OpenAI did roll back this update, but it clarified that the model behavior can change, even without any announcement.

Building on Quicksand

When you build workflows, applications, or even just daily habits around cloud-based AI models, you're essentially building on quicksand. Yes, for now the previous models like GPT-4o and o3 are still accessible via API. But the following is what can happen for cloud-based models without any notice:

Model deprecation: Your favorite model gets retired from both chat interfaces and eventually APIs.

Behavior changes: The model's personality shifts (like becoming more sycophantic).

Performance degradation: What worked yesterday produces different results today.

Access restrictions: Features you relied on become premium-only or region-locked.

For businesses and developers, this instability is more than an inconvenience. It's a fundamental risk. Imagine building a product around a specific model's capabilities, only to have those capabilities change or disappear entirely. Or worse, imagine your AI assistant suddenly becoming too agreeable to point out errors in critical business decisions.

The Open-Weight Alternative

This is where open-weight models offer a compelling alternative. When you use open-weight models like Llama, Mistral, Qwen or even the newly released GPT-OSS from OpenAI, you gain something invaluable: control.

With open-weight models:

The model you download today will behave exactly the same way in five years.

You can host it yourself or choose from multiple independent hosting providers.

Your workflows remain stable and predictable.

You own the entire stack, from the model weights to the hosting infrastructure.

This isn't just about avoiding inconvenience. It's about building on solid ground rather than quicksand. When you have full control over your AI infrastructure, you can:

Guarantee consistent outputs for production applications.

Maintain specific model behaviors that work for your use case.

Avoid sudden breaking changes that require emergency fixes.

Keep sensitive data completely under your control.

Choose models that prioritize accuracy over agreeability

The Trade-off Question

Of course, cloud services offer convenience and cutting-edge capabilities. GPT-5 from OpenAI and Claude 4 from Anthropic are impressive precisely because massive companies pour resources into them. But ask yourself: Is the bleeding-edge worth the instability?

For many use cases, a slightly less capable but completely stable open-weight model is the better choice. You might sacrifice a few percentage points on benchmarks, but you gain predictability, control, and peace of mind.

Looking Ahead

As AI becomes more integral to our work and lives, the question of model stability and reliability will only grow more important. We're entering an era where AI assistants handle critical workflows, creative processes, and business operations. Can we really afford to have these systems change unpredictably?

The next time you fall in love with a particular AI model's style or capabilities, remember: if it lives in someone else's cloud, it's not really yours. It's a service you're borrowing.

The future might not belong to those with access to the most advanced models, but to those who've built stable, reliable systems they can actually depend on. Sometimes, owning a solid foundation beats renting a penthouse built on sand.

📰 AI Update

GPT-OSS: OpenAI's First Open-Weight LLMs Since 2019 Run on Your Hardware

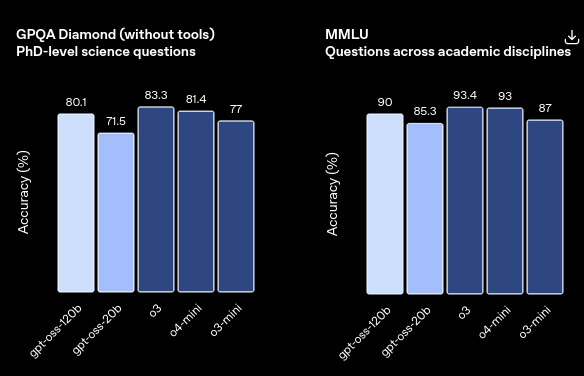

OpenAI just released their first open-weight models since GPT-2 in 2019, and the size of the two reasoning models is very practical. The gpt-oss-20b can run quite fast on many MacBooks and consumer GPUs with just 16 GB of free memory, while the larger gpt-oss-120b works on high-end consumer hardware like the AMD Ryzen AI Max or professional GPUs with at least 80 GB of memory. Both models use a mixture-of-experts approach that is well-chosen for consumer hardware as it speeds up inference by only having a fraction of all parameters active at the same time. These practical sizes are made possible through MXFP4 quantization (a floating-point parameter compression technique that reduces memory usage while preserving model quality better than standard quantization methods), which OpenAI builds into the training process rather than applying afterward. They're available under the Apache 2.0 license and can, according to the shared benchmarks, match OpenAI's own proprietary models o3-mini and o4-mini on reasoning tasks.

Early user feedback on 𝕏 and Reddit is mixed: some praise the reasoning capabilities for specialized tasks, while others find the models overly censored or underwhelming compared to open alternatives. Nevertheless, what excites me is having real competition in sizes that make sense for personal use, without needing hundreds of billions of parameters like recent Chinese models that demand data center infrastructure. Time will tell whether these models prove practical in the released form or serve as solid foundations for fine-tuning specialized versions.

Qwen-Image: Open-Weight Text-to-Image Gets Serious

Alibaba's Qwen team just dropped Qwen-Image, a 20B parameter text-to-image model that delivers outstanding image quality across styles from photorealistic to anime to minimalist designs. It rivals OpenAI's gpt-image-1 for English text quality and leads for Chinese characters.

What makes this particularly exciting is the Apache 2.0 license, giving everyone access to professional-grade image generation and editing capabilities without restrictive terms.

Check out the technical blog article

Qwen3 4B: Updated Small Models With An Unbelievable Performance

Alibaba just dropped updated Qwen3-4B-Instruct and Qwen3-4B-Thinking models, and the benchmark results are outstanding for such compact models. These models are absolutely demolishing Google's Gemma 3n models on reasoning tasks, which were previously the gold standard for small, efficient AI. The "Thinking" variant especially shines in logic, math, science, and coding challenges that typically require much larger models to handle well.

What makes this a real game changer is the hardware implications: these models can run on smartphones and other resource-constrained devices while delivering reasoning capabilities that rival much larger models. Sure, Gemma still has the edge with multimodal support, but for pure reasoning power in a tiny package, Qwen3 4B just reset the bar.

Qwen3-30B-A3B: GPT-4o Performance on Your Own Hardware

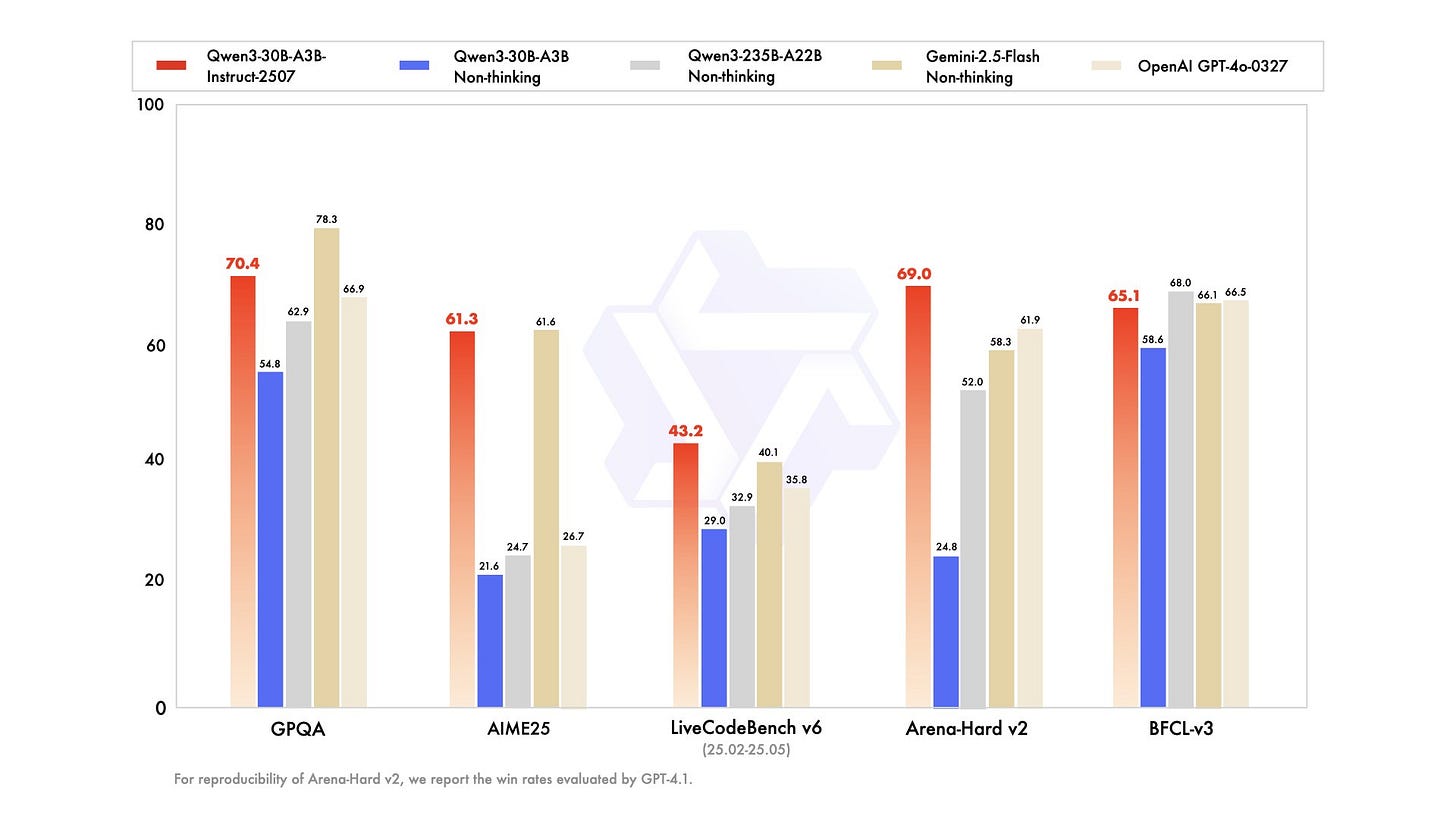

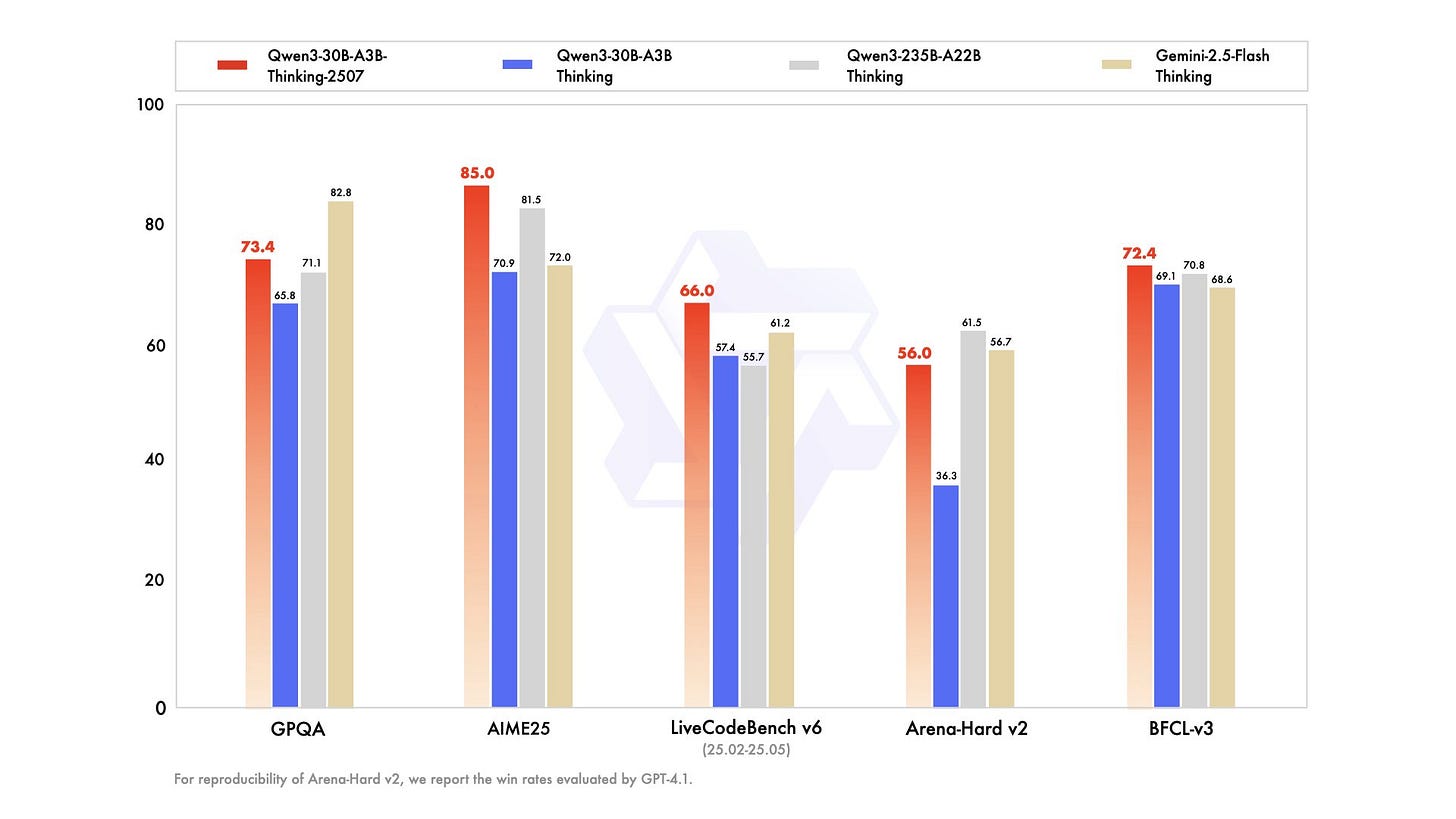

After the release of the Qwen3-235B-A22B discussed in the last two issues, Alibaba quietly dropped updated versions of their smaller mixture-of-expert model Qwen3-30B-A3B.

The models approach GPT-4o and Gemini2.5-Flash Thinking performance while using only 3B activated parameters. This puts flagship-level AI capabilities on consumer hardware. The 256K context window (expandable to 1M tokens) handles massive documents and complex tasks.

What makes this particularly exciting is the independence factor: With decent consumer hardware you have no cloud dependencies, no API costs, and no data leaving your system. For anyone wanting GPT-4o-class performance without the recurring costs or privacy concerns of cloud services, this represents a major shift toward a privacy-friendly, high-quality AI.

Read the full release notes on the model cards shared on HuggingFace for the non-thinking version and the thinking version of Qwen3-30B-A3B.

Qwen3-Coder-Flash: Fast Coding AI for Your Own Hardware

Alibaba launched Qwen3-Coder-Flash, a 30B parameter mixture-of-expert coding specialist that brings highly advanced programming capabilities to consumer GPUs. It supports native 256K context (expandable to 1M tokens), and handles function calling and agent workflows smoothly, making it practical for real development environments.

While the code quality sits slightly below the currently for coding widely used Claude 4 Sonnet, it is a game changer for everyone working on sensitive projects that need local inference. For everyone else it is likely still preferable to use the best available models for coding as small differences in the output decide if you face a debugging session or have working code.

Disclaimer: This newsletter is written with the aid of AI. I use AI as an assistant to generate and optimize the text. However, the amount of AI used varies depending on the topic and the content. I always curate and edit the text myself to ensure quality and accuracy. The opinions and views expressed in this newsletter are my own and do not necessarily reflect those of the sources or the AI models.