🤝 The Secret to AI Productivity

Dear curious mind,

In this issue, we explore the new perspective shared by the person behind the “Building a Second Brain” (BASB) approach, Tiago Forte. You will learn why his perspective matters now more than ever, and how his evolving approach can help you stay ahead in a rapidly changing digital landscape.

In this issue:

💡 Shared Insight

Tiago Forte Returns: Rethinking Note-Taking in the AI Era

📰 AI Update

Qwen3 Gets Official Quantized Models

LTXV13: Real-Time Video Generation on Your GPU

🌟 Media Recommendation

Podcast: The AI advantage with Tiago Forte

Video: Unlocking AI with the Master Prompt Method by Tiago Forte & Hayden Miyamoto

Paper: The Impact of Quantization on Qwen3 Performance

💡 Shared Insight

Tiago Forte Returns: Rethinking Note-Taking in the AI Era

If you’ve been following the world of digital note-taking and personal knowledge management (PKM), you will know the name Tiago Forte. After a period of being relatively quiet, Tiago is back in the spotlight. He recently hosted a live webinar, published new blog articles, and made podcast appearances. For those of us who follow his insights about personal knowledge management and participated in his "Building a Second Brain" (BASB) cohorts (I was part of cohort 15 and learned a lot!), this is exciting news. His PARA and CODE frameworks still form the backbone of my own digital note system, which I’ve further adapted with insights from Nick Milo’s Linking Your Thinking, discussions with other PKM enthusiasts, and my own AI-first mindset.

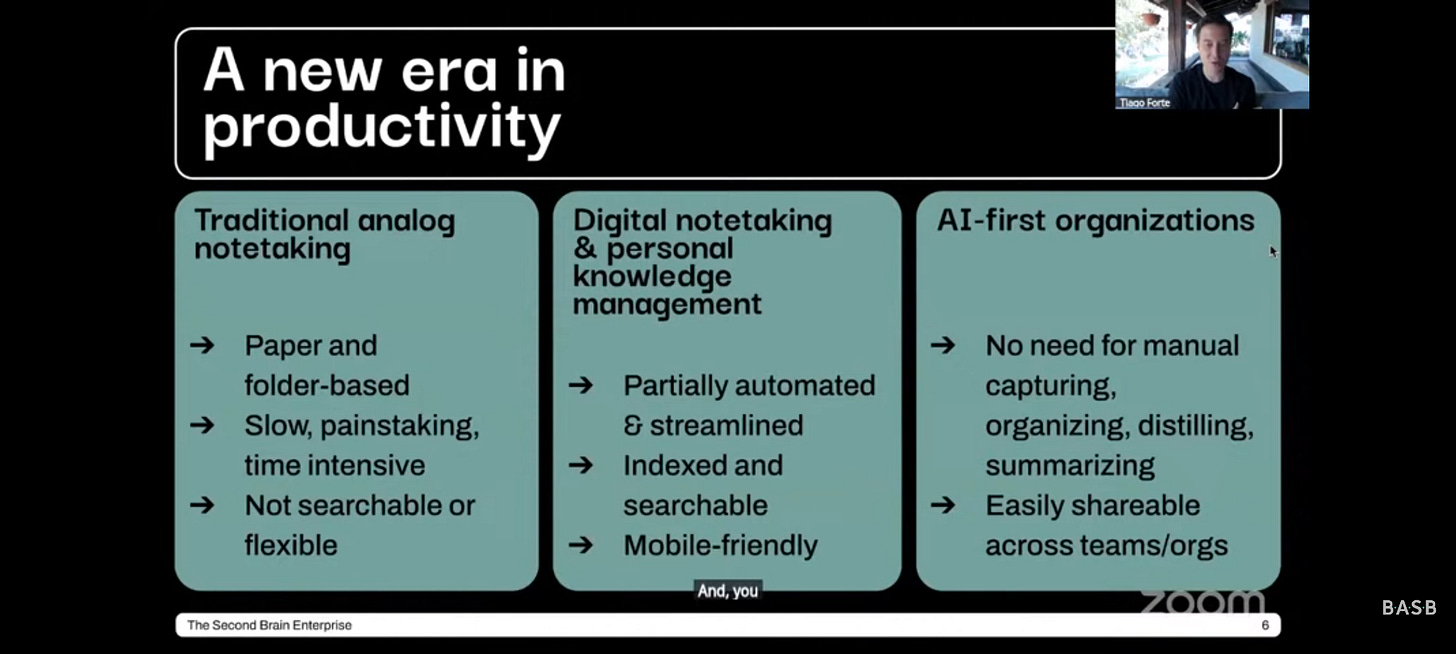

What’s especially interesting is why Tiago stepped back from teaching his BASB framework in cohorts. In the webinar (more on that in the media recommendation section below), he shared his realization that AI is fundamentally changing the landscape of note-taking and productivity. He stated that we are entering a new era, one where some of the core concepts of BASB are being reshaped or even made obsolete by AI-powered tools and workflows.

During the webinar, Tiago was joined by Hayden Miyamoto, a serial entrepreneur and investor known for scaling businesses. Together, they introduced the "master prompt method", a simple but underutilized approach: add as much background information as possible to your AI requests to get responses tailored to your specific situation.

If you are building a second brain (a curated set of digital notes), you already have the perfect foundation for this. Your notes become the raw material for highly personalized, context-rich AI interactions. I already made this point in the first issues of this newsletter nearly two years ago.
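To make the idea concrete, here is a minimal Python sketch of how notes could be combined into one background document and placed in front of a request. This is my own illustration, not code from the webinar: the function names `combine_notes` and `build_prompt`, the example notes, and the wording of the prompt are all assumptions.

```python
def combine_notes(notes: dict[str, str]) -> str:
    """Join titled notes into one background document (hypothetical helper)."""
    return "\n\n".join(
        f"## {title}\n{body.strip()}" for title, body in notes.items()
    )


def build_prompt(master_prompt: str, request: str) -> str:
    """Place the background document in front of a single request."""
    return (
        "Background information about me and my work:\n"
        f"{master_prompt.strip()}\n\n"
        "With that background in mind, please help with this request:\n"
        f"{request.strip()}"
    )


# Made-up example notes; in practice these would come from your own note system.
notes = {
    "Role": "I write a weekly newsletter about AI and note-taking.",
    "Audience": "Readers who are curious about AI but are not developers.",
}

prompt = build_prompt(combine_notes(notes), "Suggest three topics for the next issue.")
print(prompt)
```

The resulting text can be pasted into any chat assistant. The point is only that the request arrives together with your context, so the answer does not have to be generic.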

For those interested in applying these ideas at the organizational level, be sure to check out the full webinar or my summary of the webinar in the media recommendation section of this newsletter issue.

The future of productivity is being rewritten by AI, and Tiago’s new direction shows that he thinks so too.

📰 AI Update

Qwen3 Gets Official Quantized Models [Reddit post]

The Qwen team has released official quantized versions of their Qwen3 models, making it easier to run them on local hardware with lower resource requirements. FP8 (8-bit floating point) quantizations are available for all models, while only two models are offered in the even more compressed Int4 (4-bit integer) format. However, it’s not yet clear whether these official quantizations perform better than the community-made versions that have been available so far. A direct comparison would be helpful.

LTXV13: Real-Time Video Generation on Your GPU [GitHub repository]

Lightricks, the Israeli software company best known for its one-tap face-editing app Facetune, has unveiled LTXV 13B v0.9.7. This new iteration of their video generation model is capable of producing high-quality, 30 FPS videos at 1216×704 resolution in real time on high-end consumer GPUs. Trained on a massive, diverse video dataset, LTX-Video supports a wide range of features including text-to-image, image-to-video, keyframe animation, video extension (forward and backward), and video-to-video transformations. With these capabilities, it is one of the most versatile video generation models released so far. However, despite its public open-weights release, LTX-Video is not truly open-source, as companies with more than $10M in annual revenue need to acquire a paid licence. If you have a powerful GPU, you can start exploring the model on your own system within the well-established ComfyUI.

🌟 Media Recommendation

Video: Unlocking AI with the Master Prompt Method by Tiago Forte & Hayden Miyamoto

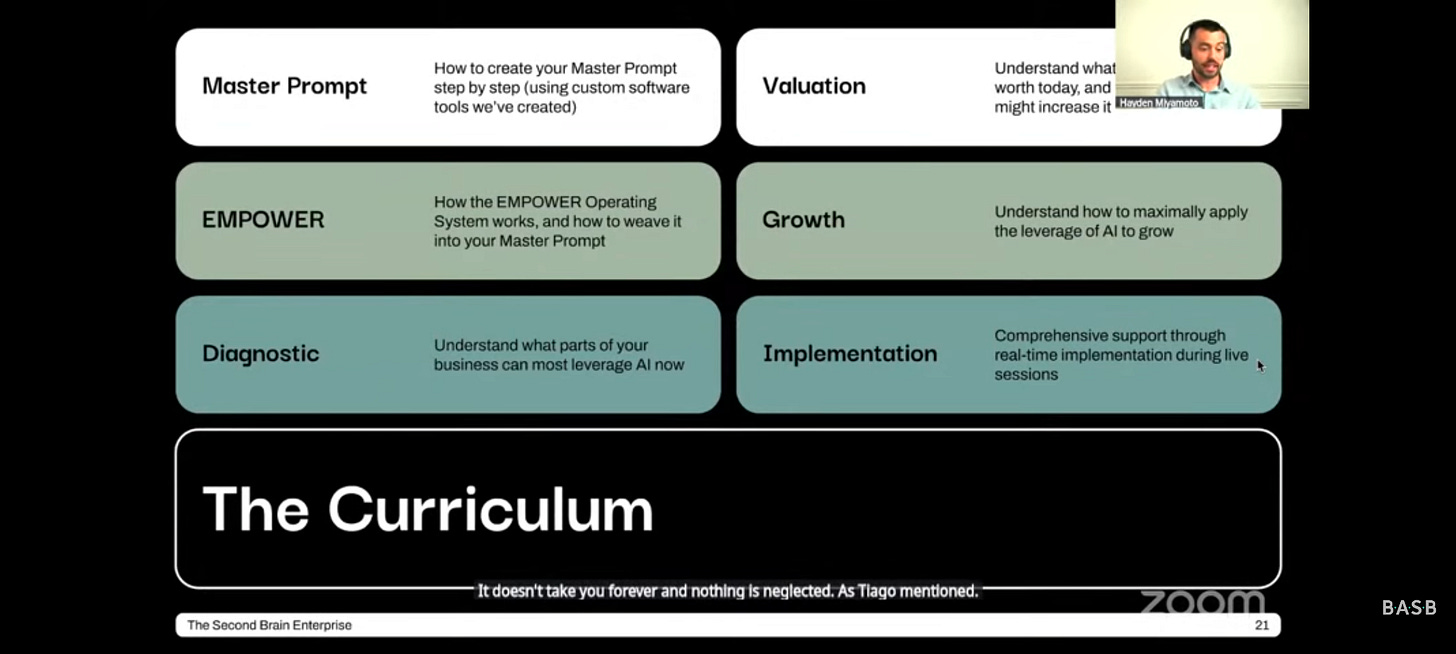

The “Master Prompt Method” is an approach to leverage AI for personal and organizational knowledge management, as presented by Tiago Forte and Hayden Miyamoto in their webinar.

A “master prompt” is a living document (sometimes 20+ pages long) that contains your unique context, enabling AI to deliver highly personalized and effective results.

The webinar discusses how this approach can revolutionize personal and, especially, business operations. Using AI with the "master prompt" makes it possible for small teams or even individuals to run large-scale businesses.

Tiago and Hayden emphasize focusing on transformational, not just operational, uses of AI. One example they give is accelerating hiring and onboarding through an AI-first approach that draws on all relevant company information in the "master prompt" document.

The session also introduces a framework for evaluating the impact of AI on business and discusses AI privacy, the future of agents and the evolving role of the “second brain” in the age of AI.

At the end of the webinar, they presented their solution to make companies thrive in the age of AI: the Second Brain Enterprise program, a three-week live cohort with a price tag of $9,995.

All the information about the program is shared on a new domain http://secondbrainenterprise.com, which currently resolves to a Notion page.

My take: I am not sure how high the value of the live cohort will be, and every company or team has to judge for themselves if this program is a fit. At the same time, the core idea of the "Master Prompt Method" is valuable for everyone, including individuals, who want to future-proof their workflows with AI.

Podcast: The AI advantage with Tiago Forte

In a recent episode of the Infinite Loops podcast hosted by Jim O’Shaughnessy, Tiago Forte shares his vision for how AI and personal knowledge management are reshaping not just productivity, but our approach to life, work, and self-understanding.

Tiago gives a concrete example of how he is using Google’s NotebookLM as a life and business coach, feeding it 50k words of personal data (including journals, coaching notes, and personality assessments) to surface patterns and insights. Jim O’Shaughnessy is taking a similar approach, digitizing 40 years of journals for AI analysis.

By combining AI with personal data, hidden feelings and desires can be surfaced and interpreted for personal growth.

With everyone having access to the same LLMs, unique, non-digitized information like historical archives becomes a true competitive advantage.

Tiago warns that the cognitive gap between early AI adopters and others is already vast and likely to grow. He also notes that AI can be an equalizing force, blurring the lines between small and large organizations.

My take: With this episode, Tiago tries to generate interest in his new Second Brain Enterprise program. Nevertheless, he shares actionable insights in this interview for leveraging AI as a tool for self-awareness and creativity.

Paper: The Impact of Quantization on Qwen3 Performance

This recent paper investigates the effect of quantization (parameter compression) on the output quality of the Qwen3 models. The main take-away is that 4-bit and higher quantizations have nearly no negative effect on the output quality, especially for larger models. The example they point out is Qwen3-14B, which only has a 1% drop in MMLU performance under 4-bit quantization compared to the full-precision model, whereas Qwen3-0.6B suffers a drop of around 10% under the same setting.

My take: Quantization makes it possible to run more powerful models on consumer GPUs with a limited amount of VRAM. Understanding the effects of this compression is crucial for choosing the best models you can run on your own devices, and therefore in a privacy-friendly setup where no personal data is sent to the cloud.
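As a rough back-of-the-envelope illustration of why this matters for VRAM, the following Python sketch estimates the memory needed for the model weights alone at different bit widths. The figures are my own approximation, not numbers from the paper: I assume the nominal parameter counts from the model names, 16 bits per parameter for the full-precision model, and I ignore everything besides the weights (context cache, activations, and the small overhead of quantization metadata).

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate size of the weights in GB: parameters x bits / 8 bytes."""
    return num_params * bits_per_param / 8 / 1e9


# Nominal parameter counts taken from the model names (an assumption).
models = [("Qwen3-0.6B", 0.6e9), ("Qwen3-14B", 14e9)]

for name, params in models:
    for bits in (16, 8, 4):
        size = weight_memory_gb(params, bits)
        print(f"{name} at {bits}-bit: ~{size:.1f} GB")
```

Under these assumptions, the weights of a 14B model shrink from about 28 GB at 16-bit to about 7 GB at 4-bit. Actual memory use will be higher, so treat the result as a lower bound when checking what fits on your GPU.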

Disclaimer: This newsletter is written with the aid of AI. I use AI as an assistant to generate and optimize the text. However, the amount of AI used varies depending on the topic and the content. I always curate and edit the text myself to ensure quality and accuracy. The opinions and views expressed in this newsletter are my own and do not necessarily reflect those of the sources or the AI models.