🤝 Your glasses will remember everything

Dear curious mind

Today we explore the intersection of AI and wearable technology. We dive into the concept of smart glasses equipped with AI that can process what you see, essentially giving you 'perfect memory'.

Let's explore what it means when your glasses don't just help you see, but also help your AI understand the world around you.

In this issue:

💡 Shared Insight

When Your Glasses Have Perfect Memory

📰 AI Update

Jan-nano: A 4B Model Challenging DeepSeek-V3 in Web Search

OpenHands CLI: Flexible Alternative to Claude Code

🌟 Media Recommendation

Podcast: Turn Ideas into Reality with Lovable

💡 Shared Insight

When Your Glasses Have Perfect Memory

Ever notice how a single screenshot can save you five paragraphs of explanation when you are chatting with an AI? Images are contextual gold: they tell the model what app I am in, which menu I am staring at, even the error code I encounter. Now that not only the major AI models but also more and more of their open-weight competitors can process images alongside text, I am leaning more on pictures to get answers tailored to my specific request.

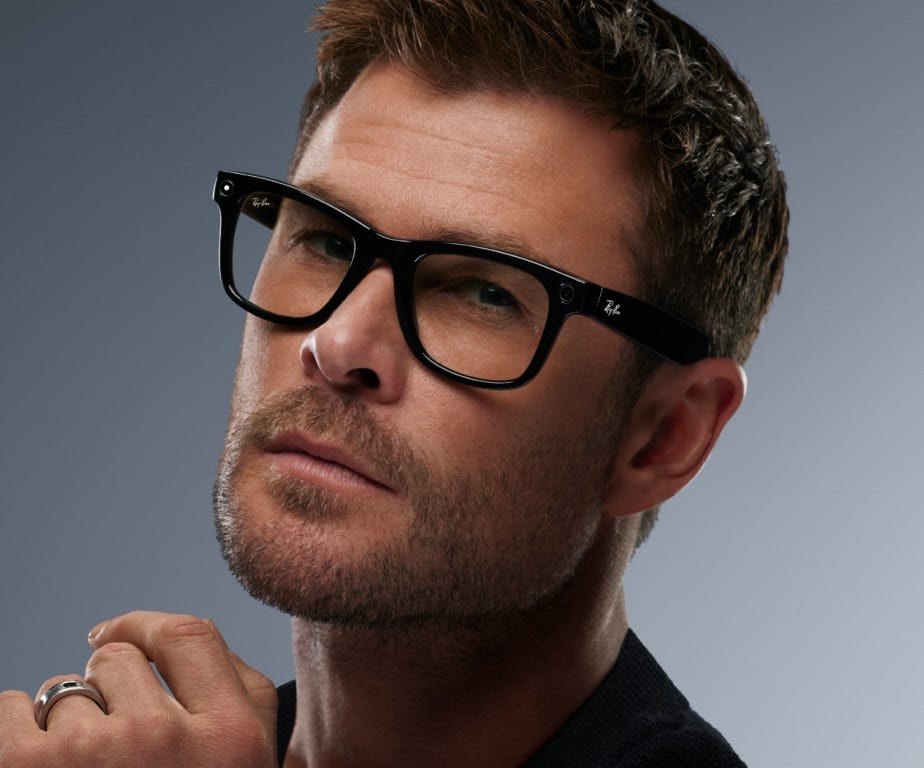

Now zoom out from a laptop or phone screenshot to an always-on camera sitting on your nose, and you understand why smart glasses feel like the next big unlock for using chatbots.

You have likely seen the Ray-Ban Meta glasses in public, perhaps without recognizing them. They look like classic Ray-Ban Wayfarers, but they can take photos, film clips and, with Meta’s AI integration, identify objects or translate a sign. They are selling so well that EssilorLuxottica, the company behind the Ray-Ban brand, highlighted the Ray-Ban Meta glasses as one of the strongest US revenue drivers in its Q3 2024 press release. They are also a success in Europe, as EssilorLuxottica CFO Stefano Grassi stated:

On the frame side, I would say we continue to see strong demand from Ray-Ban Meta. It’s an overall success story that we see. Just to give you an idea, it’s not just a success in the U.S., where it’s obvious, but it’s also success in — success here in Europe. Just to give you an idea, in 60% of the Ray-Ban stores in Europe, in EMEA, Ray-Ban Meta is the best-seller in those stores. So it’s something that it’s extremely pleasing.

Google clearly thinks the moment has arrived, too. After the initial attempt with Google Glass in 2013, they showed their Android XR glasses with a front-facing camera and microphone at the Google I/O developer conference a few weeks ago. They demoed how you can ask for directions, get live subtitles in another language, book a table, and recall a fact from something you saw earlier.

Put these pieces together, and you have the first mainstream hardware for image-native prompts. The upside is gigantic:

Context on tap. The model knows whether I am looking at a broken espresso machine or a spreadsheet, so it has enough context to give me tailored advice.

Hands-free recall. Missed the street sign or need the serial number under your desk? The AI can retrieve the specific image frame.

Unique coaching. Get live feedback while you perform digital or physical tasks. Subtle hints like “your drill is tilted” or “that is the 5 mm hex, not the 4 mm” might help you get things done better.

The Privacy Aspect

But there is a giant elephant in the room: if my glasses can remember everything I see, they can remember everything you do when you are near me. Society decided in the past that this is creepy, and people wearing the original Google Glass got sent out of cafés and were called "Glassholes". So far, however, I am not aware of the same happening with the Meta glasses, maybe because of their less obvious appearance. I think we will see situational acceptance: for example, camera-equipped smart glasses will be accepted at concerts and other public events, essential in certain jobs, and unwanted in other jobs and in many private situations.

The Trust Aspect

There will be users who do not care at all what happens with the data, but I am looking forward to running the AI locally and keeping the raw video on-device. There should be hardware and software audits to verify that nothing leaks. Even if it is difficult to implement and verify, there should be a hardware shutter or LED that guarantees the camera is off.

Smart glasses will not wait for approval, as the upsides are too compelling. But if we bake in privacy-by-design now, we get the upside of photographic memory without turning every sidewalk into a surveillance zone. I hope that smart glasses will allow us to see the world more clearly, without making the world see us more than it should.

📰 AI Update

Jan-nano: A 4B Model Challenging DeepSeek-V3 in Web Search [Hugging Face model card]

Jan-nano is a fine-tuned Qwen3-4B model designed to use search tools and extract information from the results. According to the released benchmarks, it outperforms the much larger DeepSeek-V3, with 671B parameters, at using web search to answer questions from the SimpleQA benchmark created by OpenAI. I really like the idea of small specialized models that run locally. However, a web search is not a local call anymore, which somewhat undermines the point of using a local model. On the other hand, small models cannot contain all the world's knowledge, and giving them the ability to search the web to answer questions will help improve the output quality.

I tested the model inside the beta version of Jan, which is an alternative chat user interface and a competitor of tools like LM Studio. At least with my basic prompting and use of the brave-search MCP server, the results were underwhelming. For me, it will not replace ChatGPT with web search enabled, which is my current go-to solution for requests that need up-to-date information.

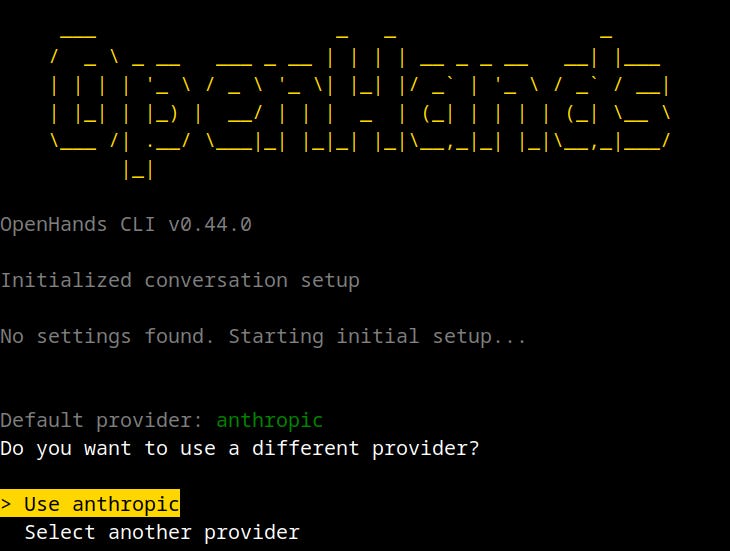

OpenHands CLI: Flexible Alternative to Claude Code [All Hands blog]

All Hands has rolled out an MIT-licensed command line interface (CLI) to initiate and observe the work of coding agents. This gives developers an alternative to Claude Code and OpenAI Codex CLI without the model lock-in. In OpenHands CLI you can use cloud-based models from all major providers as well as local models. However, even the latest and best local models still lag behind in their results and are no real joy to use for most coding tasks. My current advice is to use the cloud-based models if you are allowed to do so.

🌟 Media Recommendation

Podcast: Turn Ideas into Reality with Lovable

In a recent episode of The Next Wave podcast, Matt Wolfe interviews Anton Osika, CEO of Lovable, a revolutionary software platform that lets anyone build and launch software using AI. Using the service requires no experience in software development. Lovable can create fully functional web applications and even host them for you on your own domain.

In the episode, Anton gives a live demo of Lovable. He reveals how creators of all ages, including kids and solo founders, are launching real businesses with their software.

My take: Quite some time ago, I talked to someone who had explored Lovable and was totally stunned by the possibilities. That already made me curious, but after listening to this podcast episode, I realized that I should also give it a go and see what I can create with it. Exciting times we are living in!

Disclaimer: This newsletter is written with the aid of AI. I use AI as an assistant to generate and optimize the text. However, the amount of AI used varies depending on the topic and the content. I always curate and edit the text myself to ensure quality and accuracy. The opinions and views expressed in this newsletter are my own and do not necessarily reflect those of the sources or the AI models.